Scientists discovered that by adding 30 lines of code to the Linux operating system, they could dramatically reduce the amount of energy that data centers consume.

Scientists have found evidence that a species of fungus can sense its surroundings and make strategic decisions.

A new study suggests that the fungus *Phanerochaete velutina* may have a surprising ability: recognizing shapes and adjusting its growth strategy accordingly.

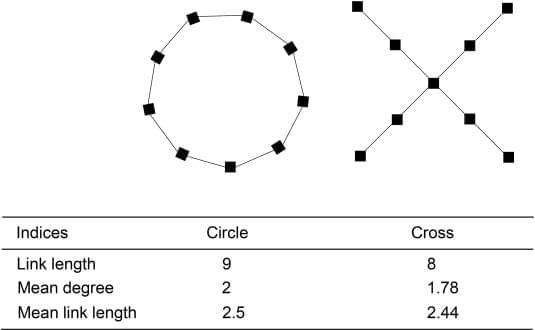

Researchers from Tohoku University conducted experiments in which the fungus was placed on blocks arranged in different spatial patterns, and observed how it spread. Rather than expanding indiscriminately, the mycelium formed connections, retracted excess strands, and focused its foraging in strategic directions.

In circular arrangements, tendrils avoided the center, while in cross-shaped formations, the outermost blocks served as primary hubs for exploration.

This behavior hints at a level of perception and decision-making previously unrecognized in fungi.

The findings add to growing evidence that fungi exhibit a form of primitive intelligence, capable of memory, learning, and problem-solving. Scientists believe this research could expand our understanding of cognition in non-animal organisms, as well as inspire innovations in bio-computing.

The ability of fungal networks to process information and optimize resource allocation without a brain challenges conventional ideas about intelligence. As studies continue, fungi may offer fascinating insights into decentralized decision-making and ecological adaptation.

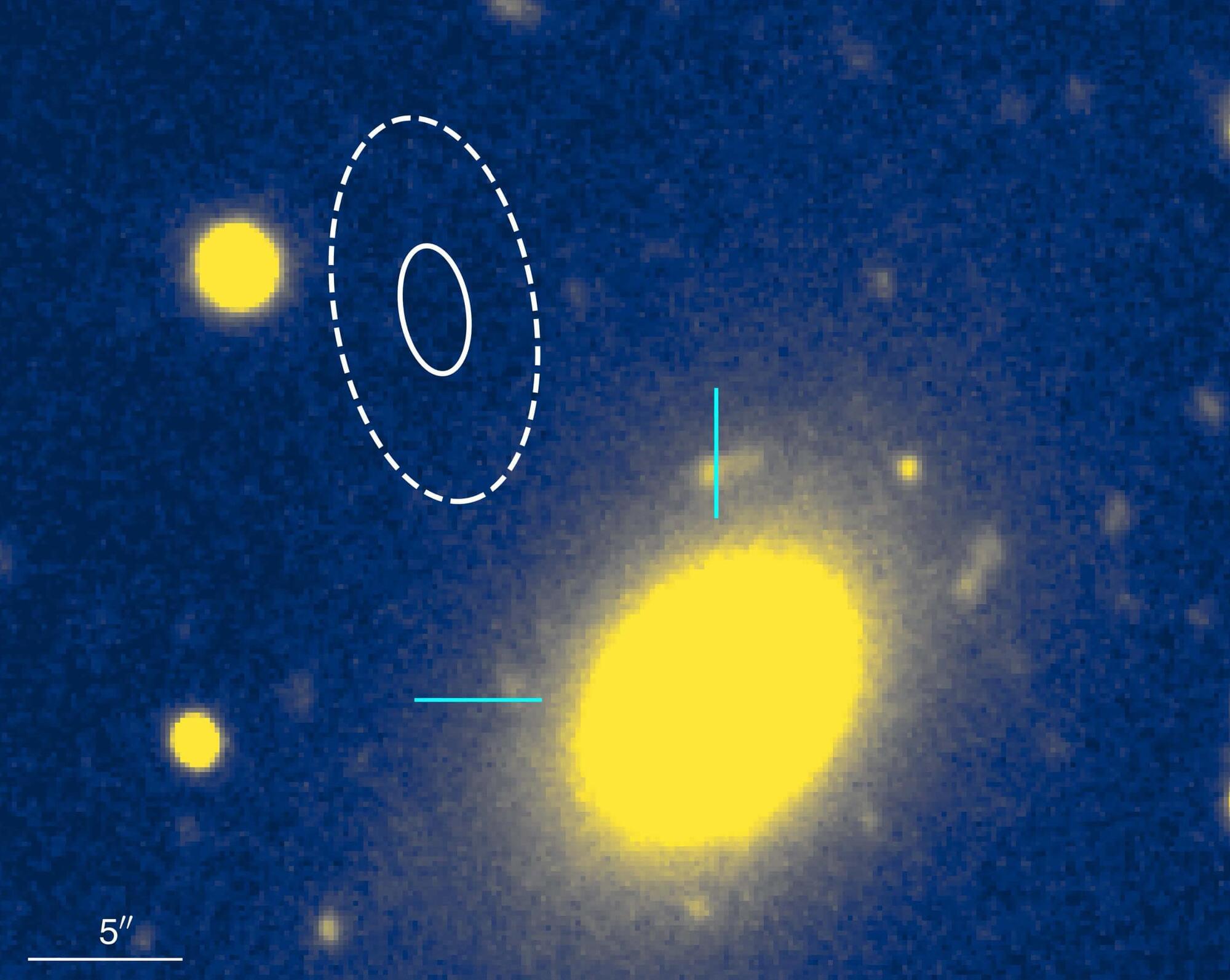

Astronomer Calvin Leung was excited last summer to crunch data from a newly commissioned radio telescope to precisely pinpoint the origin of repeated bursts of intense radio waves—so-called fast radio bursts (FRBs)—emanating from somewhere in the northern constellation Ursa Minor.

Leung, a Miller Postdoctoral Fellowship recipient at the University of California, Berkeley, hopes eventually to understand the origins of these mysterious bursts and use them as probes to trace the large-scale structure of the universe, a key to its origin and evolution. He had written most of the computer code that allowed him and his colleagues to combine data from several telescopes to triangulate the position of a burst to within a hair’s width at arm’s length.

The excitement turned to perplexity when his collaborators on the Canadian Hydrogen Intensity Mapping Experiment (CHIME) turned optical telescopes on the spot and discovered that the source was in the distant outskirts of a long-dead elliptical galaxy that by all rights should not contain the kind of star thought to produce these bursts.
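Combining data from several telescopes works because a burst's wavefront reaches each station at a slightly different time, and that delay encodes direction. The sketch below illustrates the geometry for a single pair of stations under simplifying assumptions: an ideal plane wave, and no Earth rotation, atmosphere, clock errors, or the signal-correlation step real arrays use to measure the delay. It is not CHIME's localization pipeline; the function names, the 100 km baseline, and the 1 ns timing error are hypothetical.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def geometric_delay(baseline_m: float, angle_rad: float) -> float:
    """Extra time a plane wavefront needs to reach the farther of two stations.

    angle_rad is the angle between the baseline and the direction to the source.
    """
    return baseline_m * math.cos(angle_rad) / C


def angle_from_delay(baseline_m: float, delay_s: float) -> float:
    """Invert a measured delay to recover the source angle relative to the baseline."""
    ratio = C * delay_s / baseline_m
    if not -1.0 <= ratio <= 1.0:
        raise ValueError("delay exceeds the light-travel time across the baseline")
    return math.acos(ratio)


def angle_error(baseline_m: float, angle_rad: float, timing_error_s: float) -> float:
    """First-order angular uncertainty caused by a timing error: c*dt / (B*sin(theta))."""
    return C * timing_error_s / (baseline_m * math.sin(angle_rad))


# Hypothetical values, chosen only for illustration
baseline = 100e3                  # 100 km between the two stations
true_angle = math.radians(60.0)   # source 60 degrees from the baseline direction
tau = geometric_delay(baseline, true_angle)
recovered = math.degrees(angle_from_delay(baseline, tau))
err_arcsec = math.degrees(angle_error(baseline, true_angle, 1e-9)) * 3600

print(f"delay = {tau * 1e6:.3f} microseconds, recovered angle = {recovered:.6f} deg")
print(f"a 1 ns timing error shifts the angle by about {err_arcsec:.2f} arcsec")
```

One baseline only pins the source to a cone at a fixed angle from that baseline; adding stations along other directions intersects those cones, and the error formula shows why longer baselines and finer timing sharpen the position.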

Most metals expand as their temperature rises. The Eiffel Tower, for example, stands about 10 to 15 centimeters taller in summer than in winter due to thermal expansion. However, this effect is highly undesirable for many technical applications. As a result, researchers have long sought materials that maintain a constant length regardless of temperature. One such material is Invar, an iron-nickel alloy known for its extremely low thermal expansion. The physical explanation for this property, however, remained unclear until recently.

Now, a collaboration between theoretical researchers at the Vienna University of Technology (TU Wien) and experimentalists at the University of Science and Technology Beijing has led to a significant breakthrough. Using complex computer simulations, they have unraveled the invar effect in detail and developed a so-called pyrochlore magnet: an alloy with even better thermal expansion properties than Invar. Over an exceptionally wide temperature range of more than 400 kelvins, its length changes by only about one ten-thousandth of one percent per kelvin.
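The figures above rest on the linear-expansion rule ΔL = αLΔT, where α is a material's expansion coefficient. The rough sketch below applies it to the Eiffel Tower under assumed inputs (a height of about 300 m, α ≈ 12 × 10⁻⁶ per kelvin for iron, a typical Invar value of about 1.2 × 10⁻⁶ per kelvin, and seasonal swings of 30 to 40 kelvins), then writes out the pyrochlore magnet's quoted coefficient exactly: one ten-thousandth of one percent is 10⁻⁴ × 10⁻² = 10⁻⁶, one part per million per kelvin. None of the assumed inputs come from the study, and the 1 m bar is hypothetical.

```python
from fractions import Fraction


def length_change(alpha_per_k: float, length_m: float, delta_t_k: float) -> float:
    """Linear thermal expansion, delta_L = alpha * L * delta_T (valid when alpha * delta_T << 1)."""
    return alpha_per_k * length_m * delta_t_k


# Assumed inputs for a rough Eiffel Tower estimate; none of these come from the study
HEIGHT_M = 300.0                  # approximate height of the iron structure
ALPHA_IRON = 12e-6                # expansion coefficient of iron/steel, per kelvin
ALPHA_INVAR = 1.2e-6              # typical Invar value near room temperature, per kelvin
SEASONAL_SWINGS_K = (30.0, 40.0)  # summer-to-winter temperature differences

for delta_t in SEASONAL_SWINGS_K:
    iron_cm = 100 * length_change(ALPHA_IRON, HEIGHT_M, delta_t)
    invar_cm = 100 * length_change(ALPHA_INVAR, HEIGHT_M, delta_t)
    print(f"delta_T = {delta_t:.0f} K: iron {iron_cm:.1f} cm, Invar {invar_cm:.2f} cm")

# The pyrochlore magnet's quoted coefficient: "one ten-thousandth of one percent per kelvin"
alpha_pyro = Fraction(1, 10_000) * Fraction(1, 100)  # one part per million per kelvin
RANGE_K = 400   # the article says "more than 400"; 400 is used here
BAR_MM = 1000   # hypothetical 1 m sample
# Upper bound, assuming |alpha| never exceeds the quoted value anywhere in the range
max_fraction = alpha_pyro * RANGE_K
print(f"pyrochlore: alpha = {float(alpha_pyro):.0e} per K; over {RANGE_K} K at most "
      f"{float(max_fraction) * 100:.2f} % = {float(max_fraction * BAR_MM):.1f} mm on a 1 m bar")
```

With these assumptions the iron estimate comes out at 10.8 to 14.4 cm, in line with the range quoted above, and an Invar structure would move about a tenth as much. If the pyrochlore magnet's coefficient never exceeds its quoted magnitude over the 400-kelvin range, a 1 m bar changes length by at most 0.04 percent, or 0.4 mm.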

Time, by its very nature, is a paradox. We live anchored in the present, yet we are constantly traveling between the past and the future—through memories and aspirations alike. Technological advancements have accelerated this relationship with time, turning what was once impossible into a tangible reality. At the heart of this transformation lies Artificial Intelligence (AI), which, far from being just a tool, is becoming an extension of the human experience, redefining how we interact with the world.

In the past, automatic doors were the stuff of science fiction. Paper maps were essential for travel. Today, these have been replaced by smart sensors and navigation apps. The smartphone, a small device that fits in the palm of our hand, has become an extension of our minds, connecting us to the world instantly. Even its name reflects its evolution—from a mere mobile phone to a “smart” device, now infused with traces of intelligence, albeit artificial.

And it is in this landscape that AI takes center stage. The debate over its risks and benefits has been intense. Many fear a stark divide between humans and machines, as if they are destined for an inevitable clash. But what if, instead of adversaries, we saw technology as an ally? The fusion of human and machine is already underway, quietly shaping our daily lives.

When applied effectively, AI becomes a discreet assistant, capable of anticipating our needs and enhancing productivity. Studies suggest that by 2035, AI could double annual economic growth rates, transforming not only business but society as a whole. Naturally, some jobs will disappear, but new ones will emerge. History has shown that evolution is inevitable and that the future belongs to those who adapt.

But what about AI’s role in our personal lives? From music recommendations tailored to our mood to virtual assistants that complete our sentences before we do, AI is already recognizing behavioral patterns in remarkable ways. Through machine learning, computer systems do more than just store data: they learn from it, dynamically adjusting and improving. Deep learning takes this concept even further, using many-layered artificial neural networks, loosely inspired by the brain, to categorize information and make decisions based on probabilities.
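As a minimal illustration of "learning from data", the sketch below fits a logistic regression, one of the simplest machine-learning models, by gradient descent: each pass nudges two parameters to reduce prediction error, and the trained model outputs a probability that drives a yes/no decision. The toy "song energy" data and all names are hypothetical, and this is not how any particular recommendation service works.

```python
import math


def sigmoid(z: float) -> float:
    """Squash any real number into a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(xs: list[float], ys: list[int], lr: float = 0.5, epochs: int = 2000):
    """Fit p(y = 1 | x) = sigmoid(w * x + b) by batch gradient descent on the log-loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            error = sigmoid(w * x + b) - y  # predicted probability minus the observed label
            grad_w += error * x
            grad_b += error
        # Learn from the data: move both parameters a small step against the gradient
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


# Hypothetical toy data: a song's "energy" score and whether a listener kept it (1) or skipped it (0)
energy = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
kept = [0, 0, 0, 0, 1, 1, 1, 1]

w, b = train_logistic(energy, kept)
for x in (0.15, 0.35, 0.65, 0.85):
    p = sigmoid(w * x + b)
    decision = "recommend" if p >= 0.5 else "skip"
    print(f"energy = {x:.2f}: p(kept) = {p:.2f} -> {decision}")
```

Deep learning applies the same idea, adjusting parameters to reduce error, to networks with many layers and millions of parameters.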

But what if the relationship between humans and machines could transcend time itself? What if we could leave behind an interactive digital legacy that lives on forever? This is where a revolutionary concept emerges: digital immortality.

ETER9 is a project that embodies this vision, exploring AI’s potential to preserve interactive memories, experiences, and conversations beyond physical life. Imagine a future where your great-grandchildren could “speak” with you, engaging with a digital presence that reflects your essence. More than just photos or videos, this would be a virtual entity that learns, adapts, and keeps individuality alive.

The truth is, whether we realize it or not, we are all being shaped by algorithms that influence our online behavior. Platforms like Facebook are designed to keep us engaged for as long as possible. But is this the right path? A balance must be found—a point where technology serves humanity rather than the other way around.

We don’t change the world through empty criticism. We change it through innovation and the courage to challenge the status quo. Surrounding ourselves with intelligent people is crucial; if we are the smartest in the room, perhaps it’s time to find a new room.

The future has always fascinated humanity. The unknown evokes fear, but it also drives progress. Many of history’s greatest inventions were once deemed impossible. But “impossible” is only a barrier until it is overcome.

Sometimes, it feels like we are living in the future before the world is ready. But maturity is required to absorb change. Knowing when to pause and when to move forward is essential.

And so, in a present that blends with the future, we arrive at the ultimate question:

What does it mean to be eternal?

Perhaps the answer lies in our ability to dream, create, and leave a legacy that transcends time.

After all, isn’t digital eternity our true journey through time?

__

Copyright © 2025, Henrique Jorge

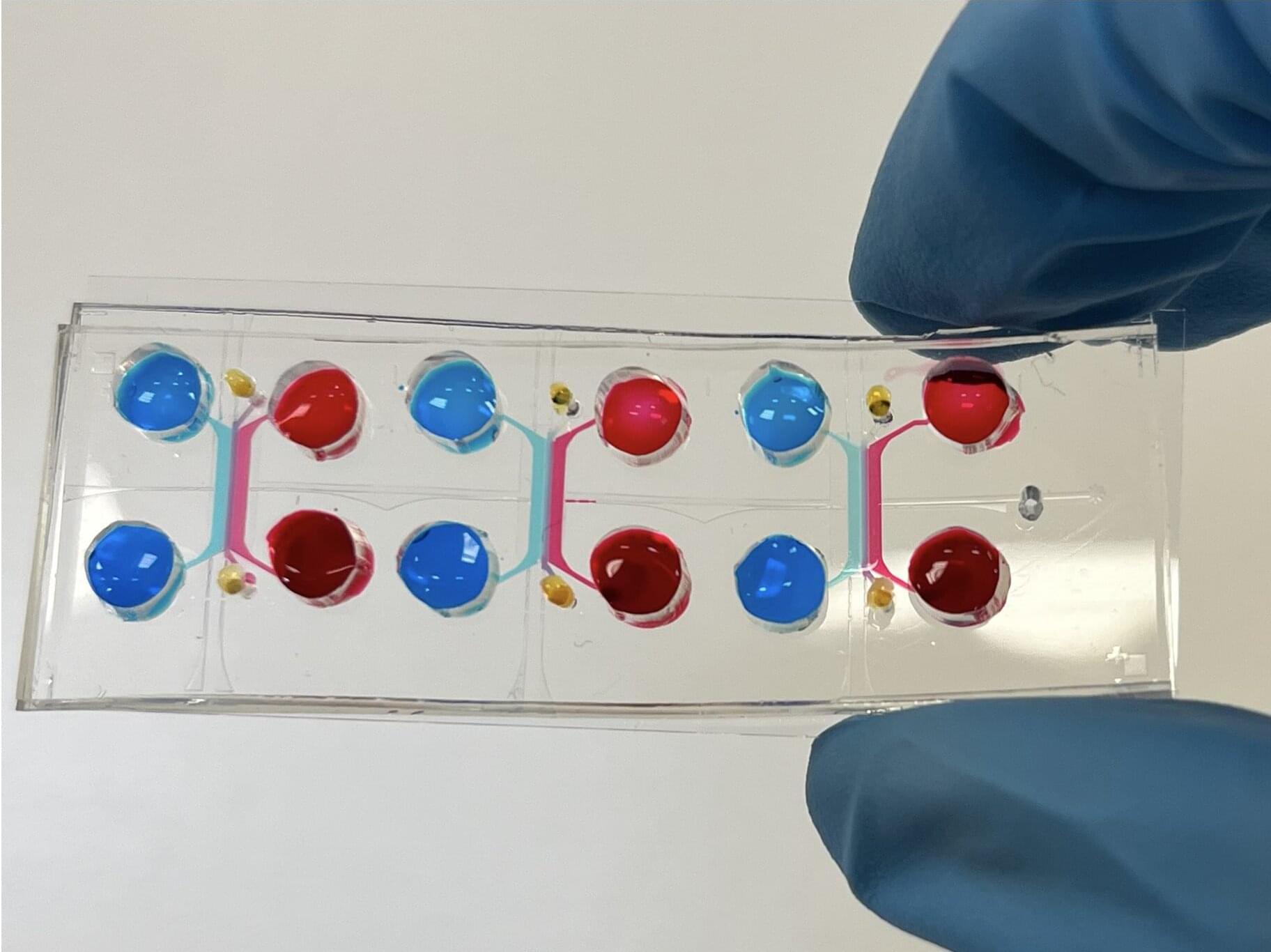

A team of researchers has developed a “gut-on-chip” (a miniature model of the human intestine on a chip-sized device) capable of reproducing the main features of intestinal inflammation and of predicting the response of melanoma patients to immunotherapy treatment. The results have just been published in Nature Biomedical Engineering.

The interaction between microbiota and immunotherapy has long been known. It is the result of both systemic effects, i.e., the immune response elicited in the entire body by immunotherapy, and local processes, especially in the gut, where most of the bacteria that populate our body live. However, until now the latter could only be studied in animal models, with all their limitations.

Indeed, there is no clinical reason to subject a patient receiving immunotherapy for melanoma to colonoscopy and colon biopsy. Yet intestinal inflammation is one of the main side effects of this treatment, often forcing the therapy to be discontinued.

To test the new distributed system, the team executed Grover’s search algorithm, first described by Indian-American computer scientist Lov Grover in 1996. The algorithm looks for a particular item in a large, unstructured dataset by placing all candidates in superposition and repeatedly amplifying the probability of the correct one. It offers a quadratic speedup: for a dataset of N items, it needs on the order of the square root of N queries, whereas a classical search needs on the order of N. The authors report that the system achieved a 71 percent success rate.
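To make the quadratic speedup concrete, the sketch below classically simulates the ideal Grover algorithm on a state vector: the oracle flips the sign of the marked item's amplitude, and the diffusion step reflects every amplitude about the mean. After roughly (π/4)√N such iterations the marked item is found with high probability, versus up to N − 1 checks for a classical search. This is a noiseless, single-processor simulation with hypothetical function names; it does not model the teleportation-based distributed setup or the noise behind the reported 71 percent success rate.

```python
import math


def grover_iterations(n_items: int) -> int:
    """Roughly (pi/4) * sqrt(N) iterations maximize the marked item's probability."""
    return math.floor(math.pi / 4 * math.sqrt(n_items))


def grover_search(num_qubits: int, marked: int) -> list[float]:
    """Simulate ideal, noiseless Grover search on a state vector; return measurement probabilities."""
    n_items = 2 ** num_qubits
    # Uniform superposition: every one of the N basis states starts with amplitude 1/sqrt(N)
    amps = [1.0 / math.sqrt(n_items)] * n_items
    for _ in range(grover_iterations(n_items)):
        # Oracle: flip the sign of the marked item's amplitude
        amps[marked] = -amps[marked]
        # Diffusion: reflect every amplitude about the mean ("inversion about the average")
        mean = sum(amps) / n_items
        amps = [2 * mean - a for a in amps]
    return [a * a for a in amps]


for qubits, target in ((2, 2), (4, 11), (10, 700)):
    n = 2 ** qubits
    probs = grover_search(qubits, target)
    print(f"N = {n:4d}: {grover_iterations(n):2d} oracle queries, "
          f"P(marked) = {probs[target]:.3f}; classical search may need up to {n - 1} checks")
```

For N = 4 (two qubits) a single iteration suffices in the ideal case, and the marked item's probability reaches 1.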

While operating a successful distributed system is a big step forward for quantum computing, the team reiterates that the engineering challenges remain daunting. However, linking quantum processors into a distributed network through quantum teleportation offers a small glimmer of light at the end of a long, dark quantum computing development tunnel.

“Scaling up quantum computers remains a formidable technical challenge that will likely require new physics insights as well as intensive engineering effort over the coming years,” David Lucas, principal investigator of the study from Oxford University, said in a press statement. “Our experiment demonstrates that network-distributed quantum information processing is feasible with current technology.”

From punch-card-operated looms in the 1800s to modern cellphones, if an object has an “on” and an “off” state, it can be used to store information.

In a laptop, binary ones and zeroes are represented by transistors switched to high or low voltage. On a compact disc, a one is a spot where a tiny indented “pit” changes to a flat “land” or vice versa, while a zero is a spot where there’s no change.
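The compact-disc rule stores information in changes of the surface rather than in the surface itself, a scheme known as transition (NRZI-style) coding. The sketch below encodes a bit string as a pit/land track and decodes it back. It is a simplification: real CDs first pass data through eight-to-fourteen modulation (EFM) and enforce run-length limits, and the function names and starting surface here are hypothetical.

```python
def encode_transitions(bits: str, start: str = "land") -> list[str]:
    """Map bits to a pit/land track: a 1 toggles the surface, a 0 keeps it unchanged."""
    surface, track = start, []
    for bit in bits:
        if bit == "1":
            surface = "pit" if surface == "land" else "land"
        track.append(surface)
    return track


def decode_transitions(track: list[str], start: str = "land") -> str:
    """Recover bits by checking whether each position differs from the one before it."""
    previous, bits = start, []
    for surface in track:
        bits.append("1" if surface != previous else "0")
        previous = surface
    return "".join(bits)


data = "1001101"
track = encode_transitions(data)
print(track)
print(decode_transitions(track))
```

Because only changes carry meaning, the reader does not need to know in advance whether a given spot is a pit or a land, only where the surface switches.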

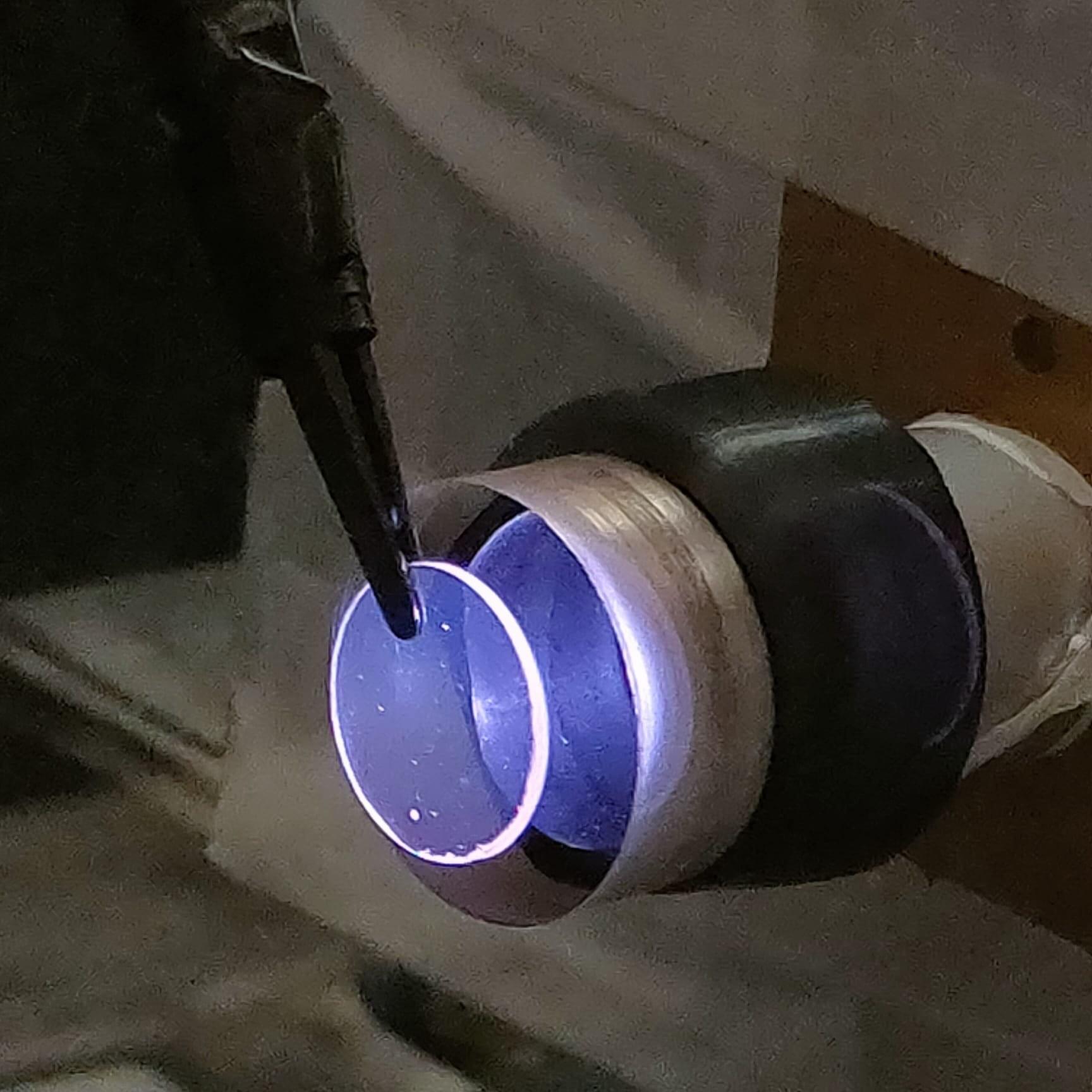

Historically, the size of the object making the “ones” and “zeroes” has put a limit on the size of the storage device. But now, University of Chicago Pritzker School of Molecular Engineering (UChicago PME) researchers have explored a technique to make ones and zeroes out of crystal defects, each the size of an individual atom, for classical computer memory applications.

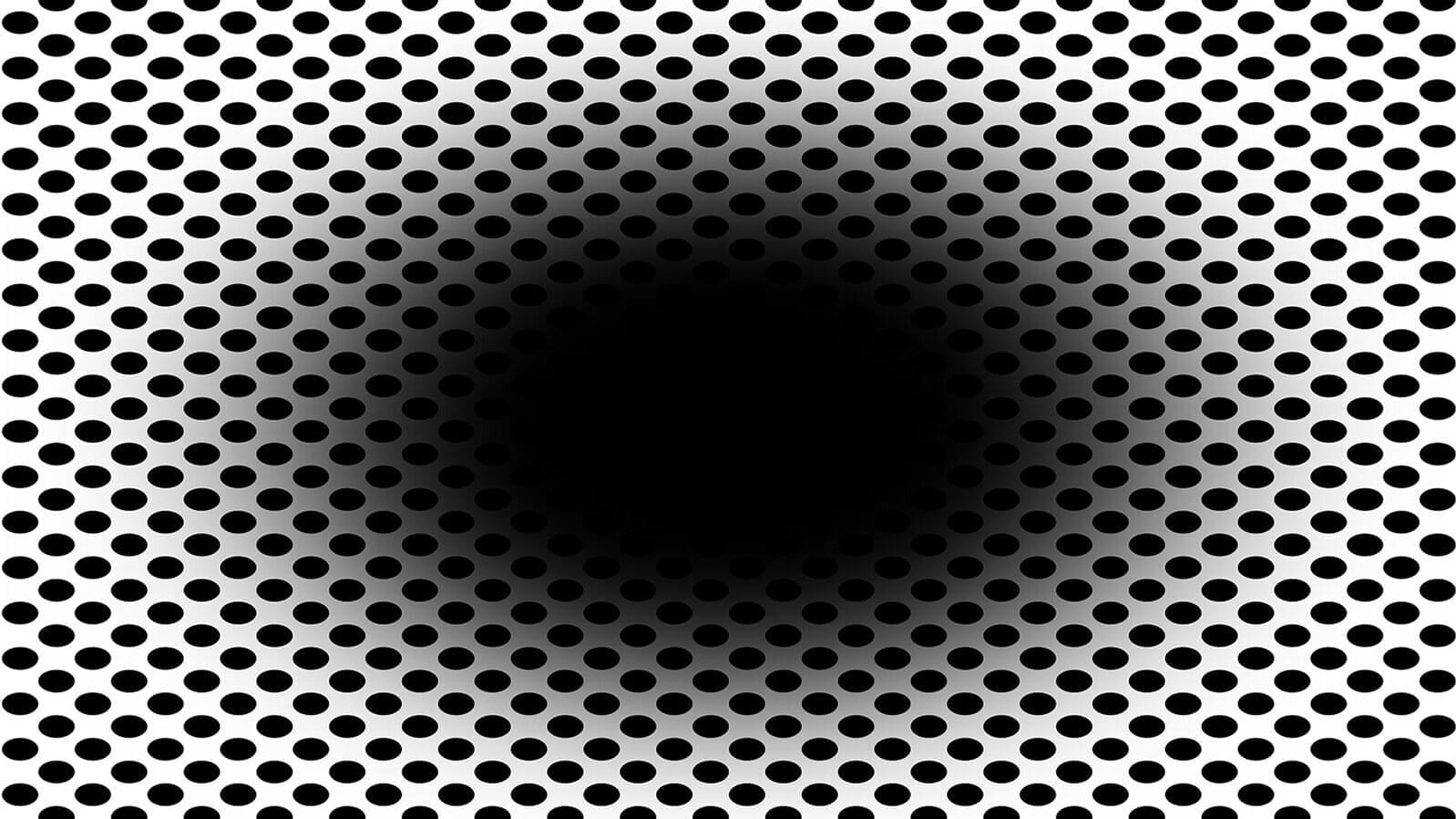

Our brain and eyes can play tricks on us—not least when it comes to the expanding hole illusion. A new computational model developed by Flinders University experts helps to explain how cells in the human retina make us “see” the dark central region of a black hole graphic expand outwards.

In a new article posted to the arXiv preprint server, the Flinders University experts highlight the role of the eye’s retinal ganglion cells in contrast processing and motion perception, and how messages from the cerebral cortex then give the beholder the impression of a moving or “expanding hole.”

“Visual illusions provide valuable insights into the mechanisms of human vision, revealing how the brain interprets complex stimuli,” says Dr. Nasim Nematzadeh, from the College of Science and Engineering at Flinders University.
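Retinal ganglion cells are classically modeled as center-surround filters, often a Difference of Gaussians (DoG): a narrow excitatory center minus a wider inhibitory surround. The sketch below applies such a filter to a dark-to-bright step and shows that it responds near zero over uniform regions and strongly at the edge, which is one concrete sense of "processing contrast". It is an assumed textbook model with hypothetical parameters (σ = 1 and 3 samples), not the Flinders team's model, and it does not by itself reproduce the expanding hole illusion.

```python
import math


def gaussian_kernel(sigma: float, radius: int) -> list[float]:
    """Sampled 1D Gaussian, normalized so its weights sum to 1."""
    weights = [math.exp(-(i * i) / (2 * sigma * sigma)) for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def dog_kernel(sigma_center: float, sigma_surround: float) -> list[float]:
    """Center-surround receptive field: narrow excitatory Gaussian minus wide inhibitory one."""
    radius = math.ceil(3 * sigma_surround)
    center = gaussian_kernel(sigma_center, radius)
    surround = gaussian_kernel(sigma_surround, radius)
    return [c - s for c, s in zip(center, surround)]


def convolve_clamped(signal: list[float], kernel: list[float]) -> list[float]:
    """Apply a symmetric kernel at every position, repeating edge values past the ends."""
    radius = len(kernel) // 2
    n = len(signal)
    out = []
    for i in range(n):
        acc = 0.0
        for k, weight in enumerate(kernel):
            j = min(max(i + k - radius, 0), n - 1)
            acc += weight * signal[j]
        out.append(acc)
    return out


# A dark-to-bright step edge: 0 for positions 0..49, 1 for positions 50..99
stimulus = [0.0] * 50 + [1.0] * 50
response = convolve_clamped(stimulus, dog_kernel(sigma_center=1.0, sigma_surround=3.0))

print(f"uniform dark region:   {response[20]:.3f}")
print(f"uniform bright region: {response[80]:.3f}")
print(f"peak on the bright side at {response.index(max(response))}: {max(response):.3f}")
print(f"trough on the dark side at {response.index(min(response))}: {min(response):.3f}")
```

The paired peak and trough on either side of the edge are the contrast-enhancing overshoot that center-surround models are known for; uniform regions, however bright, produce almost no response.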