The U.S. Department of Energy (DOE) has published a request for information from computer hardware and software vendors to assist in the planning, design, and commissioning of next-generation supercomputing systems.

The DOE request calls for computing systems in the 2025–2030 timeframe that are five to 10 times faster than those currently available and/or able to run more complex applications in “data science, artificial intelligence, edge deployments at facilities, and science ecosystem problems, in addition to traditional modelling and simulation applications.”

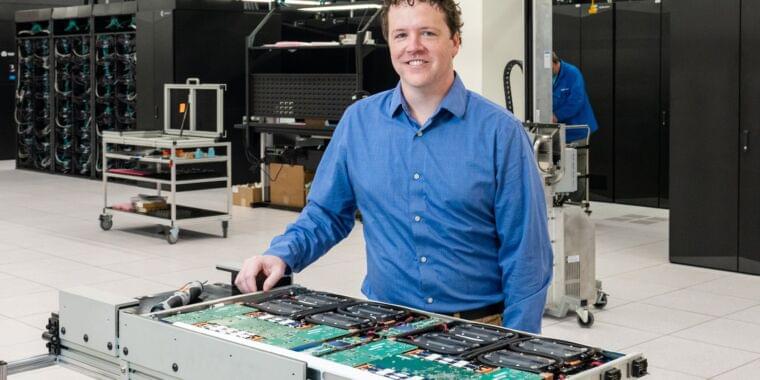

Tachyum, a company based in the U.S. and Slovakia, has now responded with its proposal for a 20 exaFLOP system. This would be based on Prodigy, its flagship product, which the company describes as the world’s first “universal” processor. According to Tachyum, the chip integrates 128 64-bit compute cores running at 5.7 GHz and combines the functionality of a CPU, GPU, and TPU in a single device with a homogeneous architecture. The company says this allows Prodigy to deliver up to 4x the performance of the highest-performing x86 processors for cloud workloads, 3x that of the highest-performing GPU for HPC, and 6x for AI applications.