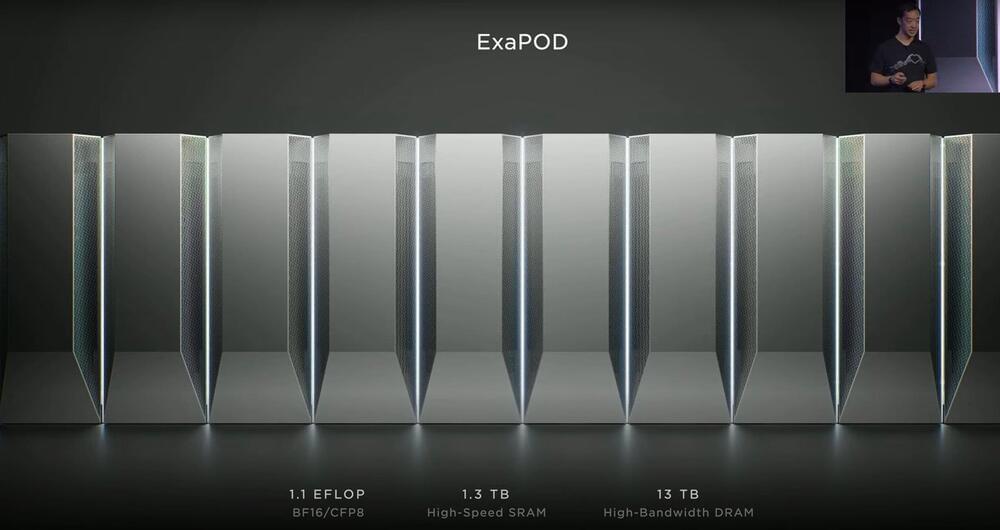

Today, we are in the midst of a race to build a quantum computer that can be used for practical applications. Such a device, built on the principles of quantum mechanics, could perform certain computing tasks far beyond the capabilities of today’s fastest supercomputers. Quantum computers and other quantum-enabled technologies could drive significant advances in areas such as cybersecurity and molecular simulation, with the potential to transform fields such as online security, drug discovery and materials fabrication.

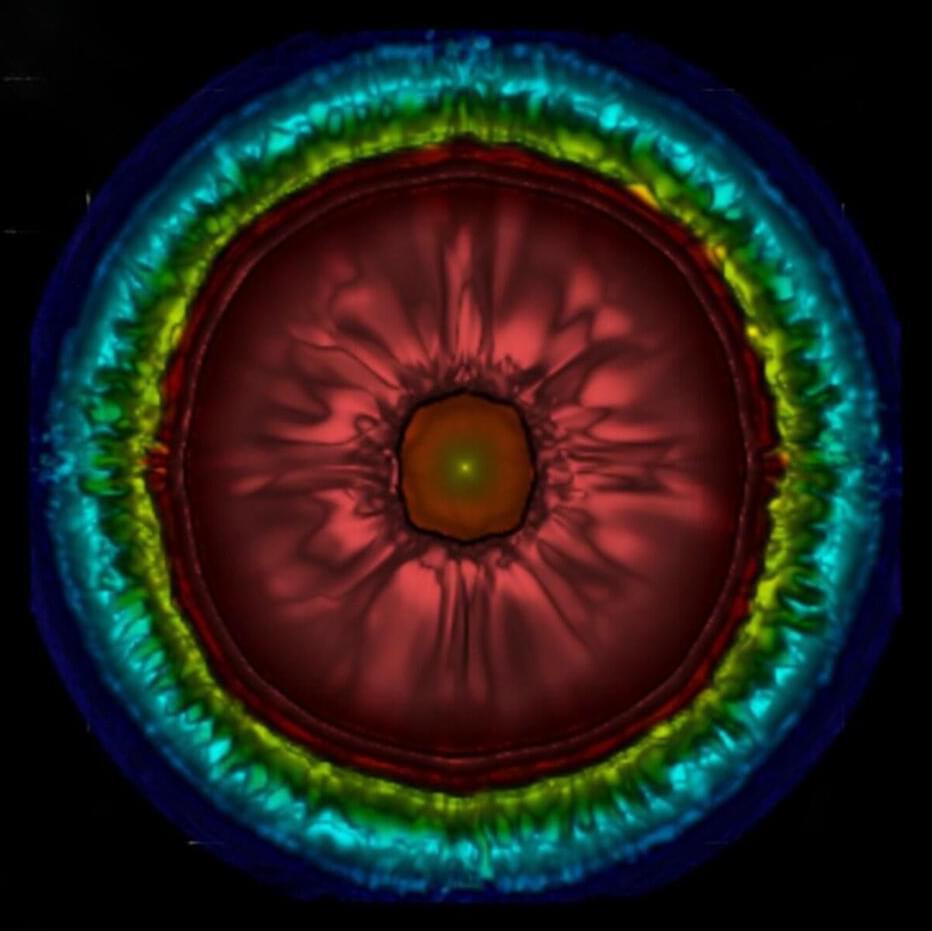

An offshoot of this technological race is the effort to build what scientists and engineers call a “quantum simulator”: a special type of quantum computer designed to model one specific problem that lies beyond the computing power of a conventional computer. In medical research, for example, a quantum simulator could in principle be built to reproduce a specific, complex molecular interaction for closer study, deepening scientific understanding and speeding up drug development.

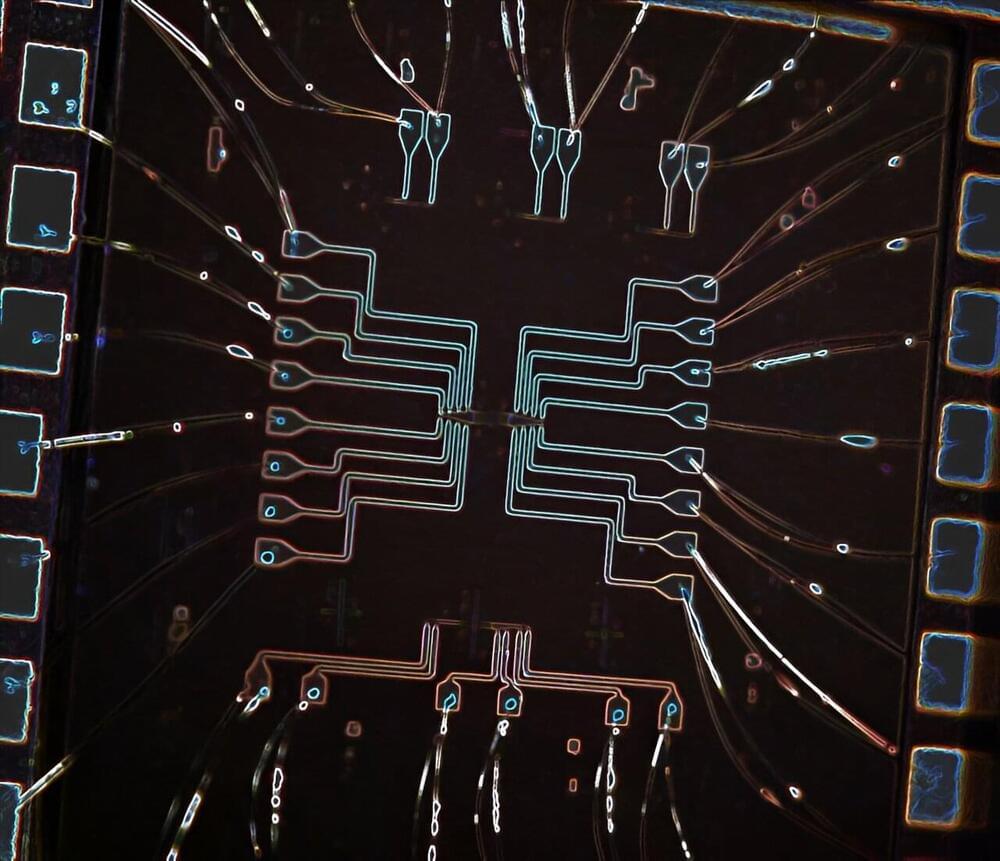

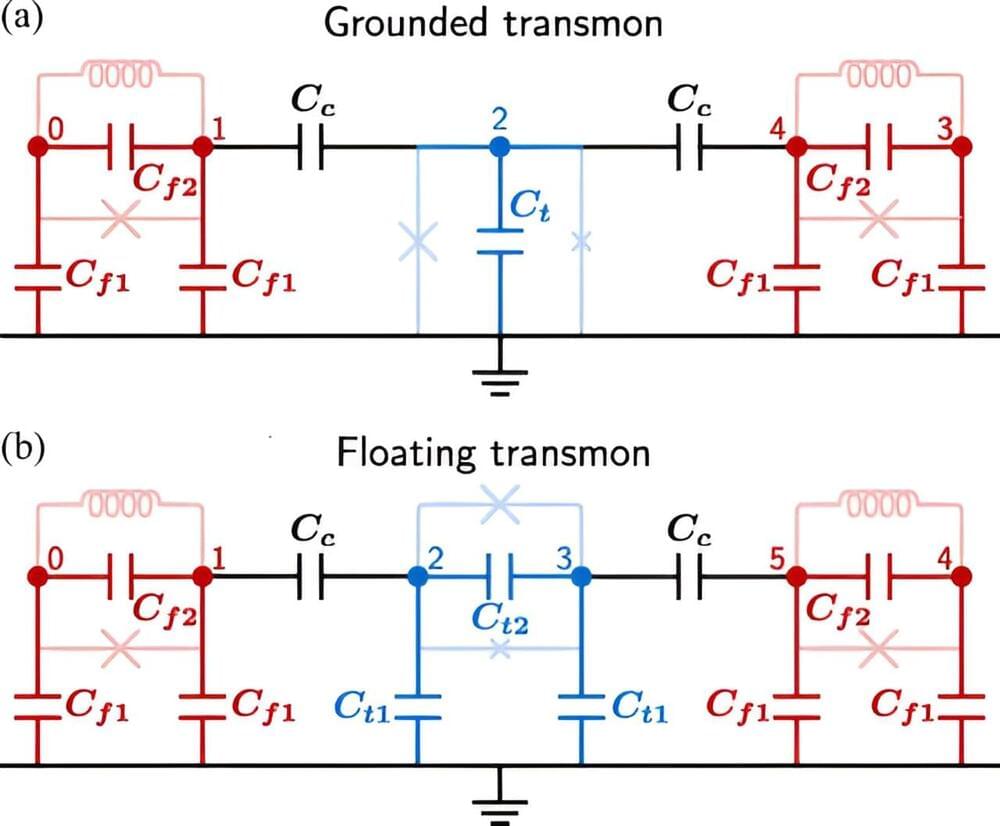

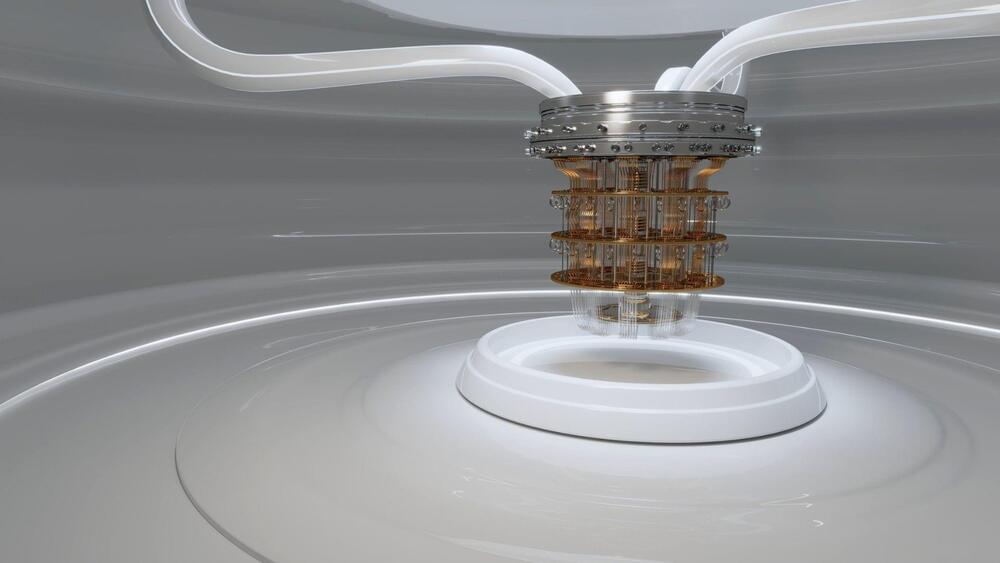

But as with building a practical, usable quantum computer, constructing a useful quantum simulator has proven to be a daunting challenge. The idea was first proposed by mathematician Yuri Manin in 1980. Since then, researchers have turned to trapped ions, cold atoms and superconducting qubits in attempts to build a quantum simulator suited to real-world applications, but to date, all of these approaches remain works in progress.