Our muscles are nature’s actuators. This sinewy tissue generates the forces that make our bodies move. In recent years, engineers have used real muscle tissue to actuate “biohybrid robots” made from both living tissue and synthetic parts. By pairing lab-grown muscles with synthetic skeletons, researchers are engineering a menagerie of muscle-powered crawlers, walkers, swimmers, and grippers.

But for the most part, these designs are limited in the amount of motion and power they can produce. Now, MIT engineers are aiming to give bio-bots a power lift with artificial tendons.

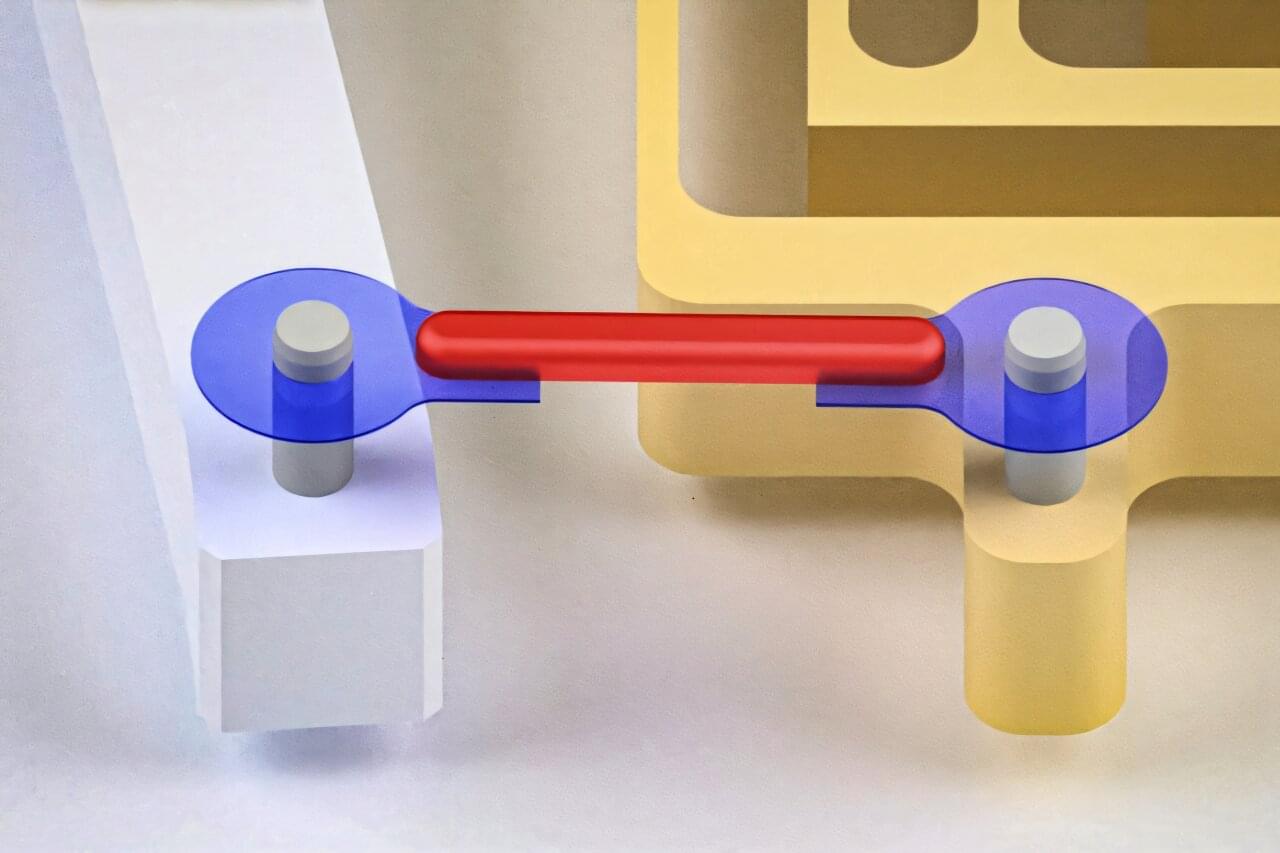

In a study published in the journal Advanced Science, the researchers developed artificial tendons made from a tough, flexible hydrogel. They attached the rubber-band-like tendons to both ends of a small piece of lab-grown muscle, forming a “muscle-tendon unit.” They then connected the free end of each artificial tendon to the fingers of a robotic gripper.