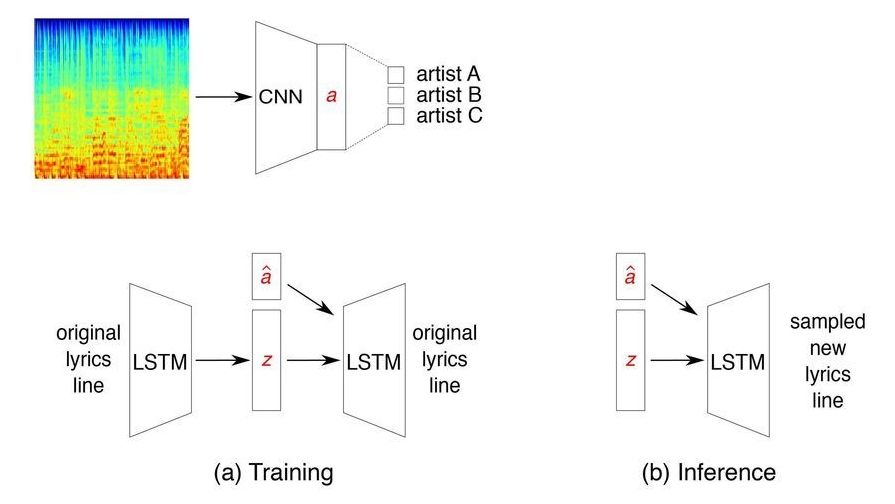

Researchers at the University of Waterloo, Canada, have recently developed a system that generates song lyrics in the style of particular music artists. Their approach, outlined in a paper pre-published on arXiv, combines a variational autoencoder (VAE) that uses artist embeddings with a CNN classifier trained to predict artists from mel spectrograms of their song clips.

“The motivation for this project came from my personal interest,” Olga Vechtomova, one of the researchers who carried out the study, told TechXplore. “Music is a passion of mine, and I was curious about whether a machine can generate lines that sound like the lyrics of my favourite music artists. While working on text generative models, my research group found that neural networks can generate some impressive lines of text. The natural next step for us was to explore whether a machine could learn the ‘essence’ of a specific music artist’s lyrical style, including choice of words, themes and sentence structure, to generate novel lyrics lines that sound like the artist in question.”

The system developed by Vechtomova and her colleagues is based on a neural network model called a variational autoencoder (VAE), which learns by reconstructing original lines of text. In their study, the researchers trained their model to generate any number of new, diverse and coherent lyric lines.
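
To give a rough sense of what "learning by reconstructing" means, the sketch below writes out the standard VAE training objective in plain Python: a reconstruction term that rewards the decoder for reproducing the original line, plus a KL term that keeps the latent code close to a standard normal distribution. It is an illustration of the general technique only, not the researchers' implementation. All function names, the `kl_weight` parameter and the toy numbers are assumptions made for this example, and the artist embeddings and the spectrogram classifier are left out.

```python
import math
import random


def reparameterize(mu, log_var, rng):
    """Sample a latent vector z = mu + sigma * eps, with eps drawn from N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """KL divergence from N(mu, sigma^2) to N(0, I), summed over latent dimensions."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv)
                      for m, lv in zip(mu, log_var))


def reconstruction_nll(token_probs, target_ids):
    """Negative log-likelihood of the original tokens under the decoder's output.

    token_probs[i] is the (hypothetical) decoder's probability distribution
    over the vocabulary at position i; target_ids[i] is the original token id.
    """
    return -sum(math.log(probs[t]) for probs, t in zip(token_probs, target_ids))


def vae_loss(token_probs, target_ids, mu, log_var, kl_weight=1.0):
    """Standard VAE objective: reconstruction loss plus a weighted KL term."""
    return (reconstruction_nll(token_probs, target_ids)
            + kl_weight * kl_to_standard_normal(mu, log_var))


if __name__ == "__main__":
    rng = random.Random(0)
    # Toy encoder output for one lyric line (assumed values, 2 latent dimensions).
    mu, log_var = [0.3, -0.1], [-0.5, 0.2]
    z = reparameterize(mu, log_var, rng)
    # Toy decoder output over a 2-word vocabulary for a 2-token line.
    token_probs = [[0.5, 0.5], [0.25, 0.75]]
    print("latent sample:", z)
    print("loss:", vae_loss(token_probs, [0, 1], mu, log_var))
```

In a real model the encoder and decoder would be neural networks, and this loss would be minimised over many lyric lines. Once trained, new lines can be produced by sampling a latent vector and passing it through the decoder, which is what allows a model of this kind to generate an arbitrary number of lines.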