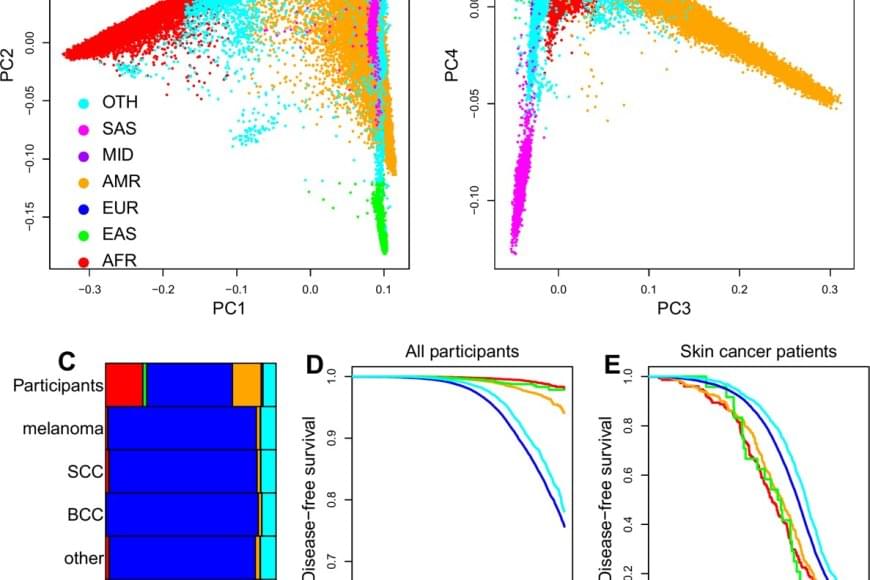

Researchers have developed a machine learning model that identifies individuals with skin cancer by combining genetic ancestry, lifestyle and social determinants of health. The model was more accurate than existing approaches, and it also helped the researchers better characterize disparities in skin cancer risk and outcomes.

Skin cancer is among the most common cancers in the United States, with more than 9,500 new cases diagnosed every day and approximately two deaths from skin cancer every hour. One important part of reducing the burden of skin cancer is risk prediction, which uses technology and patient information to help doctors decide which individuals should be prioritized for cancer screening.

Traditional risk prediction tools, such as risk calculators based on family history, skin type and sun exposure, have historically performed best in people of European ancestry because these individuals are overrepresented in the data used to develop the models. This leaves significant gaps in early detection for other populations, particularly people with darker skin, who are less likely to be of European ancestry. As a result, skin cancer in people of non-European ancestry is frequently diagnosed at later stages, when it is more difficult to treat, and this later detection contributes to worse overall outcomes.