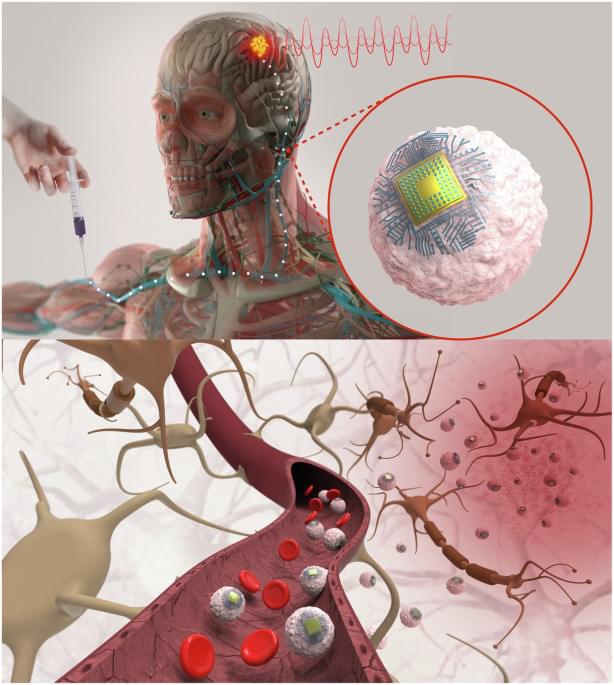

MIT researchers have taken a major step toward making this scenario a reality. They developed microscopic, wireless bioelectronics that could travel through the body’s circulatory system and autonomously self-implant in a target region of the brain, where they would provide focused treatment.

In a study on mice, the researchers show that after injection, these minuscule implants can identify and travel to a specific brain region without the need for human guidance. Once there, they can be wirelessly powered to provide electrical stimulation to the precise area. Such stimulation, known as neuromodulation, has shown promise as a way to treat brain tumors and diseases like Alzheimer’s and multiple sclerosis.

Moreover, because the electronic devices are integrated with living, biological cells before being injected, they are not attacked by the body’s immune system and can cross the blood-brain barrier while leaving it intact. This maintains the barrier’s crucial protection of the brain.

A nonsurgical brain implant enabled through a cell–electronics hybrid for focal neuromodulation.

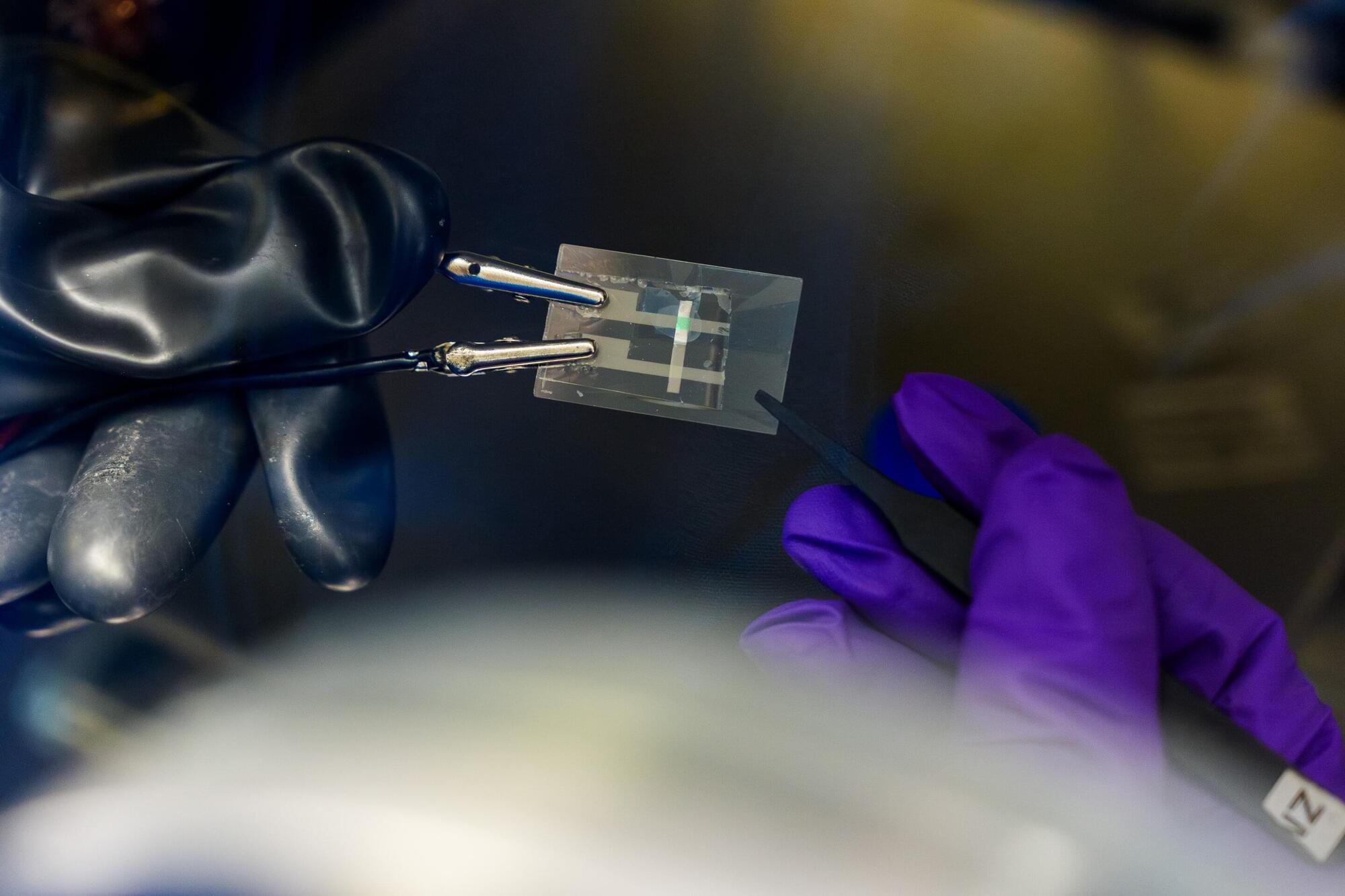

Photovoltaic devices attached to immune cells travel through the blood to inflamed brain regions.