A team of US researchers has unveiled a device that can conduct electricity along its fractionally charged edges without losing energy to heat. Described in Nature Physics, the work, led by Xiaodong Xu at the University of Washington, marks the first demonstration of a “dissipationless fractional Chern insulator,” a long-sought state of matter with promising implications for future quantum technologies.

The quantum Hall effect emerges when electrons are confined to a two-dimensional material, cooled to extremely low temperatures, and exposed to strong magnetic fields. Much like the classical Hall effect, it describes how a voltage develops across a material perpendicular to the direction of current flow. In this case, however, that voltage increases in discrete, or quantized, steps.

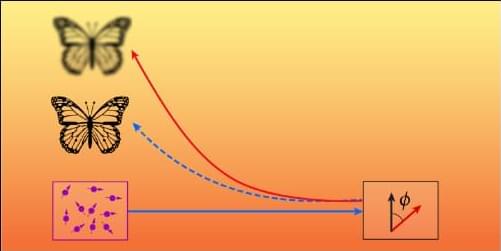

Under even more extreme conditions, a more exotic variant appears: the “fractional quantum Hall” (FQH) effect. Here, electrons no longer behave as independent particles but move collectively, giving rise to voltage steps that correspond to fractions of an electron’s charge. This collective behavior unlocks a host of unusual properties and has made such states particularly appealing for emerging quantum technologies.
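To put rough numbers on these steps, the short sketch below uses the textbook relation for the Hall resistance on a plateau, R_xy = h / (ν e²), where ν is the filling factor: an integer in the ordinary quantum Hall effect and a fraction in the FQH case. The function name `hall_resistance` and the filling factors listed are illustrative assumptions chosen for this example; they are not taken from the study.

```python
from fractions import Fraction

# Exact SI values (2019 redefinition)
PLANCK_H = 6.62607015e-34            # Planck constant, J*s
ELEMENTARY_CHARGE = 1.602176634e-19  # elementary charge, C


def hall_resistance(nu):
    """Hall resistance in ohms on a plateau with filling factor nu.

    Uses the textbook relation R_xy = h / (nu * e^2).
    """
    return PLANCK_H / (float(nu) * ELEMENTARY_CHARGE**2)


# Illustrative filling factors (assumed for this sketch, not from the study):
# integers for the ordinary effect, fractions for the FQH effect.
for nu in [Fraction(1), Fraction(2), Fraction(1, 3), Fraction(2, 3)]:
    print(f"nu = {nu}: R_xy = {hall_resistance(nu):.1f} ohm")
```

At ν = 1 this gives h/e², roughly 25.8 kΩ, while a plateau at ν = 1/3 sits at three times that value.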