By encoding quantum information using topology, researchers have found a way to resist the noise that usually disrupts entangled states, potentially transforming the reliability of quantum tech.

Scientists have long sought to unravel the mysteries of strange metals—materials that defy conventional rules of electricity and magnetism. Now, a team of physicists at Rice University has made a breakthrough in this area using a tool from quantum information science. Their study, published recently in Nature Communications, reveals that electrons in strange metals become more entangled at a crucial tipping point, shedding new light on the behavior of these enigmatic materials. The discovery could pave the way for advances in superconductors with the potential to transform energy use in the future.

Unlike conventional metals such as copper or gold, whose electrical properties are well understood, strange metals behave in far more complex ways that fall outside standard textbook descriptions. Led by Qimiao Si, the Harry C. and Olga K. Wiess Professor of Physics and Astronomy, the research team turned to quantum Fisher information (QFI), a concept from quantum metrology, to track how electron interactions evolve under extreme conditions. Their research shows that electron entanglement, a fundamental quantum phenomenon, peaks at a quantum critical point: the transition between two states of matter.

“Our findings reveal that strange metals exhibit a unique entanglement pattern, which offers a new lens to understand their exotic behavior,” Si said. “By leveraging quantum information theory, we are uncovering deep quantum correlations that were previously inaccessible.”
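To give a feel for how QFI can flag entanglement, here is a minimal toy sketch in Python. It is not the method used in the study: it assumes a small register of spin-1/2 particles in a pure state, where the QFI with respect to a generator O reduces to four times the variance of O. The generator chosen here (the collective spin J_z) and the helper names `collective_jz` and `qfi_pure` are illustrative assumptions. For this generator, any unentangled state of n spins has a QFI of at most n, so a larger value signals entanglement.

```python
import numpy as np

def collective_jz(n):
    """Diagonal of J_z = (1/2) * sum_i sigma_z^(i) in the computational basis."""
    diag = np.zeros(2 ** n)
    for basis_index in range(2 ** n):
        ones = bin(basis_index).count("1")        # number of spins in |1>
        diag[basis_index] = 0.5 * (n - 2 * ones)  # |0> contributes +1/2, |1> contributes -1/2
    return diag

def qfi_pure(state, diag_op):
    """Pure-state QFI for a diagonal generator O: F_Q = 4 * Var(O)."""
    probs = np.abs(state) ** 2
    mean = np.sum(probs * diag_op)
    mean_sq = np.sum(probs * diag_op ** 2)
    return 4.0 * (mean_sq - mean ** 2)

n = 4
jz = collective_jz(n)

# Unentangled product state |+>^n: equal amplitude on every basis state.
product = np.full(2 ** n, 1.0 / np.sqrt(2 ** n))

# Maximally entangled GHZ state: (|00...0> + |11...1>) / sqrt(2).
ghz = np.zeros(2 ** n)
ghz[0] = ghz[-1] = 1.0 / np.sqrt(2)

qfi_product = qfi_pure(product, jz)  # sits at the separable bound, n
qfi_ghz = qfi_pure(ghz, jz)          # reaches n**2, well above the bound

print(f"product state: F_Q = {qfi_product:.1f}")
print(f"GHZ state:     F_Q = {qfi_ghz:.1f}")
```

In this toy setting the product state gives a QFI equal to n, while the GHZ state gives n squared. A real material is a thermal, many-body system, so the analysis there is considerably more involved than this pure-state illustration.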

Our machines will be smart enough and eventually we will through intelligence enhancement.

For over a century, Einstein’s theories have been the bedrock of modern physics, shaping our understanding of the universe and reality itself. But what if everything we thought we knew was just the surface of a much deeper truth? In February 2025, at Google’s high-security Quantum AI Campus in Santa Barbara, a team of scientists gathered around their latest creation, a quantum processor named Willow. What happened next would leave even Neil deGrasse Tyson, one of the world’s most renowned astrophysicists, in tears. This is the story of how a cutting-edge quantum chip opened a door that many thought would remain forever closed, challenging our most fundamental beliefs about the nature of reality. This is a story you do not want to miss.

Jacob Barandes, physicist and philosopher of science at Harvard University, talks about realism vs. anti-realism, Humeanism, primitivism, quantum physics, Hilbert spaces, quantum decoherence, measurement problem, Wigner’s Friend thought experiment, philosophy of physics, the quantum-stochastic correspondence and indivisible stochastic processes.

Jacob: https://www.jacobbarandes.com/

Hosted & produced by: Shalaj Lawania.

Quantum computers have recently demonstrated an intriguing form of self-analysis: the ability to detect properties of their own quantum state, specifically their entanglement, without collapsing the wave function (Entangled in self-discovery: Quantum computers analyze their own entanglement | ScienceDaily; Quantum Computers Self-Analyze Entanglement With Novel Algorithm). In other words, a quantum system can perform a kind of introspection by measuring global entanglement nonlocally while preserving its coherent state. This development has been likened to a “journey of self-discovery” for quantum machines (Entangled in self-discovery: Quantum computers analyze their own entanglement | ScienceDaily), inviting comparisons to the self-monitoring and internal awareness associated with human consciousness.

How might a quantum system’s capacity for self-measurement relate to models of functional consciousness?

Key features of consciousness—like the integration of information from many parts, internal self-monitoring of states, and adaptive decision-making—find intriguing parallels in quantum phenomena like entanglement, superposition, and observer-dependent measurement.

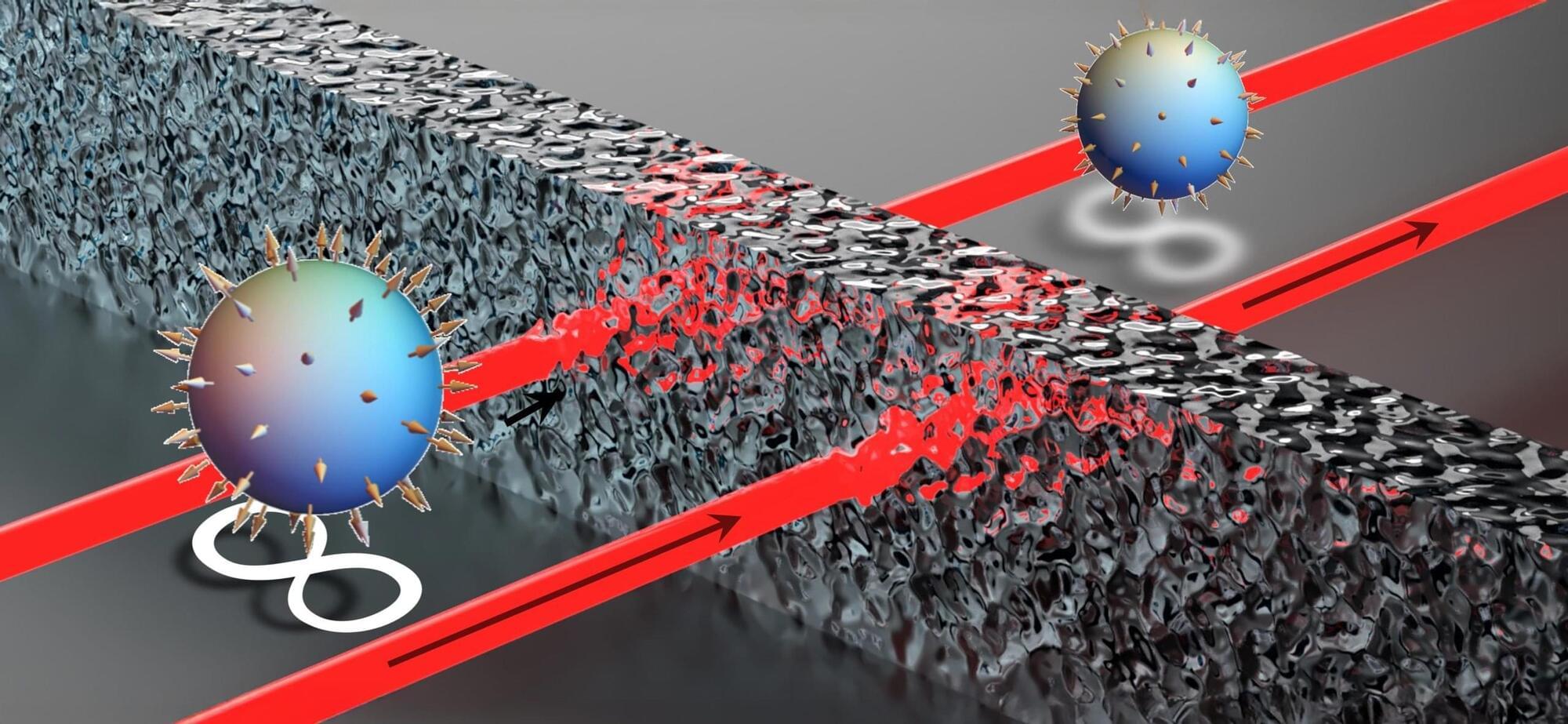

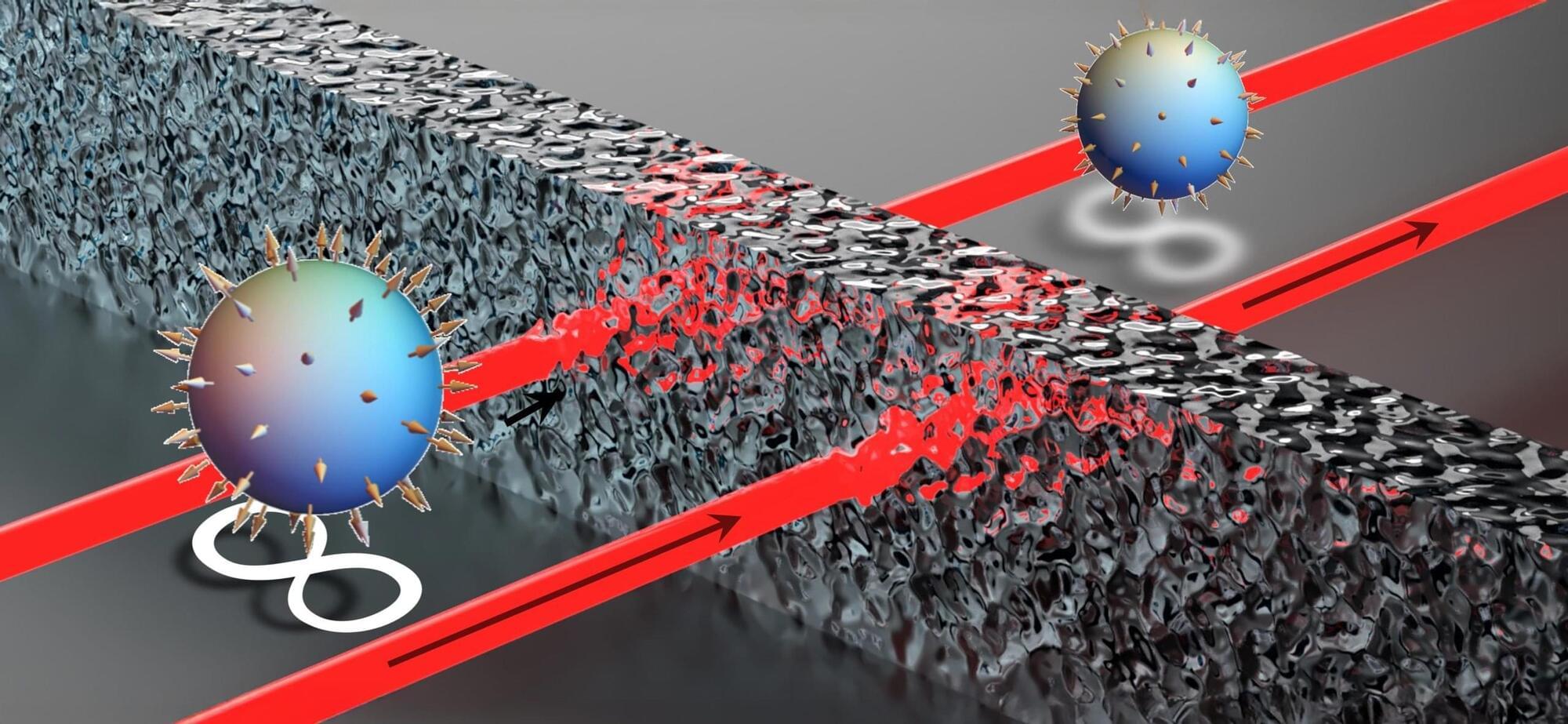

Superconductivity is a quantum phenomenon, observed in some materials, in which electricity is conducted with no resistance below a critical temperature. Over the past few years, physicists and materials scientists have been trying to identify materials exhibiting this property (i.e., superconductors), while also gathering new insights about its underlying physical processes.

Superconductors can be broadly divided into two categories: conventional and unconventional superconductors. In conventional superconductors, electron pairs (i.e., Cooper pairs) form due to phonon-mediated interactions, resulting in a superconducting gap that follows an isotropic s-wave symmetry. On the other hand, in unconventional superconductors, this gap can present nodes (i.e., points at which the superconducting gap vanishes), producing a d-wave or multi-gap symmetry.
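The difference between a fully gapped and a nodal superconductor can be illustrated with a short Python sketch. It compares a constant (isotropic s-wave) gap with the standard textbook d-wave form, which is proportional to cos(kx) - cos(ky), sampled around a circular Fermi surface. The gap amplitude, the Fermi radius `k_f`, and the function names are illustrative assumptions, and neither function is meant to describe the gap of any specific material mentioned here.

```python
import numpy as np

def s_wave_gap(kx, ky, delta0=1.0):
    """Isotropic s-wave gap: the same magnitude in every direction."""
    return delta0 * np.ones_like(kx)

def d_wave_gap(kx, ky, delta0=1.0):
    """Textbook d(x^2 - y^2) gap: changes sign and vanishes on the lines kx = +/- ky."""
    return 0.5 * delta0 * (np.cos(kx) - np.cos(ky))

# Sample a hypothetical circular Fermi surface every 45 degrees.
k_f = 1.0
angles = np.linspace(0.0, 2.0 * np.pi, 8, endpoint=False)
kx, ky = k_f * np.cos(angles), k_f * np.sin(angles)

s_gap = s_wave_gap(kx, ky)
d_gap = d_wave_gap(kx, ky)

# Nodes are the directions in which the gap vanishes (up to rounding error).
is_node = np.abs(d_gap) < 1e-12
node_angles_deg = np.degrees(angles[is_node])

print("s-wave gap:", np.round(s_gap, 3))
print("d-wave gap:", np.round(d_gap, 3))
print("d-wave nodes at (degrees):", np.round(node_angles_deg))
```

The s-wave gap is nonzero in every direction, while the d-wave gap vanishes along the diagonals (45, 135, 225 and 315 degrees), which is what is meant by nodes.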

Researchers at the University of Tokyo recently carried out a study aimed at better understanding the unconventional superconductivity previously observed in a rare-earth intermetallic compound called PrTi2Al20. In this material, superconductivity is known to arise from a multipolar-ordered state. Their findings, published in Nature Communications, suggest that there is a connection between quadrupolar interactions and superconductivity in this material.

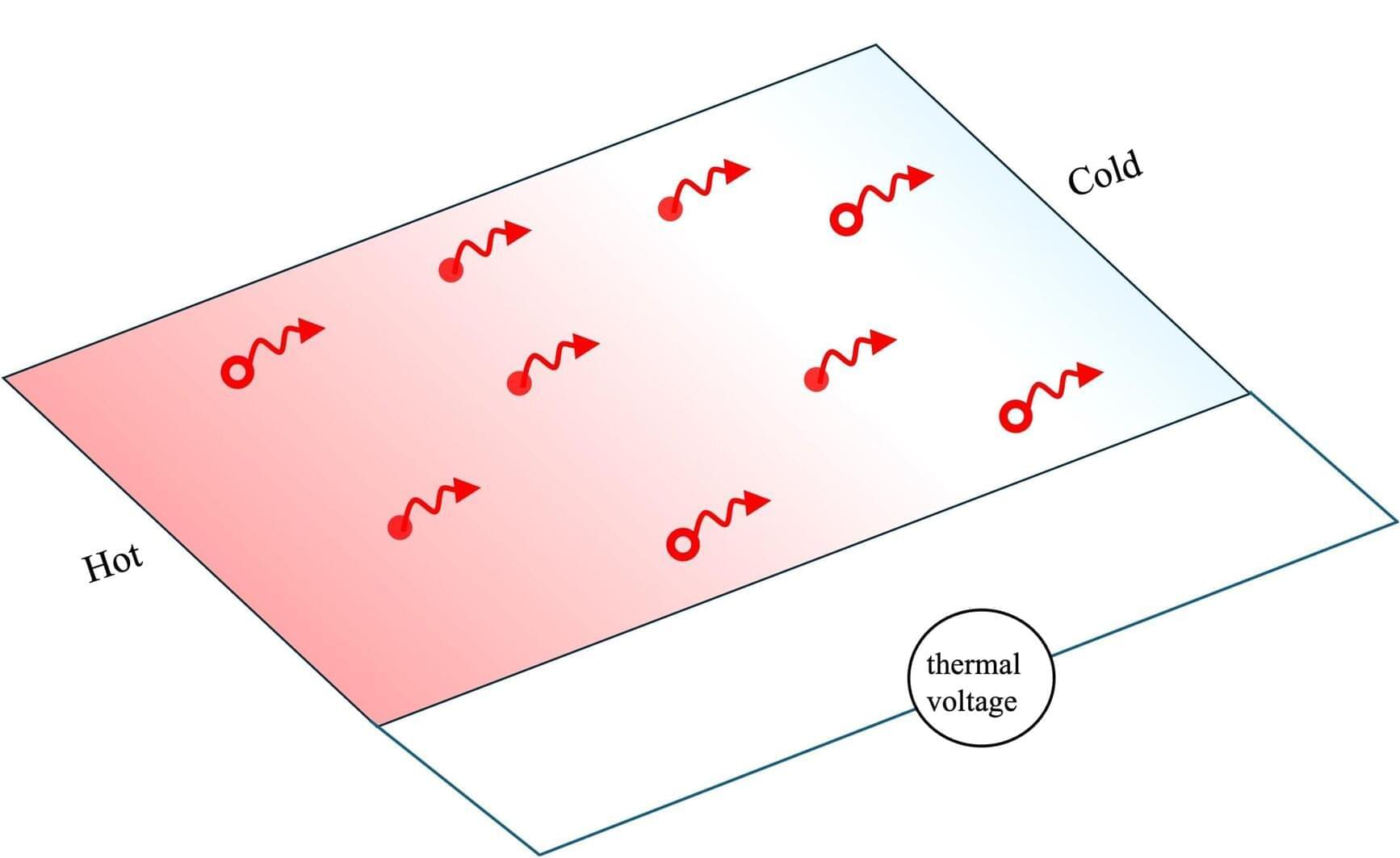

If one side of a conducting or semiconducting material is heated while the other remains cool, charge carriers move from the hot side to the cold side, generating an electrical voltage. The size of this voltage per unit of temperature difference is known as thermopower (or the Seebeck coefficient).

Past studies have shown that the thermopower produced in clean two-dimensional (2D) electron systems (i.e., materials with few impurities in which electrons can only move in 2D) is directly proportional to the entropy (i.e., the degree of randomness) per charge carrier.
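As a rough sketch, this proportionality is commonly written in the following form. The symbols are introduced here for illustration and do not come from the article: $\Delta V$ is the voltage that develops across a temperature difference $\Delta T$, $\mathcal{S}$ is the total entropy of the electron system, $N_e$ is the number of electrons, and $|e|$ is the magnitude of the electron charge. Sign conventions vary between references.

$$
S_{xx} = -\frac{\Delta V}{\Delta T}, \qquad S_{xx} \simeq -\frac{\mathcal{S}}{|e|\,N_e}
$$

Read this way, measuring the thermopower of a clean 2D system amounts to measuring its entropy per electron, divided by the electron charge.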

The link between thermopower and entropy could be leveraged to probe exotic quantum phases of matter. One of these phases is the fractional quantum Hall (FQH) effect, which is known to arise when electrons in these materials are subject to a strong perpendicular magnetic field at very low temperatures.

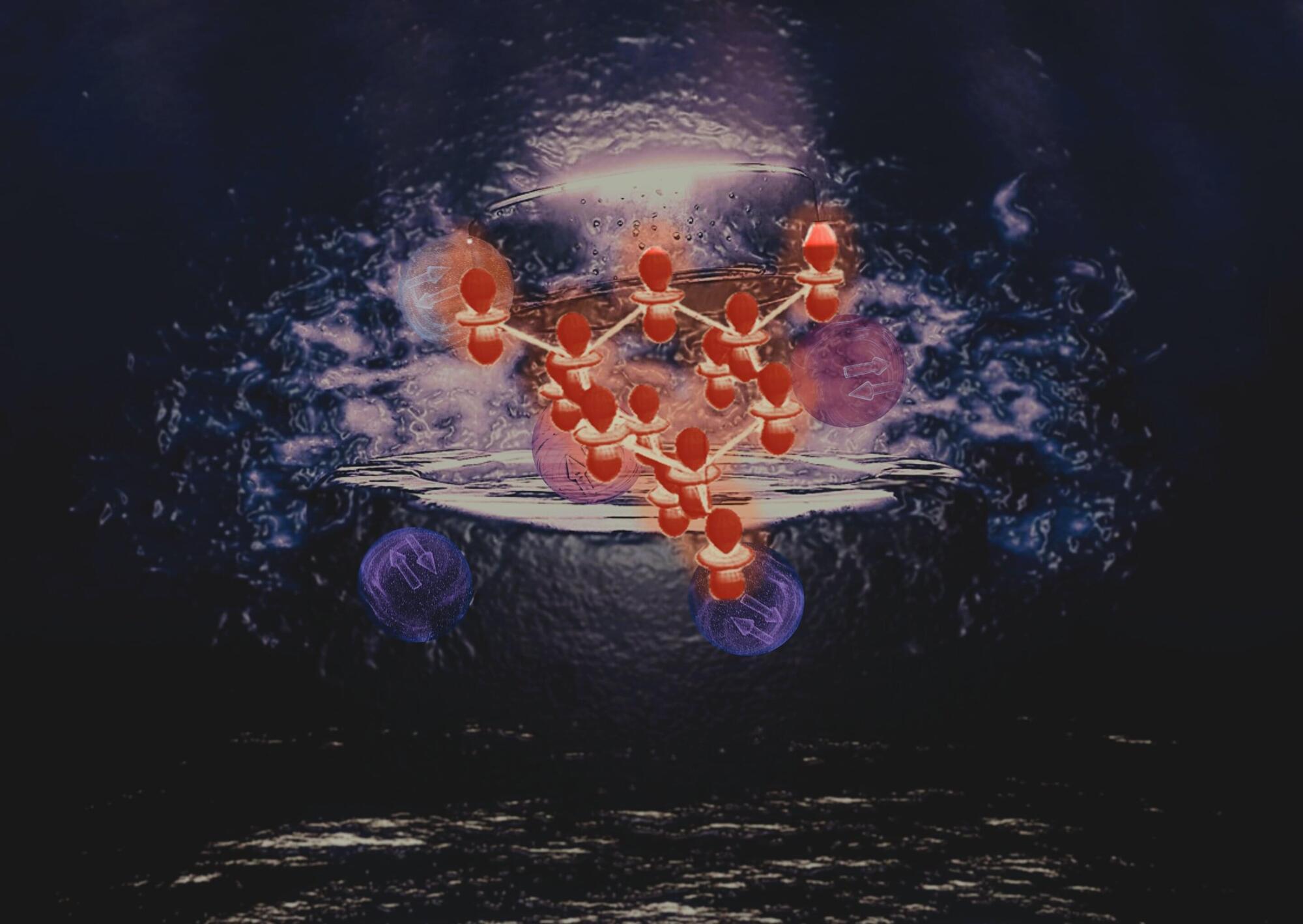

This Quantum Computer Simulates the Hidden Forces That Shape Our Universe

The study of elementary particles and forces is of central importance to our understanding of the universe. Now a team of physicists from the University of Innsbruck and the Institute for Quantum Computing (IQC) at the University of Waterloo has shown how an unconventional type of quantum computer opens a new door to the world of elementary particles.

Credit: Kindea Labs