Physicists at the University of Bonn have experimentally demonstrated that an important theorem of statistical physics applies to Bose-Einstein condensates. The result makes it possible to measure certain properties of these quantum “superparticles” and to deduce system characteristics that would otherwise be difficult to observe. The study has been published in the journal Physical Review Letters.

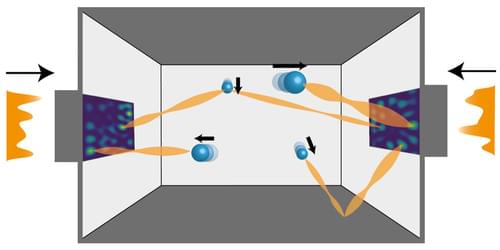

Suppose there is a container in front of you filled with an unknown liquid. Your goal is to find out how much the particles in it (atoms or molecules) move back and forth randomly due to their thermal energy. However, you do not have a microscope with which you could visualize these position fluctuations, known as “Brownian motion.”

It turns out you do not need one at all: you can simply tie an object to a string and pull it through the liquid. The more force you have to apply, the more viscous the liquid is. And the more viscous it is, the less the particles in the liquid change their position on average. The viscosity at a given temperature can therefore be used to predict the extent of the fluctuations.
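
For readers who want to see this reasoning in symbols, the classical textbook version is the Stokes-Einstein relation. The sketch below is an illustration only and is not taken from the Bonn study. It assumes a small spherical object of radius $r$ pulled slowly at speed $v$ through a liquid of viscosity $\eta$ at temperature $T$; the symbols $F$, $\gamma$, $D$ and $t$ are introduced here and do not appear in the article.

$$
\begin{aligned}
F &= \gamma\, v, \qquad \gamma = 6\pi \eta r && \text{(drag force measured by pulling)}\\
D &= \frac{k_B T}{\gamma} = \frac{k_B T}{6\pi \eta r} && \text{(diffusion coefficient from the friction)}\\
\langle \Delta x^2 \rangle &= 2 D t && \text{(mean squared displacement along one axis after time } t\text{)}
\end{aligned}
$$

Here $k_B$ is the Boltzmann constant. A larger viscosity $\eta$ means a larger friction coefficient $\gamma$, a smaller $D$, and therefore smaller random displacements, which is the link between the pulling force and the fluctuations described above.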