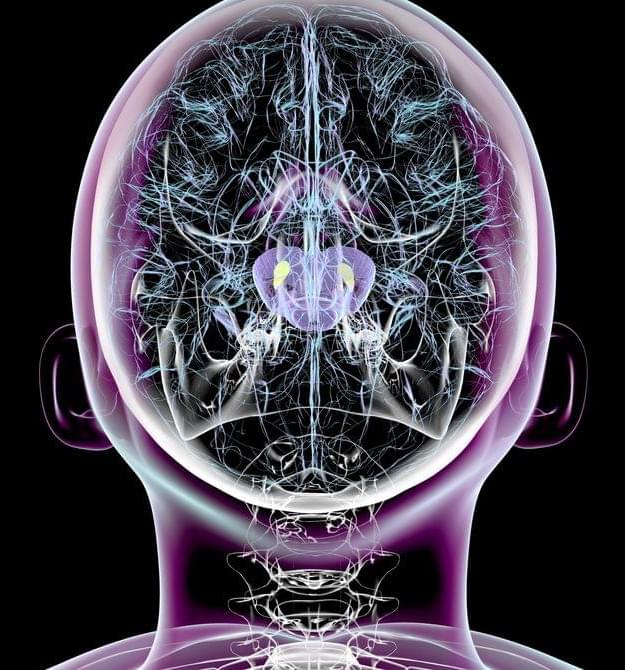

Shading brings 3D forms to life, beautifully carving out the shape of the objects around us. Despite the importance of shading for perception, scientists have long been puzzled by how the brain actually uses it. Researchers from Justus-Liebig-University Giessen and Yale University have recently proposed a surprising answer.

It was previously assumed that the brain interprets shading like a physics machine, somehow "reverse-engineering" the combination of shape and lighting that would produce the shading we see. Not only is this extremely challenging even for advanced computers, but the visual brain is not designed to solve that sort of problem. So the researchers instead started by considering what is known about how the brain processes signals when they first arrive from the eye.

“In some of the first steps of visual processing, the brain passes the image through a series of ‘edge-detectors,’ essentially tracing it like an etch-a-sketch,” explains Professor Roland W. Fleming of Giessen. “We wondered what shading patterns would look like to a brain that’s searching for lines.” This insight led to an unexpected but clever shortcut to the problem of inferring shape from shading.