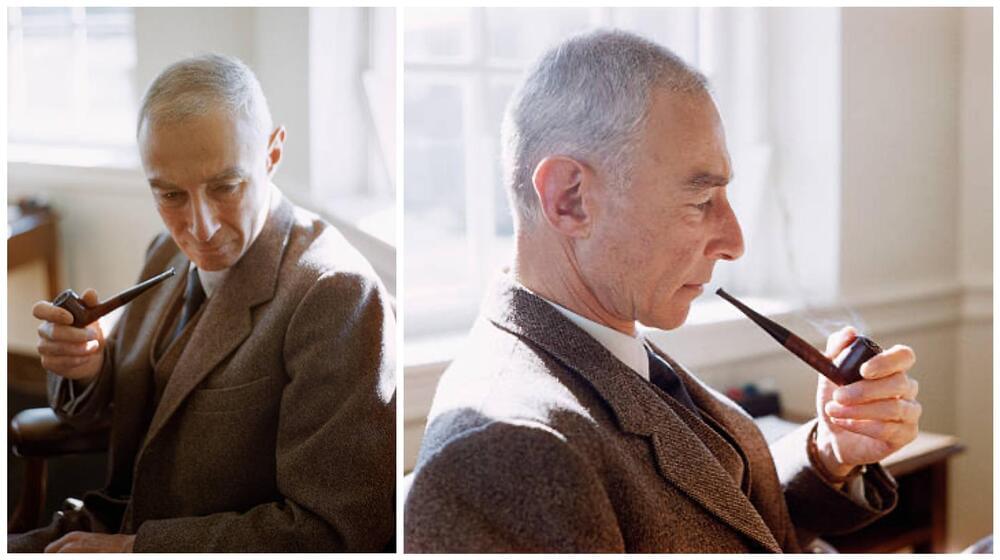

J. Robert Oppenheimer, the so-called “Father of the Atomic Bomb”, was once described as “a genius of the nuclear age and also the walking, talking conscience of science and civilization”. Born at the outset of the 20th century, he developed early interests in chemistry and physics that would in the 1920s bring him to the University of Göttingen, where he worked alongside his doctoral supervisor Max Born (1882–1970), close lifelong friend Paul Dirac (1902–84) and eventual adversary Werner Heisenberg (1901–76). He got there despite having been singled out from his youth as both gifted and odd, at times even unstable. As a child he collected rocks, wrote poetry and studied French literature. Never weighing more than 130 pounds, he was throughout his life a “tall and thin chainsmoker” who once stated that he “needed physics more than friends”. At Cambridge University he was nearly charged with attempted murder after leaving a poisoned apple on the desk of one of his tutors. He was notoriously abrupt and impatient, and at Göttingen his classmates once gave their professor Born an ultimatum: “either the ‘child prodigy’ is reined in, or his fellow students will boycott the class”. Following the successful defense of his doctoral dissertation, the professor administering the examination, Nobel Laureate James Franck (1882–1964), reportedly left the room stating:

“I’m glad that’s over. He was at the point of questioning me.”

From his time as a student at Harvard, through postgraduate research in Cambridge and Göttingen, a professorship at UC Berkeley, scientific leadership of the Manhattan Project and, after the war, the directorship of the Institute for Advanced Study, Oppenheimer could hold his own with the greatest minds of his age wherever he went. Max Born, Paul Dirac, John von Neumann, Niels Bohr, Albert Einstein, Kurt Gödel and Richard Feynman all admired “Oppie”. When he died in 1967, his published articles in physics totaled 73, on topics ranging from quantum field theory, particle physics and the theory of cosmic radiation to nuclear physics and cosmology. His funeral was attended by over 600 people, including numerous associates from academia and research as well as government officials, heads of the military, even the director of the New York City Ballet.