Since Nick Bostrom wrote Superintelligence, AI has surged from theoretical speculation to powerful, world-shaping reality. Progress is undeniable, yet the AI safety community remains caught in an ongoing debate between mathematical rigor and Swiss-cheese security. P(doom) debates rage on, but equally concerning is the risk of locking in negative-value futures for a very long time.

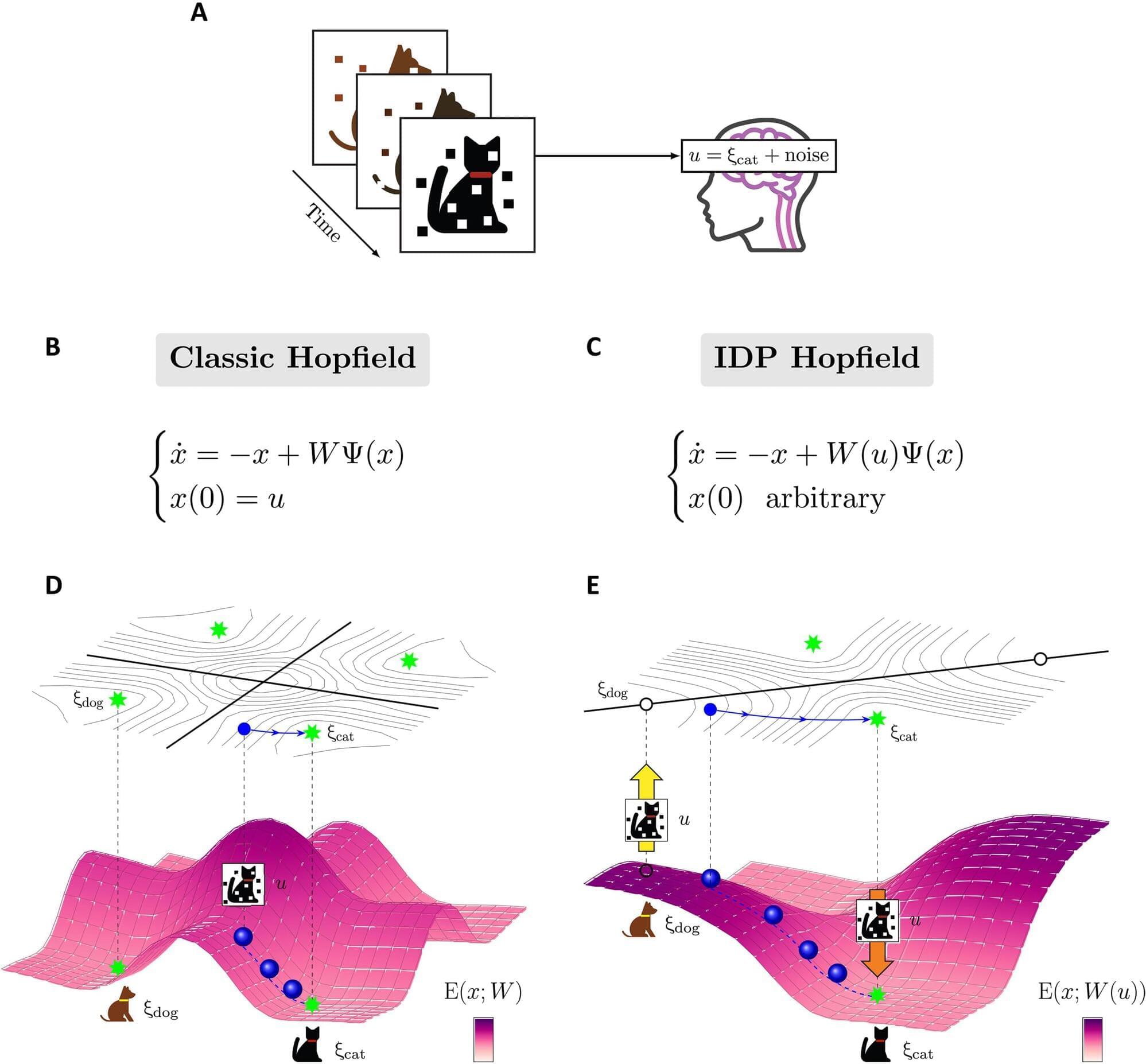

Zooming in: motivation selection, especially indirect normativity, raises the question: is there a structured landscape of possible value configurations, or just a chaotic search for alignment?

From Superintelligence to Deep Utopia: the focus shifts from merely avoiding catastrophe to ensuring resilience, meaning, and flourishing in a ‘solved’ world. In a post-instrumental, plastic utopia where humans are ‘deeply redundant’, can we find enduring meaning and purpose?

This is our moment to shape the future. What values will we encode? What futures will we entrench?

0:00 Highlights.

3:07 Intro.

4:15 Interview.

P.S. The background music at the start of the video is ‘Eta Carinae’, which I created on a Korg Minilogue XD: https://scifuture.bandcamp.com/track/.… The music at the end is ‘Hedonium 1’, which is guitar saturated with Strymon reverbs, delays and modulation: / hedonium-1

Many thanks for tuning in! Please support SciFuture by subscribing and sharing! Buy me a coffee? https://buymeacoffee.com/tech101z

Have any ideas about people to interview? Want to be notified about future events? Any comments about the STF series? Please fill out this form: https://docs.google.com/forms/d/1mr9P…

Kind regards,
Adam Ford

- Science, Technology & the Future — #SciFuture — http://scifuture.org