Unraveling Dark Energy and Cosmic Expansion With an 11-Ton Time Machine.

Fraunhofer researchers developed an easy-to-operate, unmanned watercraft that autonomously surveys bodies of water both above and below the surface and produces corresponding 3D maps.

The unmanned watercraft uses its GPS, acceleration and angular rate sensors, and a Doppler velocity log (DVL) sensor to incrementally feel its way along the bottom of the body of water. Combined with mapping software, laser scanners and cameras enable the device to reconstruct high-precision 3D models of the surroundings above water. A multi-beam sonar integrated into the sensor system is used for underwater mapping and for creating a complete 3D model of the bed.
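To give a rough feel for how velocity and angular rate readings can be chained into a position estimate, here is a minimal dead-reckoning sketch. It is an illustration only, not the Fraunhofer software: the function name `dead_reckon`, the sample format, and the flat 2D frame (x east, y north, heading counterclockwise from the x axis) are all assumptions made for this example. A real system would also fuse GPS fixes to correct the drift that builds up over time.

```python
import math


def dead_reckon(start_xy, start_heading_rad, samples):
    """Integrate body-frame velocity and yaw rate into a 2D track.

    Hypothetical sample format (an assumption for this sketch):
    (dt_s, forward_mps, left_mps, yaw_rate_radps), where the velocities
    stand in for DVL readings and the yaw rate for an angular rate sensor.
    """
    x, y = start_xy
    heading = start_heading_rad
    track = [(x, y)]
    for dt, v_fwd, v_left, yaw_rate in samples:
        # Update the heading from the angular rate reading.
        heading += yaw_rate * dt
        # Rotate the body-frame velocity into the fixed world frame.
        vx = v_fwd * math.cos(heading) - v_left * math.sin(heading)
        vy = v_fwd * math.sin(heading) + v_left * math.cos(heading)
        # Advance the position estimate by one time step.
        x += vx * dt
        y += vy * dt
        track.append((x, y))
    return track


if __name__ == "__main__":
    # Three seconds straight ahead at 1 m/s, with no turning.
    straight = [(1.0, 1.0, 0.0, 0.0)] * 3
    print(dead_reckon((0.0, 0.0), 0.0, straight))
```

Because each step builds on the previous estimate, small sensor errors accumulate, which is one reason such a scheme is usually paired with an absolute reference like GPS.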

“Our navigation system is semi-automatic in that the user only needs to specify the area to be mapped. The surveying process itself is fully automatic, and data evaluation is carried out with just a few clicks of the mouse. We developed the software modules required for the mapping and autonomous piloting,” explains Dr. Janko Petereit, a scientist at Fraunhofer IOSB.

During the journey, the watercraft autonomously avoids obstacles detected by the laser scanner and sonar, and it generates a 3D model in real time for navigation purposes, including dynamic objects such as moving vessels.

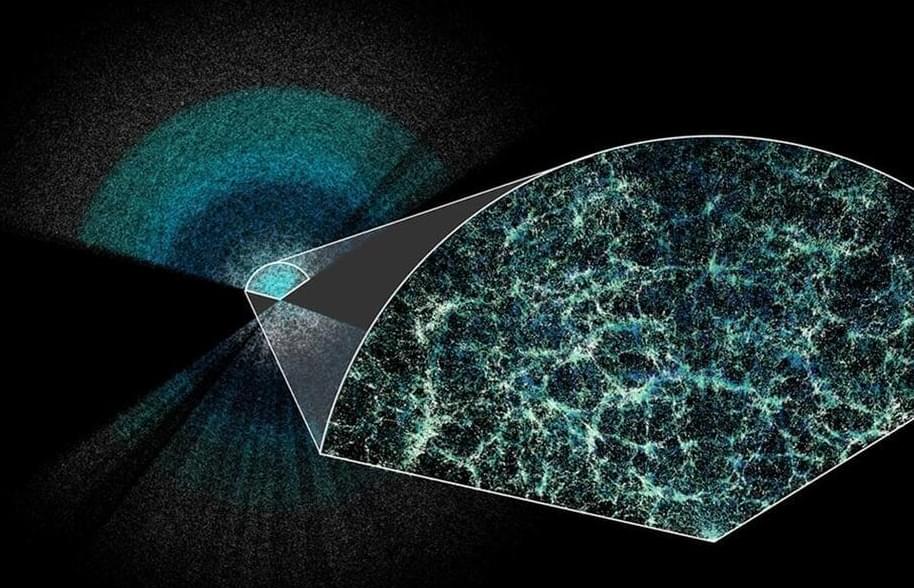

A non-radical proximity labelling platform — BAP-seq — is presented that uses subcellular-localized BS2 esterase to convert unreactive enol-based probes into highly reactive acid chlorides in situ to label nearby RNAs. When paired with click-handle-mediated enrichment and sequencing, this chemistry enables high-resolution spatial mapping of RNAs across subcellular compartments.

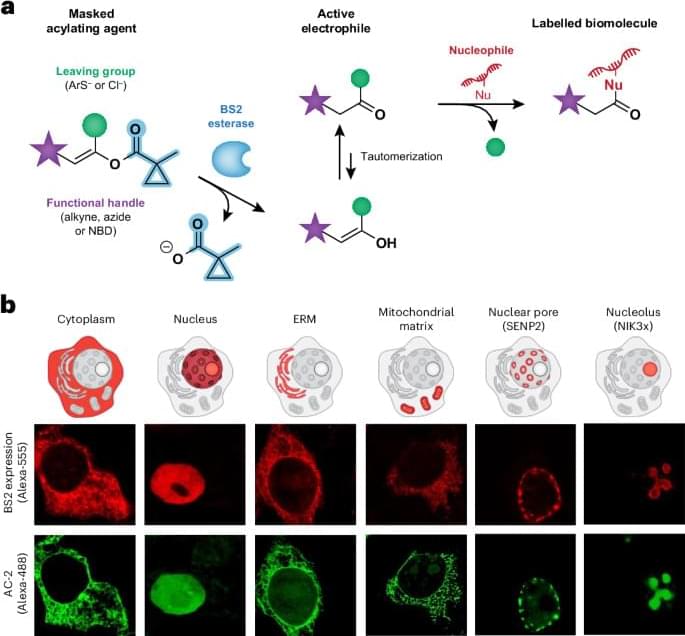

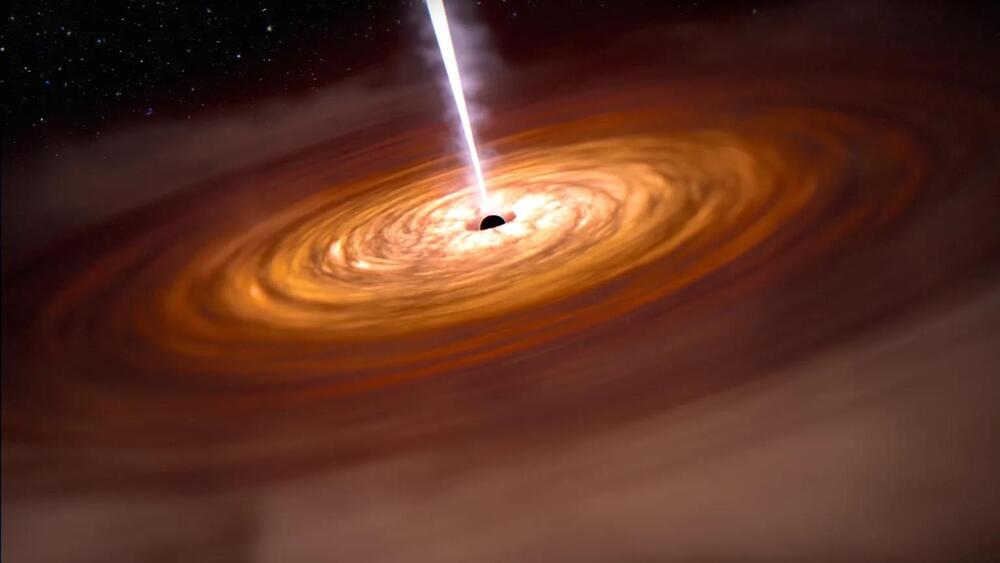

Ever wonder where all the active supermassive black holes are in the universe? Now, with the largest quasar catalog yet, you can see the locations of 1.3 million quasars in 3D.

The catalog, Quaia, can be accessed here.

“This quasar catalog is a great example of how productive astronomical projects are,” says David Hogg, study co-author and computational astrophysicist at the Flatiron Institute, in a press release. “Gaia was designed to measure stars in our galaxy, but it also found millions of quasars at the same time, which give us a map of the entire universe.” By mapping where quasars are across the universe, astrophysicists can learn more about how the universe evolved, how supermassive black holes grow, and even how dark matter clumps together around galaxies. Researchers published the study this week in The Astrophysical Journal.

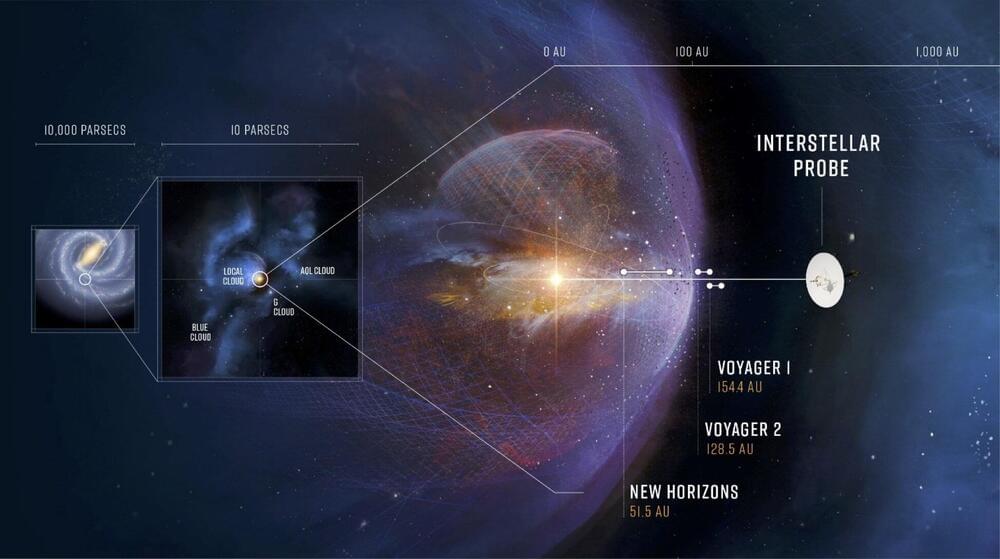

The heliosphere, made of solar wind, solar transients, and the interplanetary magnetic field, acts as our solar system’s personal shield, protecting the planets from galactic cosmic rays. These extremely energetic particles are accelerated outward by events like supernovas and would cause a huge amount of damage if the heliosphere did not absorb most of them.

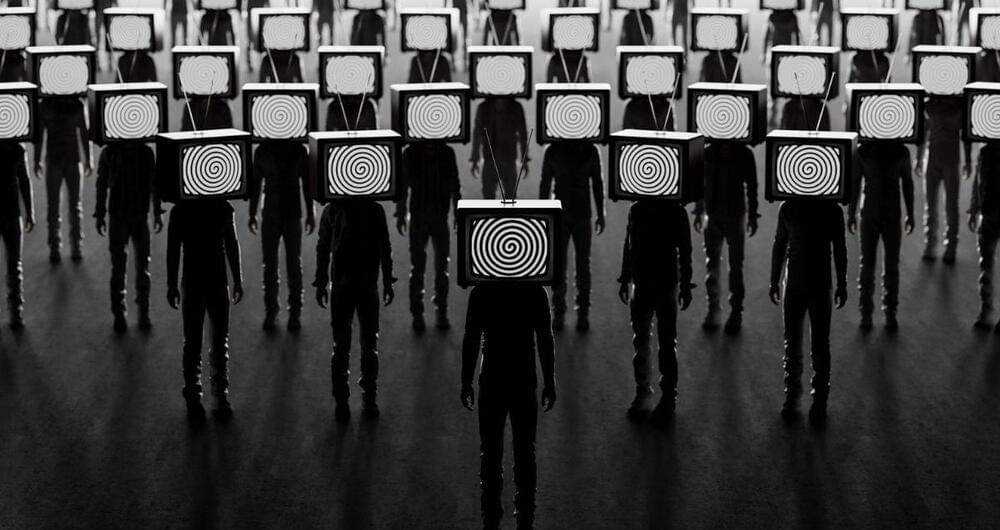

This article includes computer-generated images that map internet communities by topic, without specifically naming each one. The research was funded by the US government, which is anticipating massive interference in the 2024 elections by “bad actors” using relatively simple AI chat-bots.

In an era of super-accelerated technological advancement, the specter of malevolent artificial intelligence (AI) looms large. While AI holds promise for transforming industries and enhancing human life, the potential for abuse poses significant societal risks. Threats include avalanches of misinformation, deepfake videos, voice mimicry, sophisticated phishing scams, inflammatory ethnic and religious rhetoric, and autonomous weapons that make life-and-death decisions without human intervention.

During this election year in the United States, some are worried that bad actor AI will sway the outcomes of hotly contested races. We spoke with Neil Johnson, a professor of physics at George Washington University, about his research that maps out where AI threats originate and how to help keep ourselves safe.

A new gravimeter is compact and stable and can detect the daily solar and lunar gravitational oscillations that are responsible for the tides.

Gravity measurements can help with searches for oil and gas or with predictions of impending volcanic activity. Unfortunately, today’s gravimeters are bulky, lack stability, or require extreme cooling. Now researchers have demonstrated a design for a small, highly sensitive gravimeter that operates stably at room temperature [1]. The device uses a small, levitated magnet whose equilibrium height is a sensitive probe of the local gravitational field. The researchers expect the design to be useful in field studies, such as the mapping of the distribution of underground materials.

Several obstacles have impeded the development of compact gravimeters, says Pu Huang of Nanjing University in China. Room-temperature devices generally use small mechanical oscillators, which offer excellent accuracy. However, they are made from materials that exhibit aging effects, so these gravimeters can lose accuracy over time. Much higher stability can be achieved with superconducting devices, but these require cryogenic conditions and so consume lots of power and are hard to use outdoors.

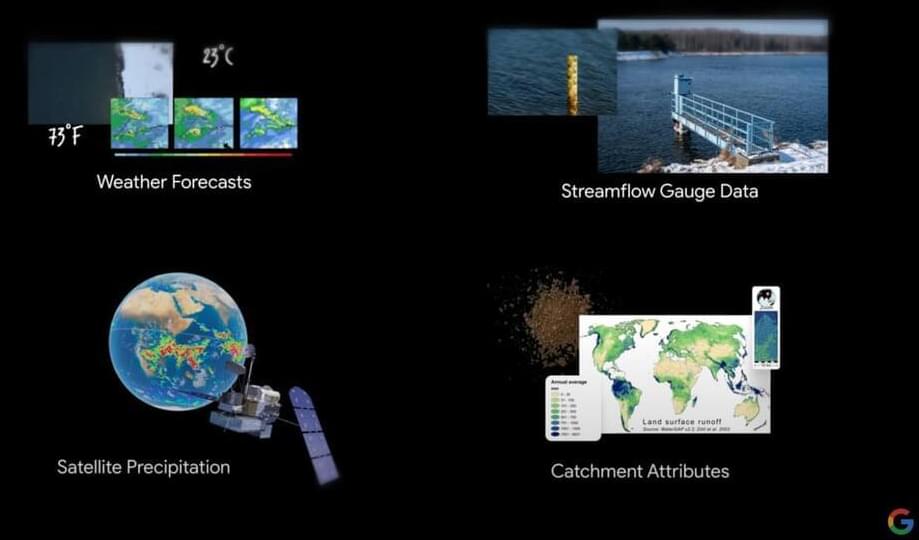

Google just announced that it has been predicting riverine floods, up to seven days in advance in some cases. This isn’t just tech company hyperbole, as the findings were actually published in Nature. Floods are the most common natural disaster throughout the world, so any early warning system is good news.

Floods have been notoriously tricky to predict, as most rivers don’t have streamflow gauges. Google got around this problem by training its models with all kinds of relevant data, including historical events, river level readings, elevation and terrain readings and more. After that, the company generated localized maps and ran “hundreds of thousands” of simulations in each location. This combination of techniques allowed the models to accurately predict upcoming floods.

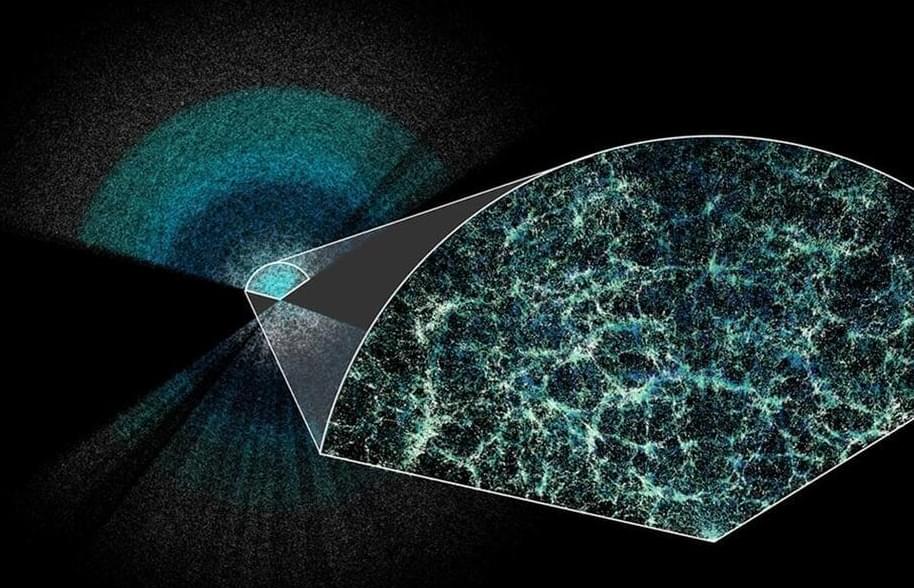

Astronomers have created the largest cosmic 3D map of quasars yet. Quasars are the bright, active centres of galaxies powered by supermassive black holes. The map shows the location of about 1.3 million quasars in space and time, with the furthest shining bright when the Universe was only 1.5 billion years old.

The new map has been made with data from ESA’s Gaia space telescope. While Gaia’s main objective is to map the stars in our own galaxy, in the process of scanning the sky it also spots objects outside the Milky Way, such as quasars and other galaxies.

The graphic representation of the map (bottom right on the infographic) shows us the location of quasars from our vantage point, the centre of the sphere. The regions empty of quasars are where the disc of our galaxy blocks our view.

Elon Musk’s SpaceX is teaming up with Larry Ellison’s Oracle to help farms plan and predict their agricultural output using an AI tool.

Larry Ellison said on Oracle’s earnings call on Monday that the company is collaborating with Musk and SpaceX to create the AI-powered mapping application for governments. The tool creates a map of a country’s farms and shows what each of them is growing.

The Oracle executive chairman said the tool could help farms assess the steps needed to increase their output, and whether fields had enough water and nitrogen.