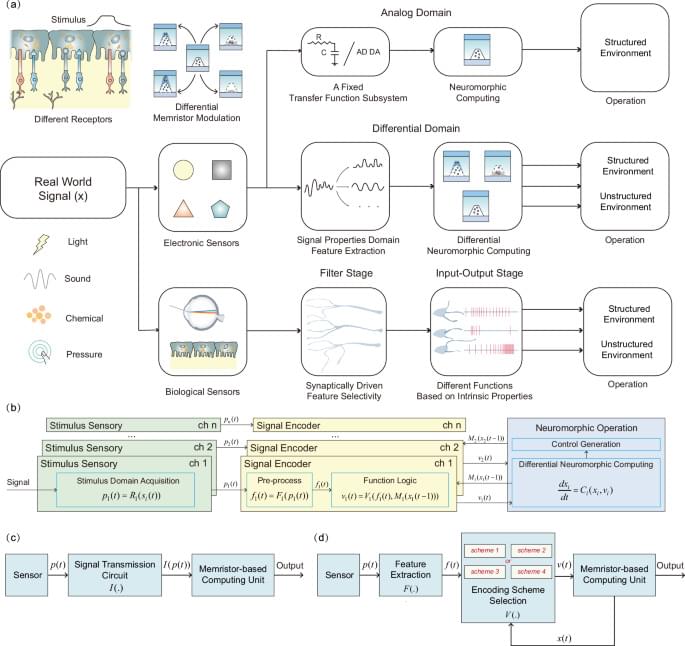

Differential neuromorphic computing, as a memristor-assisted perception method, holds the potential to enhance subsequent decision-making and control processes. Although conventional PID control and the proposed differential neuromorphic computing share the fundamental principle of adjusting outputs in response to feedback, they diverge significantly in how they manipulate data (Supplementary Discussion 12 and Fig. S26): our method leverages the nonlinear characteristics of the memristor and a dynamic selection scheme to perform more complex data manipulation than the linear, coefficient-based error correction of PID. Additionally, the intrinsic memory function of the memristors enables our system to adapt in real time to changing environments, a significant advantage over the static parameter configuration of PID systems. To perform similar adaptive control functions in the tactile experiments, a von Neumann architecture follows a multi-step process involving several data movements: (1) Input data about the piezoresistive film state is transferred to the system memory via an I/O interface. (2) This sensory data is then moved from the memory to the cache. (3) Subsequently, it is forwarded to the Arithmetic Logic Unit (ALU), where it waits for processing. (4) Historical tactile information is also transferred from the memory to the cache unless it is already present there. (5) This historical data is forwarded to the ALU. (6) The ALU processes the current sensory data together with the historical data and returns the updated historical data to the cache. In contrast, our memristor-based approach reduces this process to three primary steps: (1) An ADC reads data from the piezoresistive film. (2) An ADC reads the current state of the memristor, which represents the historical tactile stimuli. (3) A DAC, controlled by FPGA logic, updates the memristor state based on these inputs. This shorter process reduces operating costs and improves data processing efficiency.
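The three-step memristor loop described above can be illustrated with a small simulation. This is only a minimal sketch under stated assumptions: `ToyMemristor`, its window-function update, the `tanh` voltage selection, and the `gain` and `rate` parameters are hypothetical stand-ins chosen for illustration, not the device model or FPGA logic used in this work.

```python
import math
from dataclasses import dataclass


@dataclass
class ToyMemristor:
    """Hypothetical bounded-state device (assumption, not the paper's model)."""
    state: float = 0.5  # normalized conductance in [0, 1]

    def read(self) -> float:
        # Step 2: an ADC would sample the stored state,
        # which encodes the history of tactile stimuli.
        return self.state

    def write(self, voltage: float, rate: float = 0.2) -> None:
        # Step 3: a DAC pulse shifts the state. The window term x * (1 - x)
        # makes the update nonlinear; the clamp keeps the state in [0, 1].
        x = self.state
        x += rate * voltage * x * (1.0 - x)
        self.state = min(1.0, max(0.0, x))


def step(device: ToyMemristor, sensor_reading: float, gain: float = 4.0) -> float:
    """One update cycle; sensor_reading stands for step 1 (ADC read of the film)."""
    history = device.read()
    # Assumed stand-in for the FPGA selection logic: the modulation voltage
    # depends on the difference between the new reading and the stored history.
    voltage = math.tanh(gain * (sensor_reading - history))
    device.write(voltage)
    return device.state


if __name__ == "__main__":
    device = ToyMemristor()
    for reading in [0.9, 0.9, 0.9, 0.1, 0.1]:
        print(round(step(device, reading), 3))
```

In this sketch the history lives in the device state itself, so each cycle needs only two reads and one write, which mirrors the reduction in data movement described above.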

In real-world settings, robotic tactile systems are required to process large amounts of tactile data and respond as quickly as possible, in less than 100 ms, similar to human tactile systems58,59. The current state-of-the-art robotic tactile technologies can process sudden changes in force, such as those in slip detection, at millisecond levels (from 500 μs to 50 ms)59,60,61,62, and the response time of our tactile system has also reached this level. For visual processing, suppose a vehicle travels at 40 km per hour in an urban area and requires control to be effective for every 1 m travelled. This requirement translates to a maximum allowable response time of 90 ms for the entire processing pipeline, which includes sensors, operating systems, middleware, and applications such as object detection, prediction, and vehicle control63,64. When our proposed memristor-assisted method is incorporated into conventional camera systems, the additional time delay comprises the delay from the filter circuits (less than 1 ms) and the switching time of the memristor device, which ranges from nanoseconds (ns) down to picoseconds (ps)21,65,66,67. Compared with the required overall response time of the pipeline, these additions are negligible, demonstrating the potential of applying our method in real-world driving scenarios68. Although our memristor-based perception method meets the response time requirement for the described scenarios, it faces several challenges that need to be addressed for real-world applications. Apart from common issues such as variability in device performance and the nonlinear dynamics of memristive responses, our approach needs to overcome the following challenges:

Currently, the modulation voltage applied to the memristors is preset based on the external sensory feature, and the control algorithm relies on hard-threshold comparison. This configuration lacks the flexibility required for diverse real-world environments, where sensory inputs and required responses can vary significantly. Therefore, it is crucial to develop a more automatic memristive modulation method, along with a control algorithm that can adjust dynamically to varying application scenarios.
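The 90 ms response-time budget quoted earlier for the driving scenario, and the claim that the added memristor delay is negligible against it, can be checked with a short calculation. The sketch below is illustrative only: the function names are hypothetical, the 1 ms filter delay is the upper bound stated in the text, and the 10 ns switching time is an assumed representative value within the nanosecond-to-picosecond range.

```python
def max_response_time_ms(speed_kmh: float, control_distance_m: float) -> float:
    """Time available to traverse control_distance_m at speed_kmh, in ms."""
    speed_m_per_s = speed_kmh * 1000.0 / 3600.0
    return control_distance_m / speed_m_per_s * 1000.0


def added_delay_fraction(budget_ms: float, filter_delay_ms: float,
                         switching_ms: float) -> float:
    """Share of the pipeline budget consumed by the added delays."""
    return (filter_delay_ms + switching_ms) / budget_ms


# 40 km/h with control effective every 1 m gives a budget of about 90 ms.
budget_ms = max_response_time_ms(40.0, 1.0)

# Filter circuits: less than 1 ms (from the text).
# Switching time: 10 ns = 1e-5 ms (assumed value for illustration).
fraction = added_delay_fraction(budget_ms, filter_delay_ms=1.0, switching_ms=1e-5)

print(f"budget: {budget_ms:.1f} ms, added delay share: {fraction:.2%}")
```

Under these assumptions the added delay uses a little over 1% of the budget and is dominated by the filter circuits rather than by the memristor switching time.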
