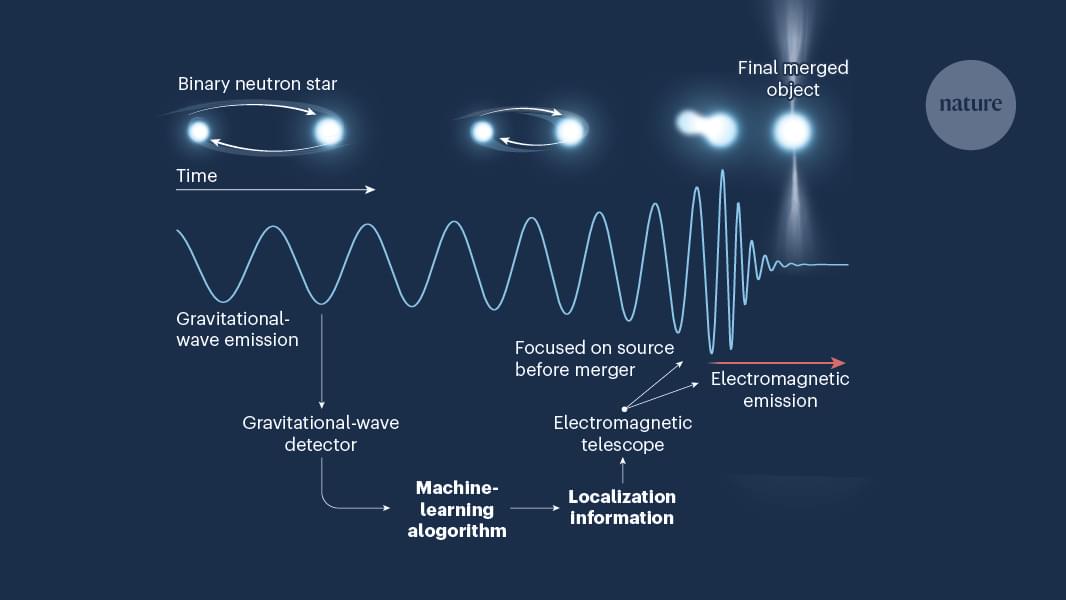

Machine-learning system precisely locates neutron-star mergers.

A fundamental goal of physics is to explain the broadest range of phenomena with the fewest underlying principles. Remarkably, seemingly disparate problems often exhibit identical mathematical descriptions.

For instance, the rate of heat flow can be modeled using an equation very similar to that governing the speed of particle diffusion. Another example involves wave equations, which apply to the behavior of both water and sound. Scientists continuously seek such connections, which are rooted in the principle of the “universality” of underlying physical mechanisms.
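To make the heat/diffusion parallel concrete: heat conduction obeys ∂T/∂t = α ∂²T/∂x² and particle diffusion obeys ∂c/∂t = D ∂²c/∂x², so a single solver can serve both. The sketch below is a minimal illustration, not taken from the study; the function name `diffuse`, the explicit finite-difference scheme, and all numerical values are assumptions chosen for the example.

```python
def diffuse(u, coeff, dx, dt, steps):
    """Advance du/dt = coeff * d2u/dx2 with an explicit finite-difference scheme.

    `u` can be a temperature profile (coeff = thermal diffusivity) or a
    concentration profile (coeff = diffusion coefficient): the update is identical.
    Both ends are treated as insulated (zero flux).
    """
    r = coeff * dt / dx ** 2
    if r > 0.5:
        raise ValueError("unstable step: need coeff * dt / dx**2 <= 0.5")
    u = list(u)
    for _ in range(steps):
        # Mirror the end values so that nothing flows through the boundaries.
        padded = [u[0]] + u + [u[-1]]
        u = [
            padded[i] + r * (padded[i - 1] - 2 * padded[i] + padded[i + 1])
            for i in range(1, len(padded) - 1)
        ]
    return u


if __name__ == "__main__":
    # A hot spot in a rod (illustrative values, SI units assumed).
    temperature = [0.0] * 10 + [100.0] + [0.0] * 10
    print(diffuse(temperature, coeff=1e-4, dx=0.01, dt=0.1, steps=50))

    # A blob of dye in still water: same function, different physical meaning.
    concentration = [0.0] * 10 + [1.0] + [0.0] * 10
    print(diffuse(concentration, coeff=1e-9, dx=1e-4, dt=1.0, steps=50))
```

Only the interpretation of `u` and `coeff` changes between the two calls, which is the sense in which the two problems share one mathematical description.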

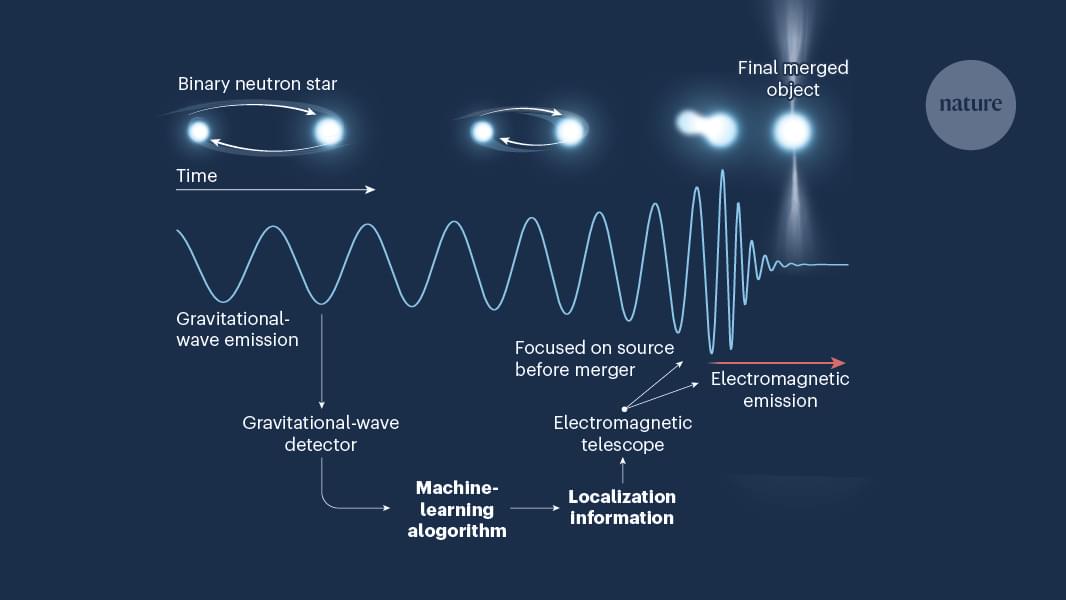

In a study published in the journal Royal Society Open Science, researchers from Osaka University uncovered an unexpected connection between the equations for defects in a crystalline lattice and a well-known formula from electromagnetism.

In human engineering, we design systems to be predictable and controlled. By contrast, nature thrives on systems where simple rules generate rich, emergent complexity. The computational nature of the universe explains how simplicity can generate the complexity we see in natural phenomena. Imagine being able to understand everything about the universe and solve all its mysteries with a computational approach that uses very simple rules. Rather than being limited to mathematical equations, we might use very basic computational rules to describe everything in the universe and to address questions such as: What happened at the very beginning? What is energy? What is the nature of dark matter? Is traveling faster than light possible? What is consciousness? Is there free will? How can we unify different theories of physics into one ultimate theory of everything?

This paradigm goes against the traditional notion that complexity in nature must arise from complicated origins. It claims that simple fundamental rules can produce astonishing complexity in behavior. Enter the Wolfram Physics Project: the computational universe!
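As a small illustration of simple rules producing complex behavior, the sketch below runs an elementary cellular automaton (Rule 30, a standard textbook example). It is not the hypergraph-rewriting system used in the physics project itself; the function names `step` and `run` and the default sizes are assumptions made for this example.

```python
def step(cells, rule=30):
    """Apply one update of an elementary cellular automaton.

    Each new cell depends only on its left neighbor, itself, and its right
    neighbor; cells beyond the ends of the row are treated as 0.
    """
    padded = [0] + cells + [0]
    new_cells = []
    for i in range(1, len(padded) - 1):
        # Encode the three-cell neighborhood as a number from 0 to 7 ...
        neighborhood = (padded[i - 1] << 2) | (padded[i] << 1) | padded[i + 1]
        # ... and read the matching bit of the rule number.
        new_cells.append((rule >> neighborhood) & 1)
    return new_cells


def run(width=31, steps=15, rule=30):
    """Start from a single live cell in the middle and record every row."""
    row = [0] * width
    row[width // 2] = 1
    history = [row]
    for _ in range(steps):
        row = step(row, rule)
        history.append(row)
    return history


if __name__ == "__main__":
    for row in run():
        print("".join("#" if cell else "." for cell in row))
```

The entire rule fits in one 8-bit number, yet the printed pattern quickly becomes irregular, which is the kind of behavior the paragraph above describes.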

Thousands of hours have been dedicated to the creation of this video. Producing another episode of this caliber would be difficult without your help. If you would like to see more, please consider supporting me on / disculogic, or via PayPal for a one-time donation at https://paypal.me/Disculogic.

Chapters:

00:00 Intro.

01:48 Fundamentally computational.

08:51 Computational irreducibility.

13:14 Causal invariance.

16:16 Universal computation.

18:44 Spatial dimensions.

21:36 Space curvature.

23:52 Time and causality.

27:12 Energy.

29:38 Quantum mechanics.

31:31 Faster than light travel.

34:56 Dark matter.

36:30 Critiques.

39:15 Meta-framework.

41:19 The ultimate rule.

44:21 Consciousness.

46:00 Free will.

48:02 Meaning and purpose.

49:09 Unification.

55:14 Further analysis.

01:02:30 Credits.

#science #universe #documentary

Although Navier–Stokes equations are the foundation of modern hydrodynamics, adapting them to quantum systems has so far been a major challenge. Researchers from the Faculty of Physics at the University of Warsaw, Maciej Łebek, M.Sc. and Miłosz Panfil, Ph.D., Prof., have shown that these equations can be generalized to quantum systems, specifically quantum liquids, in which the motion of particles is restricted to one dimension.

This discovery opens up new avenues for research into transport in one-dimensional quantum systems. The resulting paper, published in Physical Review Letters, was awarded an Editors’ Suggestion.

Liquids are among the basic states of matter and play a key role in nature and technology. The equations of hydrodynamics, known as the Navier–Stokes equations, describe their motion and their interactions with the environment. Solutions to these equations allow us to predict the behavior of fluids under various conditions, from ocean currents and blood flow in vessels to the dynamics of quark-gluon plasma on subatomic scales.

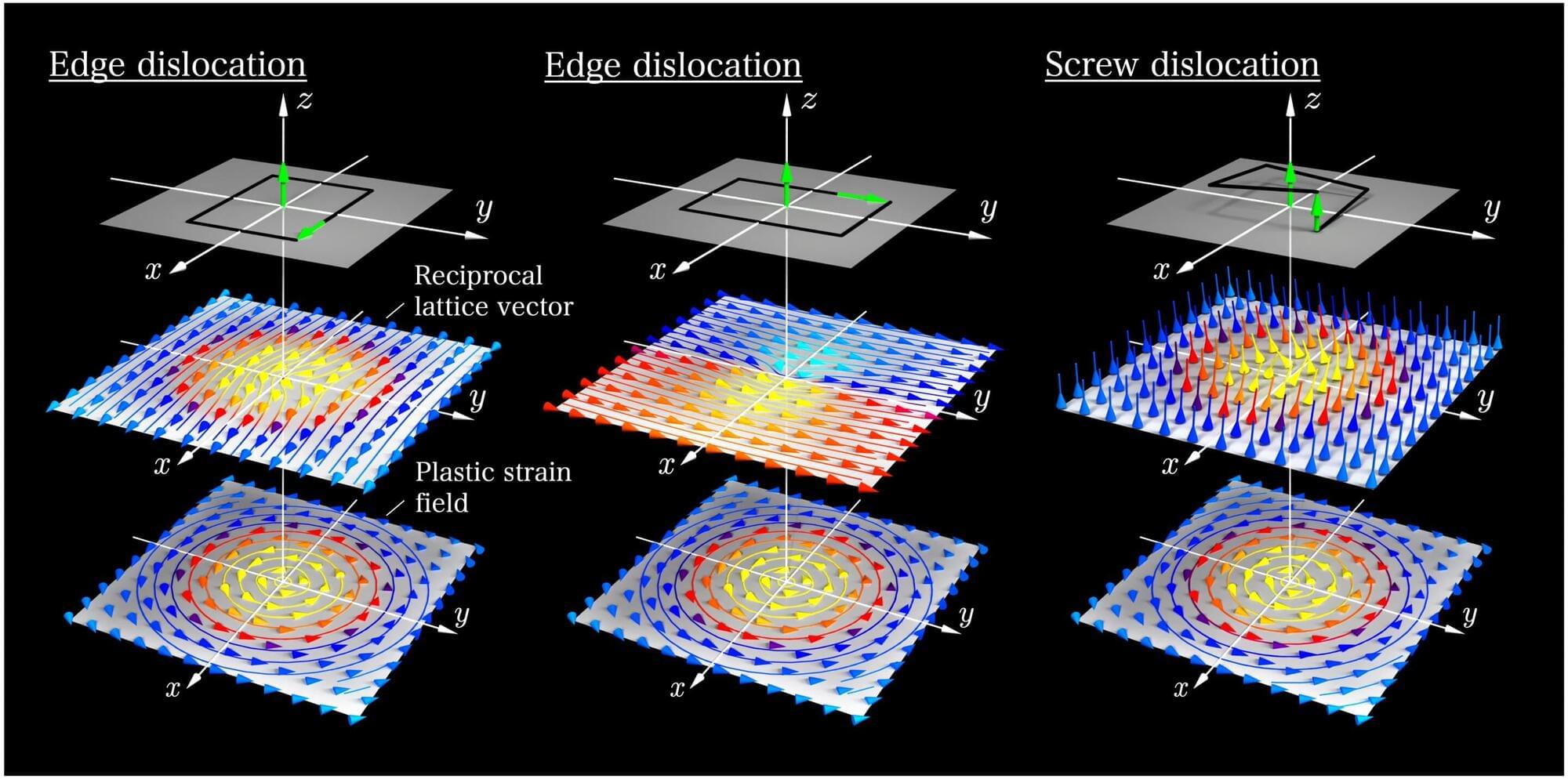

Light was long considered to be a wave: it exhibits interference, in which overlapping waves reinforce or cancel one another much as ripples on water do, and it bends around corners, producing fringes, a phenomenon termed diffraction. In the wave picture, the energy of light is associated with its intensity, which is proportional to the square of the amplitude of the electric field. In the photoelectric effect, however, the maximum energy of the emitted electrons is found to increase linearly with the frequency of the radiation, independent of its intensity.

This observation was first made by Philipp Lenard, who did initial work on the photoelectric effect. In order to explain this, in 1905, Einstein suggested in Annalen der Physik that light comprises quantized packets of energy, which came to be called photons. It led to the theory of the dual nature of light, according to which light can behave like a wave or a particle depending on its interactions, paving the way for the birth of quantum mechanics.
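Einstein's explanation can be written as K_max = hν − φ, where hν is the energy of one photon and φ is the work function of the metal. The sketch below evaluates that relation; the function name and the work function of 2.3 eV are illustrative assumptions, not values from the text.

```python
PLANCK_J_S = 6.62607015e-34        # Planck constant in J*s (exact SI value)
ELECTRON_VOLT_J = 1.602176634e-19  # one electronvolt in joules (exact SI value)


def max_kinetic_energy_ev(frequency_hz, work_function_ev):
    """Maximum kinetic energy of a photoelectron, in eV.

    Returns 0.0 when a single photon carries less energy than the work
    function, i.e. below the threshold frequency no electrons are emitted.
    """
    photon_energy_ev = PLANCK_J_S * frequency_hz / ELECTRON_VOLT_J
    return max(0.0, photon_energy_ev - work_function_ev)


if __name__ == "__main__":
    work_function_ev = 2.3  # illustrative assumption
    for frequency_hz in (4e14, 6e14, 8e14, 1e15):
        energy = max_kinetic_energy_ev(frequency_hz, work_function_ev)
        print(f"{frequency_hz:.1e} Hz -> {energy:.3f} eV")
```

Intensity does not appear anywhere in the function: in this picture, brighter light of the same frequency ejects more electrons, not more energetic ones.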

Although Einstein’s work on photons found broader acceptance, eventually leading to his Nobel Prize in Physics, Einstein was not fully convinced. He wrote in a 1951 letter, “All the 50 years of conscious brooding have brought me no closer to the answer to the question: What are light quanta?”

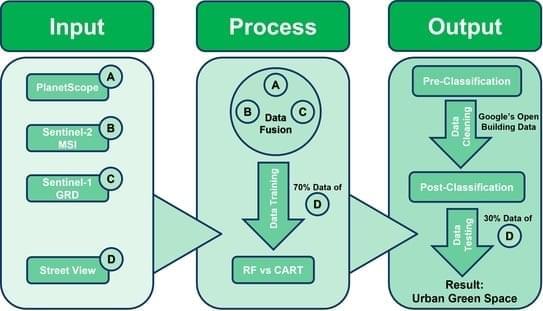

Jakarta holds the distinction of being the largest capital city among ASEAN countries and ranks as the second-largest metropolitan area in the world, following Tokyo. Despite numerous studies examining the diverse urban land use and land cover patterns within the city, the recent state of its urban green spaces has not been adequately assessed or precisely mapped. Most previous studies have focused primarily on urban built-up areas and manmade structures. In this research, the first detailed map of Jakarta's urban green spaces as of 2023 was generated at a resolution of three meters. The study used supervised classification and evaluated two machine learning algorithms to achieve the highest possible accuracy.

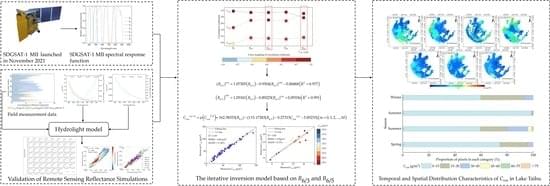

Inland waters contain multiple constituents at varying concentrations, and resolving the interference from chlorophyll-a and colored dissolved organic matter (CDOM) can help to accurately invert the total suspended matter concentration (Ctsm). In this study, based on the characteristics of the Multispectral Imager for Inshore (MII) carried aboard the first Sustainable Development Goals Science Satellite (SDGSAT-1), an iterative inversion model built on iterative multiple linear regression was established to estimate Ctsm. The Hydrolight radiative transfer model was used to simulate the radiative transfer process in Lake Taihu and to analyze the effect of the three component concentrations on remote sensing reflectance.

We speak with Sakana AI, who are building nature-inspired methods that could fundamentally transform how we develop AI systems.

The guests include Chris Lu, a researcher who recently completed his DPhil at Oxford University under Prof. Jakob Foerster’s supervision, where he focused on meta-learning and multi-agent systems. Chris is the first author of the DiscoPOP paper, which demonstrates how language models can discover and design better training algorithms. Also joining is Robert Tjarko Lange, a founding member of Sakana AI who specializes in evolutionary algorithms and large language models. Robert leads research at the intersection of evolutionary computation and foundation models, and is completing his PhD at TU Berlin on evolutionary meta-learning. The discussion also features Cong Lu, currently a Research Scientist at Google DeepMind’s Open-Endedness team, who previously helped develop The AI Scientist and Intelligent Go-Explore.

Rather than simply scaling up models with more parameters and data, they’re drawing inspiration from biological evolution to create more efficient and creative AI systems. The team explains how their Tokyo-based startup, founded in 2023 with $30 million in funding, aims to harness principles like natural selection and emergence to develop next-generation AI.
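For readers unfamiliar with evolutionary methods, the sketch below shows the basic loop of variation followed by selection on a toy problem. It is a generic textbook-style example, not Sakana AI's method; the objective, the function names, and every parameter value are assumptions made for illustration.

```python
import random


def fitness(candidate):
    """Toy objective: squared distance from the origin (smaller is better)."""
    return sum(value * value for value in candidate)


def evolve(pop_size=20, dims=5, generations=100, sigma=0.3, seed=0):
    """Run a simple evolutionary loop and return the best candidate found.

    Also returns the best fitness after each generation, so progress
    can be inspected.
    """
    rng = random.Random(seed)
    population = [
        [rng.uniform(-5.0, 5.0) for _ in range(dims)] for _ in range(pop_size)
    ]
    best_per_generation = []
    for _ in range(generations):
        # Variation: every parent produces one randomly mutated child.
        children = [
            [value + rng.gauss(0.0, sigma) for value in parent]
            for parent in population
        ]
        # Selection: only the fittest individuals among parents and children survive.
        population = sorted(population + children, key=fitness)[:pop_size]
        best_per_generation.append(fitness(population[0]))
    return population[0], best_per_generation


if __name__ == "__main__":
    best, history = evolve()
    print("best fitness, first generation:", history[0])
    print("best fitness, last generation:", history[-1])
```

Because parents compete with their children for survival, the best fitness can never get worse from one generation to the next; no gradient information is used at any point.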

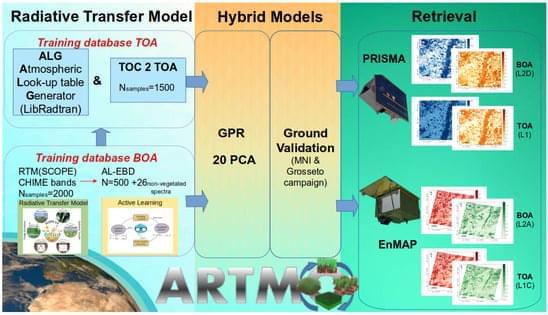

Satellite-based optical remote sensing from missions such as ESA's Sentinel-2 (S2) has emerged as a valuable tool for continuously monitoring the Earth's surface, making it particularly useful for quantifying key cropland traits in the context of sustainable agriculture [1]. Upcoming operational imaging spectroscopy satellite missions will have an improved capability to routinely acquire spectral data over vast cultivated regions, thereby providing an entire suite of products for agricultural system management [2]. The Copernicus Hyperspectral Imaging Mission for the Environment (CHIME) [3] will complement the multispectral Copernicus S2 mission, thus providing enhanced services for sustainable agriculture [4, 5]. To use satellite spectral data for quantifying vegetation traits, it is crucial to remove from the measured satellite data the absorption and scattering effects caused by molecules and aerosols in the atmosphere. This data processing step, known as atmospheric correction, converts top-of-atmosphere (TOA) radiance data into bottom-of-atmosphere (BOA) reflectance, and it is one of the most challenging satellite data processing steps (e.g., [6, 7, 8]). Atmospheric correction relies on inverting an atmospheric radiative transfer model (RTM) to obtain surface reflectance, typically through the interpolation of large precomputed lookup tables (LUTs) [9, 10]. The LUT interpolation errors, the intrinsic uncertainties of the atmospheric RTMs, and the ill-posedness of the inversion of atmospheric characteristics generate uncertainties in atmospheric correction [11]. In addition, topographic, adjacency, and bidirectional surface reflectance corrections are usually applied sequentially in processing chains, which can accumulate errors in the BOA reflectance data [6]. Thus, despite its importance, the inversion of surface reflectance data unavoidably introduces uncertainties that can affect downstream analyses and reduce the accuracy and reliability of subsequent products and algorithms, such as vegetation trait retrieval [12]. In other words, the accuracy of vegetation trait retrievals is prone to uncertainty when atmospheric correction is not properly performed [13].

Although advanced atmospheric correction schemes have become an integral part of the operational processing of satellite missions (e.g., [9,14,15]), standardised exhaustive atmospheric correction schemes for drone, airborne, or scientific satellite missions remain less prevalent (e.g., [16,17]). The complexity of atmospheric correction increases further when moving from multispectral to hyperspectral data, where rigorous atmospheric correction needs to be applied to hundreds of narrow contiguous spectral bands (e.g., [6,8,18]). To bypass these challenges, several studies have instead proposed inferring vegetation traits directly from radiance data at the top of the atmosphere [12,19,20,21,22,23,24,25,26].