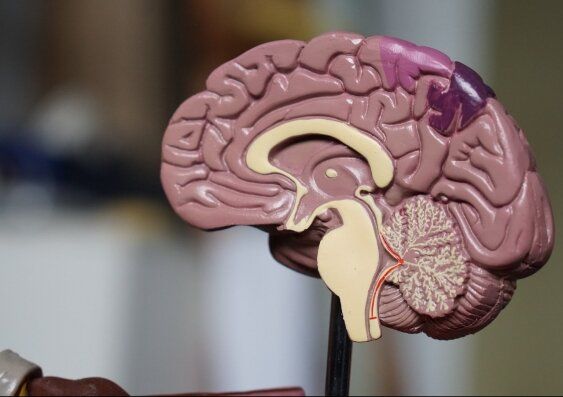

David Sinclair wants to slow down and ultimately reverse aging. Sinclair sees aging as a disease, and he is convinced that it is driven by epigenetic changes: over time, the chemical marks and structures that tell each cell which genes to read become disordered, partly because cells must repeatedly repair damaged DNA. The result is a loss of the information that keeps our cells healthy and tells them which genes to switch on or off. In his book "Lifespan: Why We Age, and Why We Don't Have To", Sinclair describes his research, his theories and his scientific philosophy, as well as the potential consequences of the significant progress in genetic technologies.

At present, researchers are only just beginning to understand the biological basis of aging, even in relatively simple and short-lived organisms such as yeast. Sinclair, however, makes a convincing argument that genetic engineering will eventually make it possible to prolong life.

He and his team recently developed two artificial intelligence algorithms that predict the biological age of mice and when they will die. This could pave the way for similar machine learning models in people.

According to Sinclair, the loss of epigenetic information is likely the root cause of aging. By analogy, if DNA is the digital information on a compact disc, then aging is due to scratches. What we are searching for is the polish.

Every time a cell divides, it copies its DNA so that each new cell receives all the genetic information, and this process is not perfect. In particular, the ends of the chromosomes (the telomeres) cannot be copied completely, so they shorten a little with each division.

However, progress in genetic engineering suggests that these changes can be reversed, at least at the cellular level and in animal experiments. If so, it may be possible to restore the lost information in our cells, improving the functioning of our organs and slowing the aging process.

#Aging #DavidSinclair #Lifespan