Many believe AI will bring the end of the world, but Emad Mostaque (CEO of Stability AI) believes AI (and humans) can.

Recent progress in AI has been startling. Barely a week’s gone by without a new algorithm, application, or implication making headlines. But OpenAI, the source of much of the hype, only recently completed their flagship algorithm, GPT-4, and according to OpenAI CEO Sam Altman, its successor, GPT-5, hasn’t begun training yet.

It’s possible the tempo will slow down in coming months, but don’t bet on it. A new AI model as capable as GPT-4, or more so, may drop sooner rather than later.

This week, in an interview with Will Knight, Google DeepMind CEO Demis Hassabis said their next big model, Gemini, is currently in development, “a process that will take a number of months.” Hassabis said Gemini will be a mashup drawing on AI’s greatest hits, most notably DeepMind’s AlphaGo, which employed reinforcement learning to topple a champion at Go in 2016, years before experts expected the feat.

AI applications are summarizing articles, writing stories and engaging in long conversations — and large language models are doing the heavy lifting.

A large language model, or LLM, is a deep learning algorithm that can recognize, summarize, translate, predict and generate text and other forms of content based on knowledge gained from massive datasets.
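To make "predict and generate text based on knowledge gained from datasets" concrete, here is a toy sketch in Python. It is not how an LLM is built: it swaps the deep learning model for simple word-pair counts (a bigram model) and uses a made-up one-line corpus. The function names `train_bigram`, `predict_next`, and `generate` are hypothetical, chosen only for this illustration.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def generate(follows, start, max_words=5):
    """Generate text by repeatedly appending the predicted next word."""
    out = [start.lower()]
    while len(out) < max_words:
        nxt = predict_next(follows, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return " ".join(out)


# Made-up training text (an assumption for illustration only).
corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigram(corpus)

print(predict_next(model, "the"))
print(generate(model, "on", max_words=3))
```

A real LLM replaces the count table with a neural network trained on vastly more text, but the loop of "predict the next token, append it, repeat" is the same basic idea.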

Large language models are among the most successful applications of transformer models. They aren’t just for teaching AIs human languages, but for understanding proteins, writing software code, and much, much more.

New products like ChatGPT have captivated the public, but what will the actual money-making applications be? Will they offer sporadic business success stories lost in a sea of noise, or are we at the start of a true paradigm shift? What will it take to develop AI systems that are actually workable?

To chart AI’s future, we can draw valuable lessons from the preceding step-change advance in technology: the Big Data era.

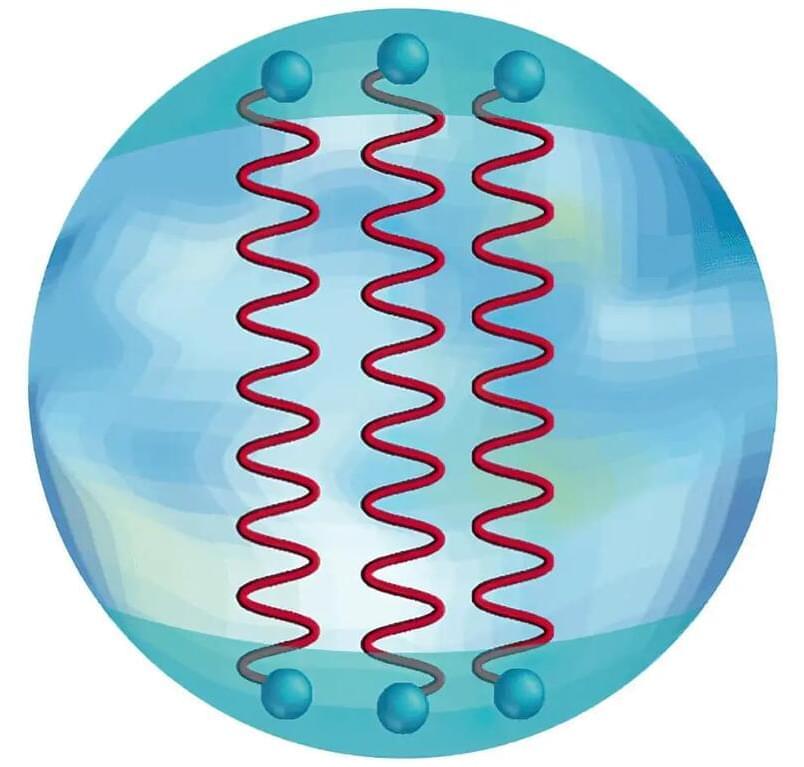

Scientists at Brookhaven National Laboratory have used two-dimensional condensed matter physics to understand the quark interactions in neutron stars, simplifying the study of these densest cosmic entities. This work helps to describe low-energy excitations in dense nuclear matter and could unveil new phenomena in extreme densities, propelling advancements in the study of neutron stars and comparisons with heavy-ion collisions.

Understanding the behavior of nuclear matter—including the quarks and gluons that make up the protons and neutrons of atomic nuclei—is extremely complicated. This is particularly true in our world, which is three dimensional. Mathematical techniques from condensed matter physics that consider interactions in just one spatial dimension (plus time) greatly simplify the challenge. Using this two-dimensional approach, scientists solved the complex equations that describe how low-energy excitations ripple through a system of dense nuclear matter. This work indicates that the center of neutron stars, where such dense nuclear matter exists in nature, may be described by an unexpected form.

Welcome to an enlightening journey through the 7 Stages of AI, a comprehensive exploration into the world of artificial intelligence. If you’ve ever wondered about the stages of AI, or are interested in how the 7 stages of artificial intelligence shape our technological world, this video is your ultimate guide.

Artificial Intelligence (AI) is revolutionizing our daily lives and industries across the globe. Understanding the 7 stages of AI, from rudimentary algorithms to advanced machine learning and beyond, is vital to fully grasp this complex field. This video delves deep into each stage, providing clear explanations and real-world examples that make the concepts accessible for everyone, regardless of their background.

Throughout this video, we demystify the fascinating progression of AI, starting from the basic rule-based systems, advancing through machine learning, deep learning, and the cutting-edge concept of self-aware AI. Not only do we discuss the technical aspects of these stages, but we also explore their societal implications, making this content valuable for technologists, policy makers, and curious minds alike.

Leveraging our in-depth knowledge, we illuminate the intricate complexities of artificial intelligence’s 7 stages. By the end of the video, you’ll have gained a robust understanding of the stages of AI, the applications and potential of each stage, and the future trajectory of this game-changing technology.

Humans rely increasingly on sensors to address grand challenges and to improve quality of life in the era of digitalization and big data. For ubiquitous sensing, flexible sensors are developed to overcome the limitations of conventional rigid counterparts. Despite rapid advancement in bench-side research over the last decade, the market adoption of flexible sensors remains limited. To ease and to expedite their deployment, here, we identify bottlenecks hindering the maturation of flexible sensors and propose promising solutions. We first analyze challenges in achieving satisfactory sensing performance for real-world applications and then summarize issues in compatible sensor-biology interfaces, followed by brief discussions on powering and connecting sensor networks.

Italian fashion start-up Cap_able has launched a collection of knitted clothing that protects the wearer’s biometric data without the need to cover their face.

Named Manifesto Collection, the clothing features various patterns developed by artificial intelligence (AI) algorithms to shield the wearer’s facial identity and instead identify them as animals.

Cap_able designed the clothing with patterns – known as adversarial patches – to deceive facial recognition software in real time.