Ticking clocks and flashing fireflies that start out of sync will fall into sync, a tendency that has been observed for centuries. A discovery two decades ago therefore came as a surprise: a population of identical coupled oscillators can also split into coexisting synchronized and unsynchronized groups. This behavior, dubbed a chimera state, is generic in systems of identical coupled oscillators and requires only that the coupling be nonlocal. Now Yasuhiro Yamada and Kensuke Inaba of NTT Basic Research Laboratories in Japan show that chimera states can be analyzed using a lattice model (the XY model) developed to understand antiferromagnetism [1]. Beyond establishing a pleasing correspondence, Yamada and Inaba say, their finding offers a path to studying the partial synchronization of neurons that underlies brain function and dysfunction.
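To make "nonlocal coupling" concrete, here is a minimal sketch of a ring of identical phase oscillators in the style of the ring model Abrams and Strogatz used to study chimera states. Every oscillator is coupled to every other one, with a strength that falls off with distance along the ring. The kernel, the parameter values (a = 0.995, beta = 0.18), the initial condition, the forward-Euler integrator, and the function name `simulate_ring` are all assumptions chosen for illustration. This is not the neural network Yamada and Inaba analyzed.

```python
import numpy as np


def simulate_ring(n=256, a=0.995, beta=0.18, dt=0.025, steps=4000, seed=0):
    """Integrate n identical phase oscillators on a ring with nonlocal coupling.

    Assumed model (illustrative sketch, not the authors' network):
        dtheta_k/dt = -(2*pi/n) * sum_j G(x_k - x_j) * sin(theta_k - theta_j + alpha)
    with kernel G(x) = (1 + a*cos x) / (2*pi) and phase lag alpha = pi/2 - beta.
    The common natural frequency omega is dropped (rotating frame), which
    leaves all relative phases unchanged.
    """
    rng = np.random.default_rng(seed)
    # Positions of the oscillators, evenly spaced on [-pi, pi).
    x = -np.pi + 2 * np.pi * np.arange(n) / n
    alpha = np.pi / 2 - beta
    # Coupling matrix: strongest between near neighbours, weaker far away.
    kernel = (1 + a * np.cos(x[:, None] - x[None, :])) / (2 * np.pi)
    # Localized random bump of phases; an assumed seed for a chimera.
    theta = 6 * rng.uniform(-0.5, 0.5, n) * np.exp(-0.76 * x**2)
    for _ in range(steps):
        # Pairwise phase differences plus the phase lag, shape (n, n).
        diff = theta[:, None] - theta[None, :] + alpha
        dtheta = -(2 * np.pi / n) * np.sum(kernel * np.sin(diff), axis=1)
        theta = theta + dt * dtheta  # forward Euler step
    return x, np.mod(theta, 2 * np.pi)
```

Plotting the returned phases against `x` after a long run may show a flat, coherent stretch beside a scattered, incoherent one. Whether a chimera actually appears depends on the initial condition, the parameters, and the run length.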

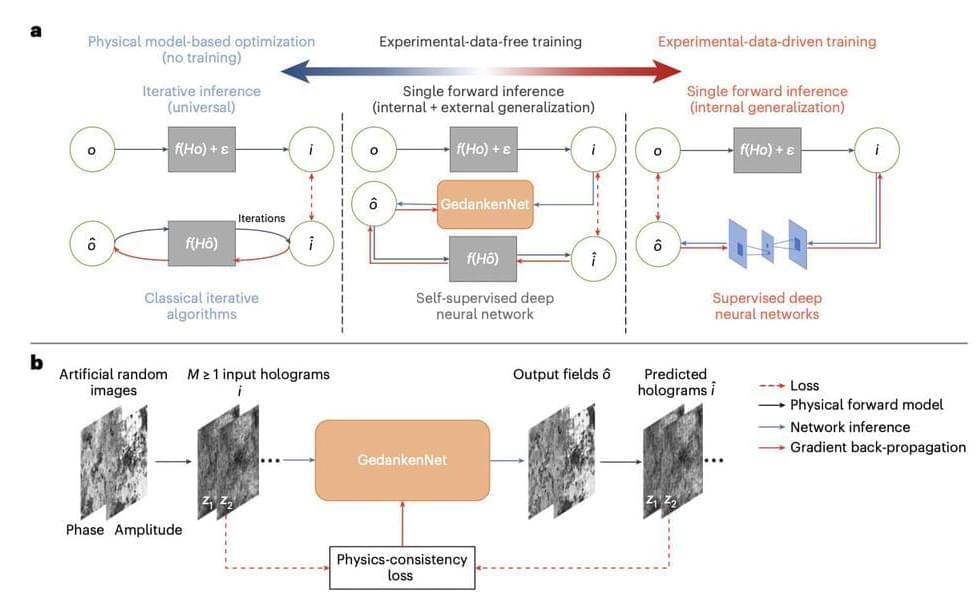

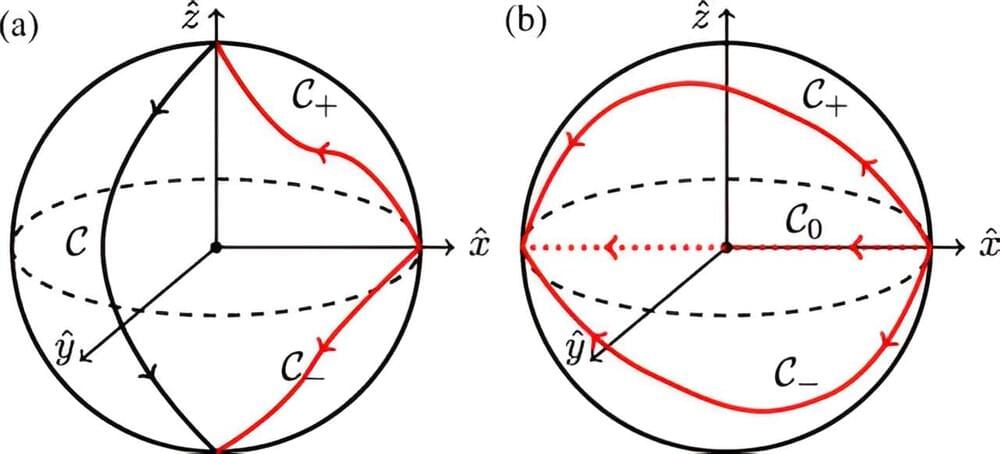

The chimera states of a system are typically analyzed by tracking how the relative phases of the coupled oscillators fall in and out of sync. But that approach struggles when the system contains widely separated pockets of synchrony or when the oscillators adopt nontrivial configurations, such as twisted or spiral waves. It also requires knowledge of the network's structure and of the oscillators' equations of motion.
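One common way to quantify the relative-phase picture is the Kuramoto order parameter r = |⟨e^{iθ}⟩|, which is close to 1 for a synchronized group and close to 0 for an incoherent one. Evaluating it over a sliding window of neighbors highlights local pockets of synchrony. The sketch below assumes the oscillators sit on a ring with known neighbors, which illustrates why this approach needs the network's structure. The function names `order_parameter` and `local_order_parameter` and the window size are hypothetical choices.

```python
import cmath
import math


def order_parameter(phases):
    """Kuramoto order parameter r = |mean(exp(i*theta))|.

    r = 1 means all phases are equal (perfect sync); r near 0 means incoherence.
    """
    z = sum(cmath.exp(1j * th) for th in phases) / len(phases)
    return abs(z)


def local_order_parameter(phases, half_width):
    """Order parameter over a window of 2*half_width + 1 neighbours on a ring.

    Assumes oscillator k's neighbours are k-half_width ... k+half_width (mod n).
    """
    n = len(phases)
    out = []
    for k in range(n):
        window = [phases[(k + d) % n] for d in range(-half_width, half_width + 1)]
        out.append(order_parameter(window))
    return out


# Usage: first half in sync, second half cycling through 5 evenly spaced phases.
phases = [0.0] * 20 + [2 * math.pi * k / 5 for k in range(20)]
local_r = local_order_parameter(phases, half_width=2)
# local_r is close to 1 in the synchronized half and close to 0 in the other.
```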

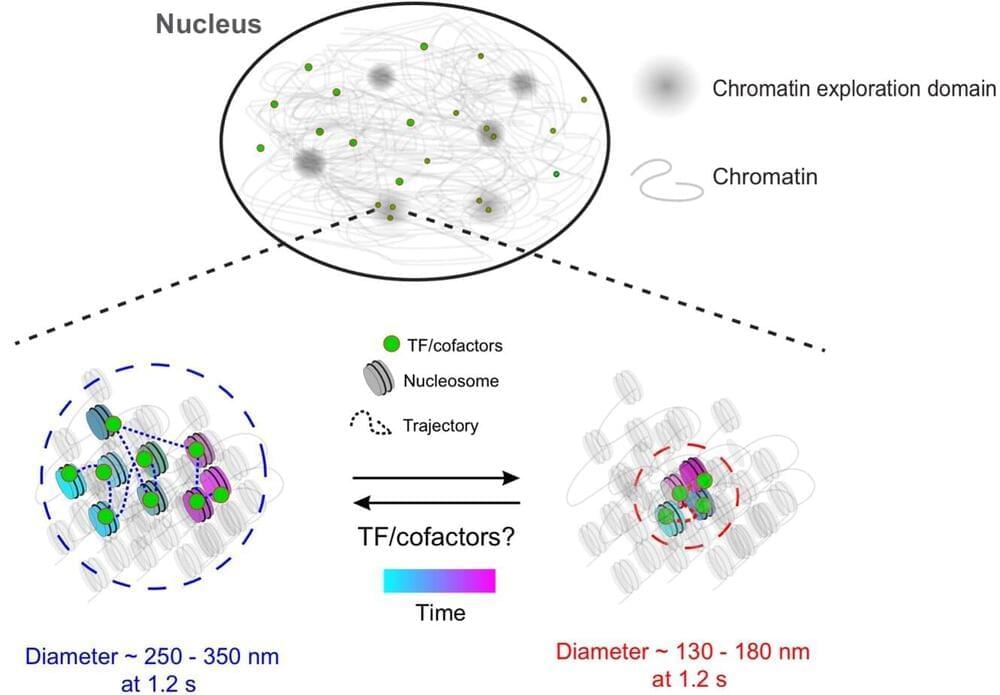

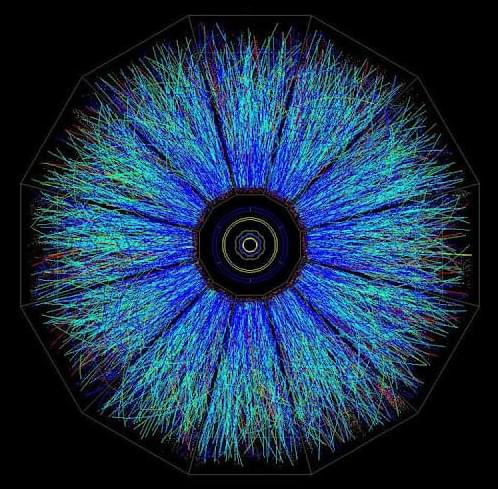

In seeking an alternative approach, Yamada and Inaba turned to a two-dimensional lattice model used to study phase transitions in condensed-matter systems. A crucial ingredient in that model is a topological defect called a vortex. Yamada and Inaba found that they could capture the asynchronous dynamics of pairs of oscillators by formulating the problem in terms of an analogous quantity that they call pseudovorticity: its absence indicates synchrony, and its presence indicates asynchrony. Their calculations show that their lattice model, recast in terms of pseudovorticity, successfully reproduces the chimera-state behavior of a simulated neural network made up of 200 model oscillators of a type commonly used to study brain activity.
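For readers unfamiliar with lattice vortices, the sketch below shows the conventional way to detect them in an XY-model configuration. Around each elementary square (plaquette) of the lattice, sum the angle differences between neighboring sites, each wrapped into [-π, π). The total is 2πq, where q = +1 marks a vortex, q = -1 an antivortex, and q = 0 no defect. This illustrates only the standard XY vortex. Yamada and Inaba's pseudovorticity is an analogous quantity defined for oscillator dynamics, and its exact definition is not reproduced here. The function names `wrap` and `vorticity` are hypothetical.

```python
import math


def wrap(d):
    """Map an angle difference into [-pi, pi)."""
    return d - 2 * math.pi * math.floor((d + math.pi) / (2 * math.pi))


def vorticity(theta):
    """Winding number of each elementary plaquette of a 2D grid of angles.

    theta[i][j] is the spin angle at row i, column j. Each plaquette is
    traversed (i,j) -> (i,j+1) -> (i+1,j+1) -> (i+1,j) -> (i,j), and the
    wrapped angle differences along that loop are summed.
    Returns a (rows-1) x (cols-1) grid of integers q.
    """
    rows, cols = len(theta), len(theta[0])
    q = [[0] * (cols - 1) for _ in range(rows - 1)]
    for i in range(rows - 1):
        for j in range(cols - 1):
            loop = [theta[i][j], theta[i][j + 1], theta[i + 1][j + 1],
                    theta[i + 1][j], theta[i][j]]
            total = sum(wrap(b - a) for a, b in zip(loop, loop[1:]))
            q[i][j] = round(total / (2 * math.pi))
    return q


# Usage: a single vortex centred inside the middle plaquette of a 4 x 4 grid.
grid = [[math.atan2(i - 1.5, j - 1.5) for j in range(4)] for i in range(4)]
charges = vorticity(grid)
# charges[1][1] == 1; every other plaquette has charge 0.
```

Wrapping each difference into [-π, π) assumes neighboring spins differ by less than π. In a uniform, fully aligned configuration every plaquette therefore gives q = 0, the lattice analog of the "no pseudovorticity, synchrony" case described above.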