
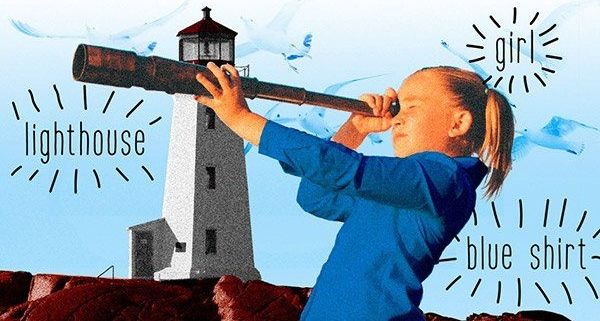

Computer scientists at MIT have developed a machine-learning system that can identify objects in an image based on a spoken description of the image.

Typical speech recognition systems like Google Voice and Siri rely on transcriptions of thousands of hours of speech recordings, which are then used to map speech signals to specific words.

The MIT system, still in its early stages, learns words from recorded speech clips and objects from images, and then links the two. It can recognize several hundred different words and objects so far, with the expectation that future versions will scale further.

