📱 🔭 📡 A new episode of #Avances begins, where, together with Daniel Silva, we learn more about technology and innovation.

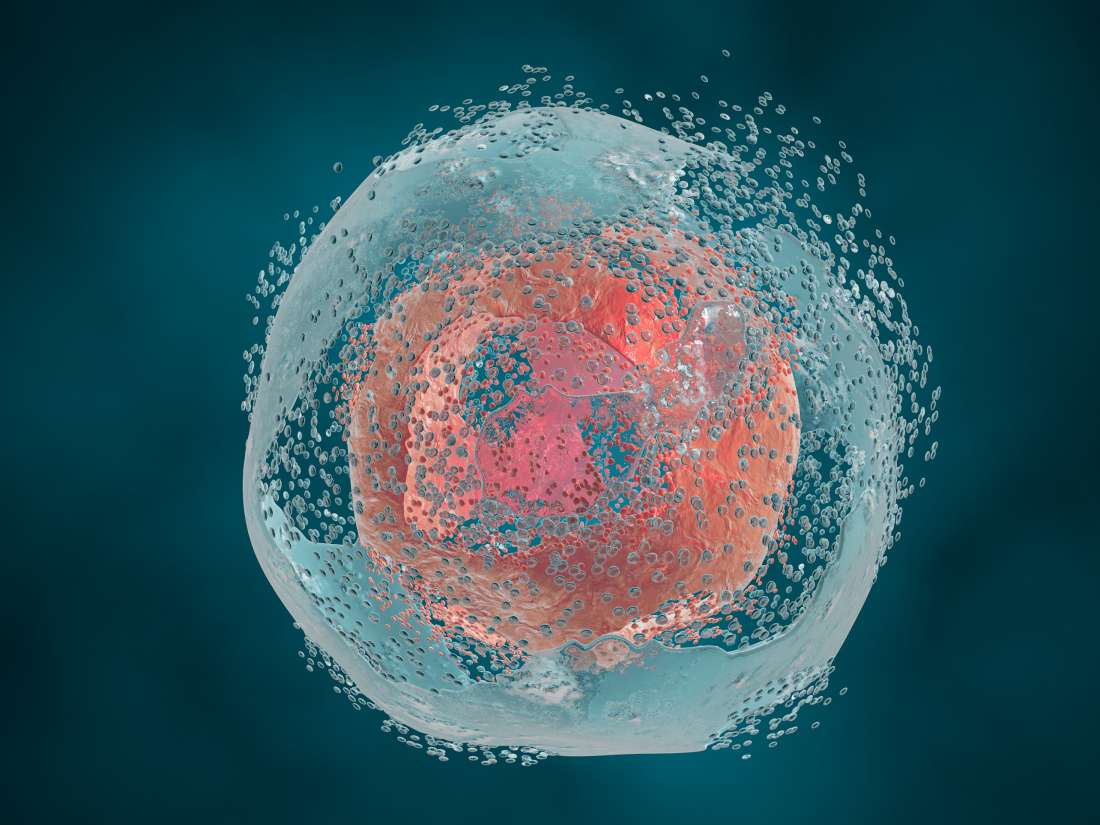

Journal Club June 2018 — Age-related changes to the nuclear membrane

For the June edition of Journal Club, we will be taking a look at the recent paper entitled “Changes at the nuclear lamina alter binding of pioneer factor Foxa2 in aged liver”.

If you like watching these streams and/or would like to participate in future streams, please consider supporting us by becoming a Lifespan Hero: https://www.lifespan.io/hero

This Table Saw Could Save Your Fingers From Getting Amputated

Sawed-off fingers are a thing of the past with this technology. Here’s how SawStop does it. (via @Seeker)

A Second Magnetic Field Surrounding Our Planet Has Been Detected

Earth’s Second Magnetic Field.