Did you know that you were being scanned via that rather colorful GUI as seen from the back of the car?


ALON, or transparent aluminum, is a ceramic composed of aluminium, oxygen and nitrogen. Transparent aluminum was once pure science fiction, a technical term used in a Star Trek movie from the 1980s.

In the movie Star Trek IV: The Voyage Home, Captain Kirk and his team go back in time to acquire two whales from the past and transport them back to the future. Scotty needed some materials to make a holding tank for the whales on his ship, but had no money to pay for them.

So Scotty uses his knowledge of 23rd-century technology and the manufacturer's computer to program in how to make the transparent aluminum molecule.

Transparent aluminum, or aluminum oxynitride (ALON), is much stronger than standard glass. Over time it should become cheaper to make, but until then it will most likely be used mainly by NASA and the military.


____________________________________________________________________

A research team led by Osaka University demonstrated how information encoded in the circular polarization of a laser beam can be translated into the spin state of an electron in a quantum dot, each being a quantum bit and a quantum computer candidate. The achievement represents a major step towards a “quantum internet,” in which future computers can rapidly and securely send and receive quantum information.

Quantum computers have the potential to vastly outperform current systems because they work in a fundamentally different way. Instead of processing discrete ones and zeros, quantum information, whether stored in electron spins or transmitted by laser photons, can be in a superposition of multiple states simultaneously. Moreover, the states of two or more objects can become entangled, so that the status of one cannot be completely described without the other. Handling entangled states allows quantum computers to evaluate many possibilities simultaneously, as well as transmit information from place to place immune from eavesdropping.
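To make superposition and entanglement a little more concrete, here is a minimal sketch in plain Python. It is an illustration only, not anything from the Osaka experiment: a two-qubit state is modelled as four amplitudes for the basis states 00, 01, 10 and 11, and the helper names `probabilities` and `is_entangled` are made up for this example. The entanglement check relies on the standard fact that a two-qubit pure state can be split into two independent one-qubit states only when `a00*a11 - a01*a10` equals zero.

```python
import math

def probabilities(state):
    """Measurement probabilities for the outcomes 00, 01, 10, 11."""
    return [abs(amplitude) ** 2 for amplitude in state]

def is_entangled(state, tol=1e-12):
    """True if the two-qubit pure state cannot be written as a product
    of two independent one-qubit states (hypothetical helper)."""
    a00, a01, a10, a11 = state
    return abs(a00 * a11 - a01 * a10) > tol

s = 1 / math.sqrt(2)

# Bell state: an equal superposition of 00 and 11. Measuring one qubit
# fixes the outcome of the other, so neither has a state of its own.
bell = [s, 0, 0, s]

# Product state: each qubit is separately in an equal superposition.
product = [0.5, 0.5, 0.5, 0.5]

print(probabilities(bell))
print(is_entangled(bell), is_entangled(product))
```

Both states are superpositions, but only the Bell state is entangled: the product state can be described one qubit at a time.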

However, these entangled states can be very fragile, lasting only microseconds before losing coherence. To realize the goal of a quantum internet, over which coherent light signals can relay quantum information, these signals must be able to interact with electron spins inside distant computers.

Mechanical engineers have discovered a way to produce more electricity from heat than thought possible by creating a silicon chip, also known as a ‘device,’ that converts more thermal radiation into electricity. This could lead to devices such as laptop computers and cellphones with much longer battery life and solar panels that are much more efficient at converting radiant heat to energy.

Sometimes the best discoveries happen when scientists least expect them. While trying to replicate another team’s finding, Stanford physicists recently stumbled upon a novel form of magnetism, predicted but never seen before, that is generated when two honeycomb-shaped lattices of carbon are carefully stacked and rotated to a special angle.

The authors suggest the magnetism, called orbital ferromagnetism, could prove useful for certain applications, such as quantum computing. The group describes their finding in the July 25 issue of the journal Science.

“We were not aiming for magnetism. We found what may be the most exciting thing in my career to date through partially targeted and partially accidental exploration,” said study leader David Goldhaber-Gordon, a professor of physics at Stanford’s School of Humanities and Sciences. “Our discovery shows that the most interesting things turn out to be surprises sometimes.”

Abstract: The large, error-correcting quantum computers envisioned today could be decades away, yet experts are vigorously trying to come up with ways to use existing and near-term quantum processors to solve useful problems despite limitations due to errors or “noise.”

A key envisioned use is simulating molecular properties. In the long run, this can lead to advances in materials improvement and drug discovery. But not with noisy calculations confusing the results.

Now, a team of Virginia Tech chemistry and physics researchers has advanced quantum simulation by devising an algorithm that can more efficiently calculate the properties of molecules on a noisy quantum computer. Virginia Tech College of Science faculty members Ed Barnes, Sophia Economou, and Nick Mayhall recently published a paper in Nature Communications detailing the advancement.

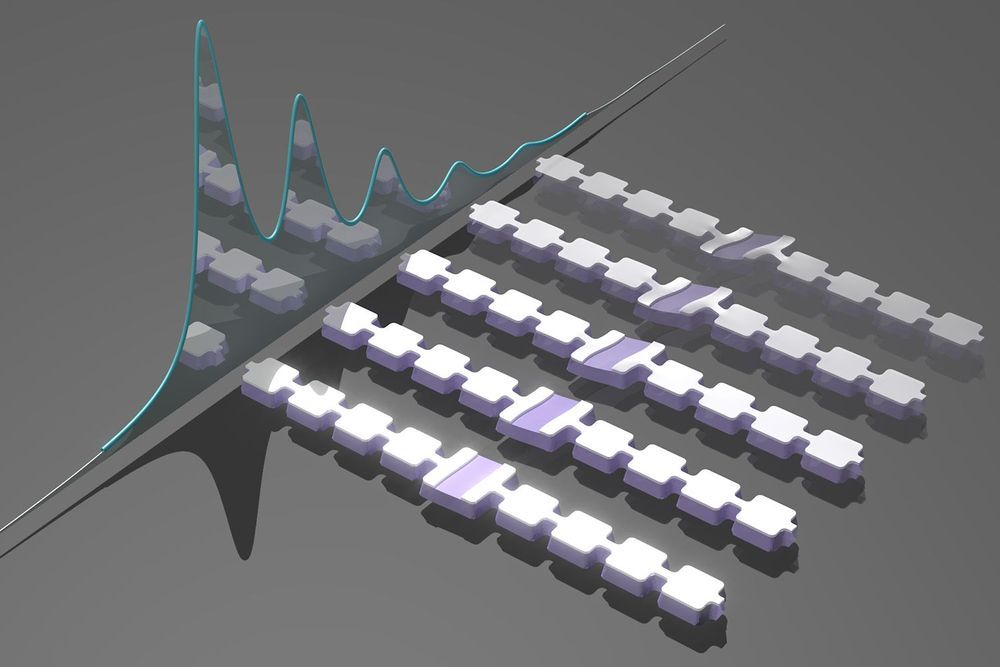

Researchers have designed a tile set of DNA molecules that can carry out robust reprogrammable computations to execute six-bit algorithms and perform a variety of simple tasks. The system, which works thanks to the self-assembly of DNA strands designed to fit together in different ways while executing the algorithm, is an important milestone in constructing a universal DNA-based computing device.

The new system makes use of DNA’s ability to be programmed through the arrangement of its molecules. Each strand of DNA consists of a backbone and four types of molecules known as nucleotide bases – adenine, thymine, cytosine, and guanine (A, T, C, and G) – that can be arranged in any order. This order represents information that can be used by biological cells or, as in this case, by artificially engineered DNA molecules. The A, T, C, and G have a natural tendency to pair up with their counterparts: A base pairs with T, and C pairs with G. And a sequence of bases pairs up with a complementary sequence: ATTAGCA pairs up with TGCTAAT (in the reverse orientation), for example.
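The pairing rule in the paragraph above can be sketched in a few lines of Python. This is only an illustration of base pairing, not the researchers' tile design; the names `PAIRS`, `reverse_complement` and `binds` are made up for this example.

```python
# Base-pairing rules described above: A pairs with T, C pairs with G.
PAIRS = {"A": "T", "T": "A", "C": "G", "G": "C"}

def reverse_complement(seq: str) -> str:
    """Return the strand that pairs with `seq`, read in the reverse orientation."""
    return "".join(PAIRS[base] for base in reversed(seq.upper()))

def binds(strand_a: str, strand_b: str) -> bool:
    """True if the two strands are fully complementary (hypothetical helper)."""
    return reverse_complement(strand_a) == strand_b.upper()

print(reverse_complement("ATTAGCA"))  # TGCTAAT
print(binds("ATTAGCA", "TGCTAAT"))    # True
```

Applying `reverse_complement` twice returns the original sequence, which is why either strand of a pair carries the same information.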

The DNA tile.

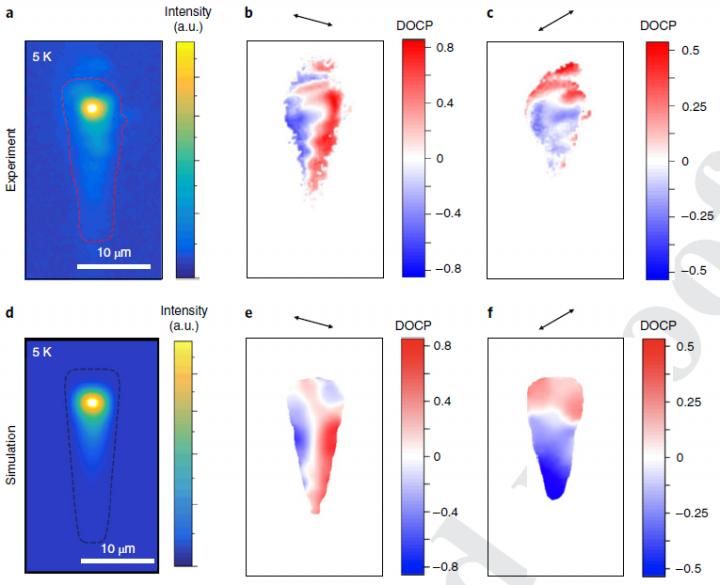

An international research team has studied how photons travel in the plane of the world’s thinnest semiconductor crystal. The results of the physicists’ work open the way to the creation of monoatomic optical transistors — components for quantum computers, potentially capable of making calculations at the speed of light.

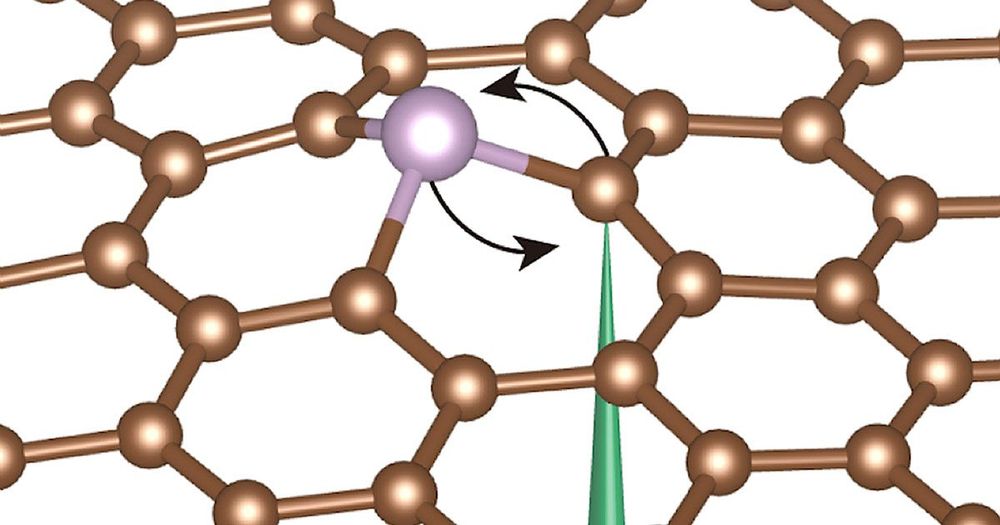

The fine art of adding impurities to silicon wafers lies at the heart of semiconductor engineering and, with it, much of the computer industry. But this fine art isn’t yet so finely tuned that engineers can manipulate impurities down to the level of individual atoms.

As technology scales down to the nanometer size and smaller, though, the placement of individual impurities will become increasingly significant. Which makes interesting the announcement last month that scientists can now rearrange individual impurities (in this case, single phosphorus atoms) in a sheet of graphene by using electron beams to knock them around like croquet balls on a field of grass.

The finding suggests a new vanguard of single-atom electronic engineering. According to research team member Ju Li, professor of nuclear science and engineering at MIT, gone are the days when individual atoms could only be moved around mechanically, often clumsily, on the tip of a scanning tunneling microscope.