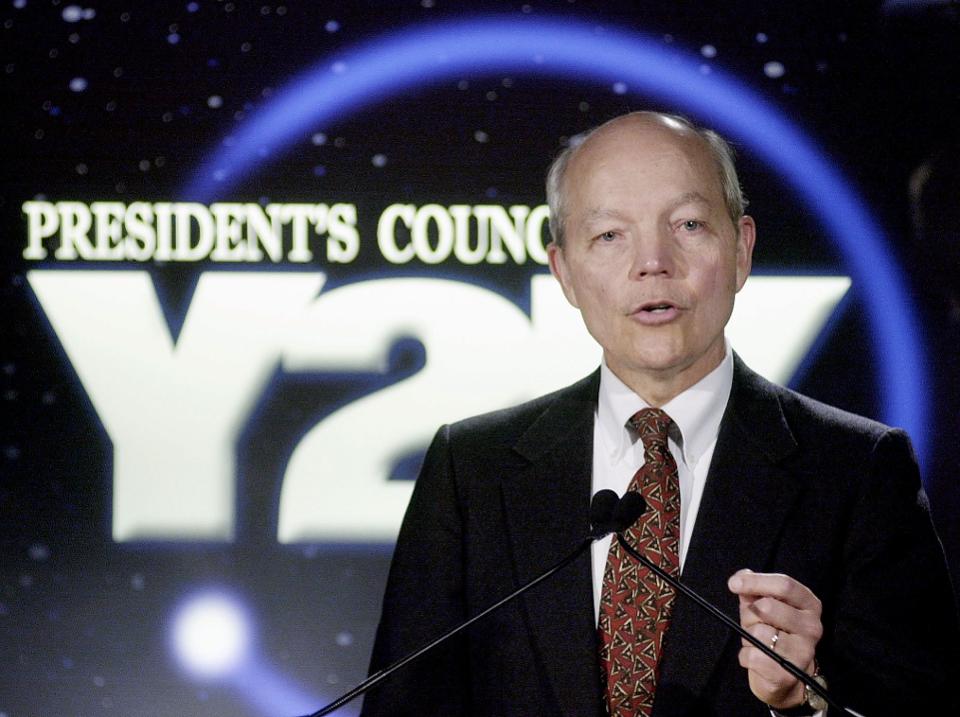

For a time 20 years ago, millions of people, including corporate chiefs and government leaders, feared that the internet would crash on New Year’s Eve and bring much of civilization crumbling down with it. The reason: computers around the world weren’t equipped to handle the year 2000. Their software stored years as two digits. When the year 99 gave way to the year 00, programs would treat dates as if they fell in 1900, a century earlier, and system after system would fail in an almost endless chain of dominoes. Billions of dollars were spent preparing for what seemed almost inevitable.
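To make the two-digit problem concrete, here is a minimal sketch in Python. It is an illustration only, not code from any real system of the era: the function names `expand_two_digit_year` and `account_age` are hypothetical, and the hard-coded 1900 century is an assumption standing in for how such software often behaved.

```python
def expand_two_digit_year(yy: int) -> int:
    """Turn a stored two-digit year into a full year.

    Assumption for illustration: the century is hard-coded as 1900.
    """
    return 1900 + yy


def account_age(opened_yy: int, current_yy: int) -> int:
    """Compute an age in years by subtracting two-digit years directly."""
    return current_yy - opened_yy


# In 1999, everything looks fine.
print(expand_two_digit_year(99))  # 1999
print(account_age(95, 99))        # 4

# After the rollover, "00" is read as 1900 and the arithmetic goes negative.
print(expand_two_digit_year(0))   # 1900
print(account_age(95, 0))         # -95
```

An account opened in 1995 is four years old in 1999, then suddenly minus 95 years old a day later. Any interest calculation, expiry check or sort order built on that number would go wrong with it.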

Twenty years ago, the world feared that a technological doomsday was nigh. It wasn’t, but Y2K had a lot of prescient things to say about how we interact with tech.