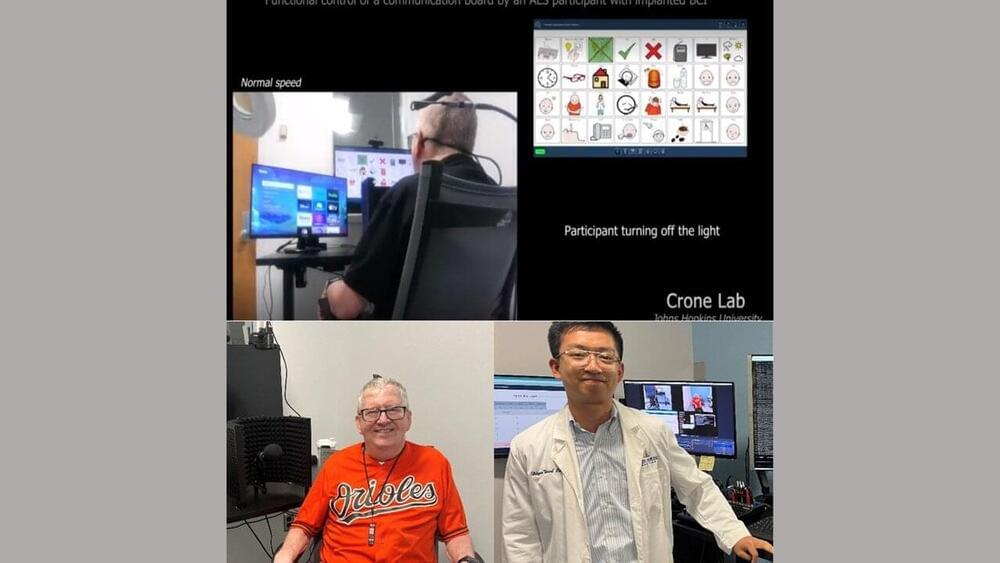

It’s the day after the Baltimore Orioles clinched the American League East Championship with their 100th win of the season, and lifelong fan Tim Evans is wearing his pride on his sleeve.

“It’s so great,” Evans, 62, says with a huge smile, wearing his orange O’s jersey.

The last time the Orioles won the AL East was in 2014, the same year Evans was diagnosed with amyotrophic lateral sclerosis (ALS), a progressive nervous system disease that causes muscle weakness and loss of motor and speech functions. Evans currently has severe speech and swallowing problems. He can talk slowly, but it’s hard for most people to understand him.