Phosphorus, the element critical for life's origin and life on Earth, may even be on Venus.

Scientists studying the origin of life in the universe often focus on a few critical elements, particularly carbon, hydrogen, and oxygen. But two new papers highlight the importance of phosphorus for biology: an assessment of where things stand with a recent claim about possible life in the clouds of Venus, and a look at how reduced phosphorus compounds produced by lightning might have been critical for life early in our own planet’s history.

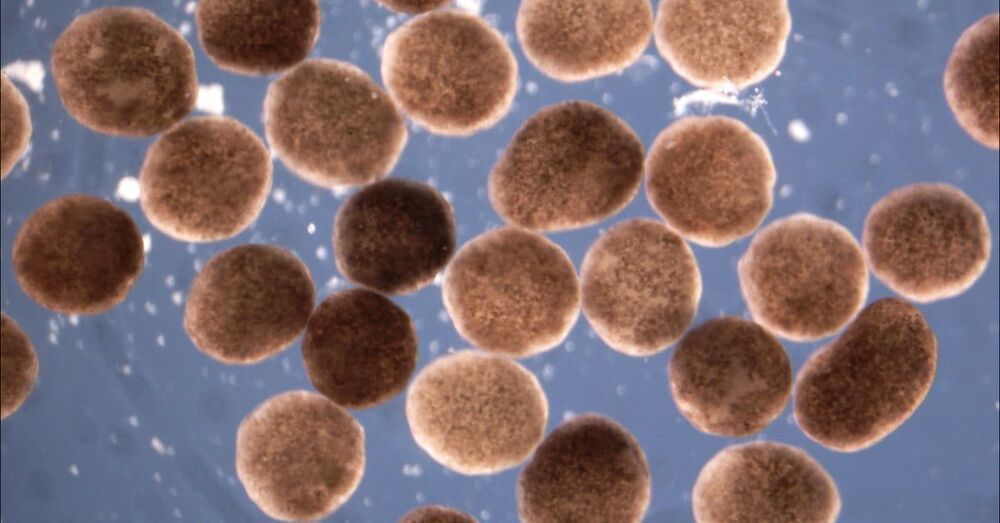

First, a little biochemistry: phosphine (PH₃) is a reduced phosphorus compound with one phosphorus atom and three hydrogen atoms. Phosphorus is also found in its reduced form in the phosphide mineral schreibersite, in which the phosphorus atom binds to three metal atoms (either iron or nickel). In its reduced form, phosphorus is much more reactive and useful for life than is phosphate, where the phosphorus atom binds to four oxygen atoms. Phosphorus is also the element that is most enriched in biological molecules as compared to non-biological molecules, so it’s not a bad place to start when you’re hunting for life.

In the second of the new papers, Benjamin Hess from Yale University and colleagues highlight the contribution of lightning as a source of reduced phosphorus compounds such as schreibersite. It has long been recognized that meteorites supplied much of the reduced phosphorus needed for the origin of life on Earth. But Hess thinks the contribution of lightning has been underestimated. For one thing, lightning was much more common early in our planet’s history. The authors calculate that it could have produced up to 10,000 kilograms of reduced phosphorus compounds per year, which may have been enough to jump-start life, especially because we don’t know how much of the reduced phosphorus from meteorites actually survives impact with Earth in that form.