In the novel-turned-movie Ready Player One by Ernest Cline, the protagonist escapes to an online realm aptly called OASIS. Instrumental to the OASIS experience is his haptic (relating to the sense of touch) bodysuit, which lets him move through and interact with the virtual world using his body. He can even switch on tactile sensations to feel every gut punch, or a kiss from a badass online girl.

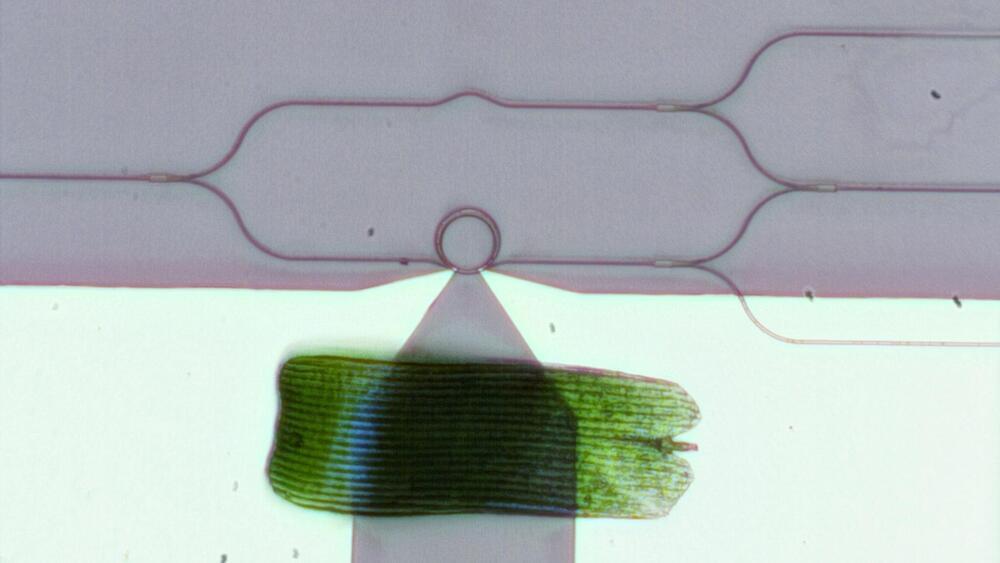

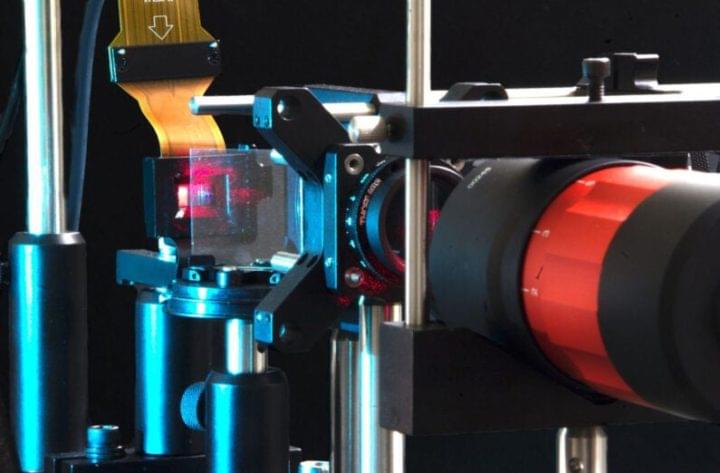

While no such technology is commercially available yet, Meta, the company formerly known as Facebook, is in the early stages of developing haptic gloves to bring the virtual world to our fingertips. The gloves have been in the works for the past seven years, the company recently said, and there are still a few more years to go.

These gloves would let the wearer not only interact with and control the virtual world but also experience it much as one experiences the physical world. The wearer would use the gloves together with an AR or VR headset. A video in a Meta blog post shows two users having a remote thumb-wrestling match. In their VR headsets, they see a pair of disembodied hands mirroring the motions their own hands are making. Through their gloves, they feel every squeeze and twitch of their partner's hand, or at least that's the idea.