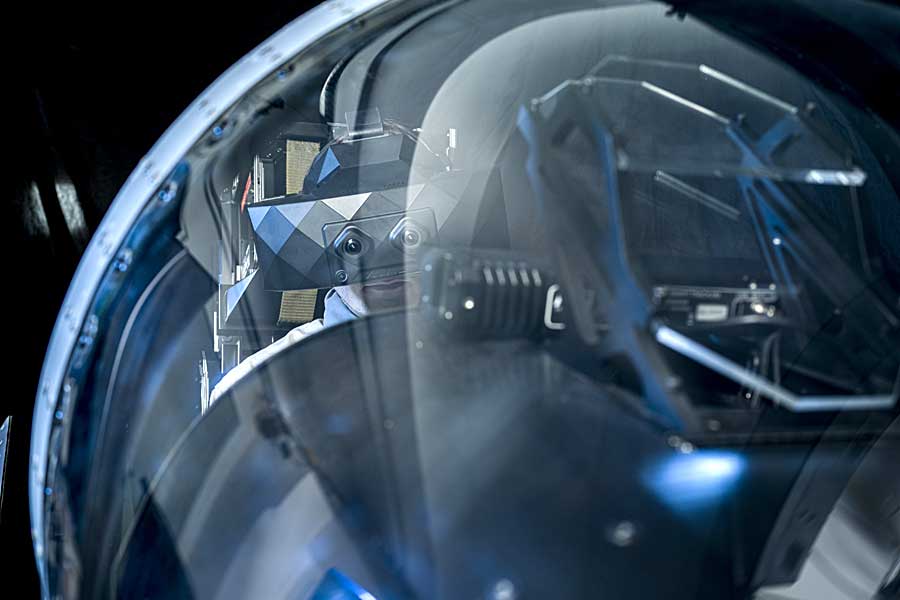

Vrgineers and Advanced Realtime Tracking (ART) are demonstrating the combination of the XTAL 3 headset and SMARTTRACK3/M in a mixed reality pilot trainer. The partnership between the two technology companies started in 2018. At IT2EC 2023 in Rotterdam, the SMARTTRACK3/M will be on display integrated into an F-35-like Classroom Trainer manufactured and delivered to the USAF and RAF. This combination of the latest ART infrared all-in-one hardware and Vrgineers algorithms for cockpit motion compensation creates a previously unseen level of immersion for mixed reality training. One of the challenges in next-generation pilot training using virtual technology and motion platforms is the alignment of the pilot’s position in the cockpit. By overcoming this issue, the simulator industry is moving toward eliminating the disadvantages of simulated training.

“We are continuously working on removing the technological challenges of modern simulators, one of which is caused by the distance between the front-facing camera position and the user’s eyes. We are developing advanced algorithms for motion compensation to minimize the shift between the virtual and physical scenes, making the experience realistic. The durability and compact size of SMARTTRACK3/M, which was optimized for use in cockpits, allows us as a training device integrator to make it a comprehensive part of a simulation,” says Marek Polcak, CEO of Vrgineers.

“This is the application SMARTTRACK3/M was designed for. We have taken the proven hardware from the SMARTTRACK3 and adapted it to the limited space available. As a result, we have the precision and the reliability of a seasoned system in a form factor that fits simulator cockpits,” says Andreas Werner, business development manager for simulations at ART.