With generative AI models, researchers combined robotics data from different sources to help robots learn better.

MIT researchers developed a technique that uses generative AI models to combine robotics training data across domains, modalities, and tasks. They build a single combined strategy from several different datasets, which enables a robot to learn to perform new tasks in unseen environments.

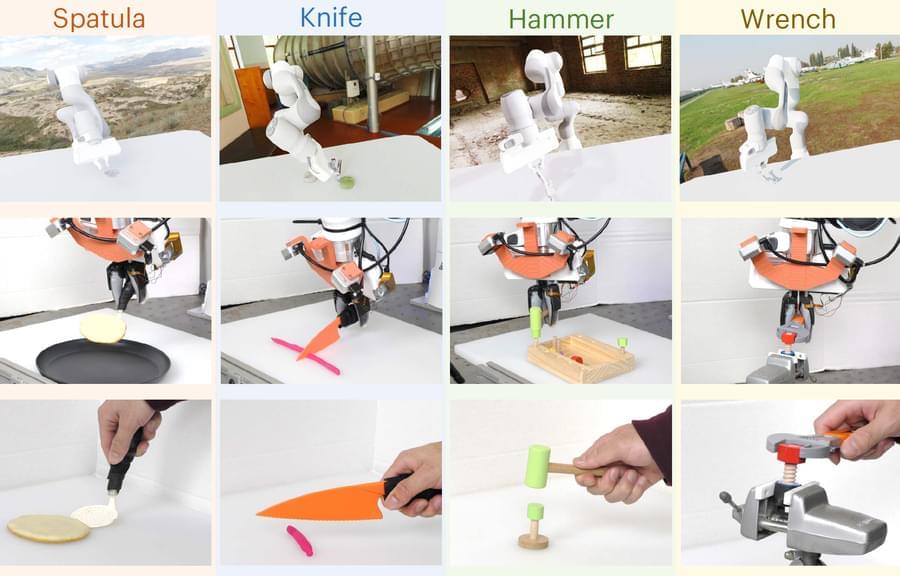

Let’s say you want to train a robot so it understands how to use tools and can then quickly learn to make repairs around your house with a hammer, wrench, and screwdriver. To do that, you would need an enormous amount of data demonstrating tool use.

Existing robotic datasets vary widely in modality: some include color images, for instance, while others consist of tactile imprints. Data may also be collected in different domains, such as simulation or human demonstrations. And each dataset may capture a unique task and environment.