🧠 New Graph Neural Network Technique 🔥

NVIDIA researchers developed WholeGraphStor, a novel #GNN memory optimization.

Storing entire graphs in a compressed format reduces memory footprint,…

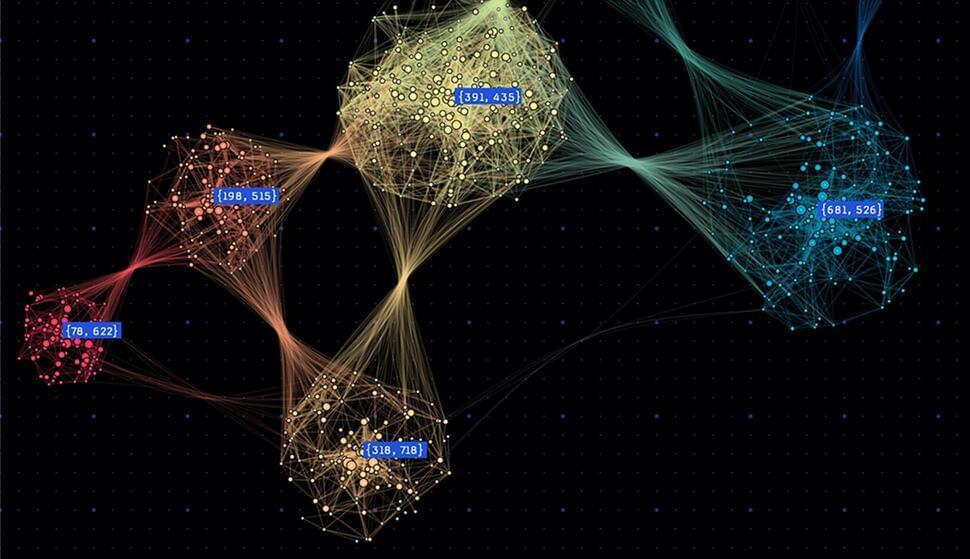

Graph neural networks (GNNs) have revolutionized machine learning for graph-structured data. Unlike traditional neural networks, GNNs excel at capturing intricate relationships in graphs, powering applications from social networks to chemistry. They are particularly effective for tasks such as node classification, where they predict labels for graph nodes, and link prediction, where they predict whether an edge exists between two nodes.

However, processing a large graph in a single forward or backward pass can be computationally expensive and memory-intensive.

The workflow for large-scale GNN training typically starts with subgraph sampling, which enables mini-batch training. Next comes feature gathering, which collects the features of the nodes in each sampled subgraph so that the needed contextual information is available. The sampled subgraphs and their extracted features are then used for neural network training. This is the stage where GNNs aggregate information from neighboring nodes and iteratively propagate node knowledge.
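To make the three stages concrete, here is a minimal pure-Python sketch of one mini-batch step: one-hop neighbor sampling, feature gathering, and a single mean-aggregation layer. It is an illustration only, not how any particular library implements this. The function names (`sample_subgraph`, `gather_features`, `mean_aggregate`), the toy graph, and the feature values are all hypothetical, and mean aggregation is just one simple choice of aggregator.

```python
import random

def sample_subgraph(adj, seeds, fanout, rng):
    """One-hop neighbor sampling: keep at most `fanout` neighbors per seed node."""
    sampled = {}
    for node in seeds:
        neighbors = adj.get(node, [])
        k = min(fanout, len(neighbors))
        sampled[node] = rng.sample(neighbors, k)
    return sampled

def gather_features(features, sampled):
    """Collect feature vectors only for nodes that appear in the sampled subgraph."""
    needed = set(sampled)
    for neighbors in sampled.values():
        needed.update(neighbors)
    return {node: features[node] for node in needed}

def mean_aggregate(sampled, gathered):
    """Average each seed's own features with those of its sampled neighbors."""
    out = {}
    for node, neighbors in sampled.items():
        rows = [gathered[node]] + [gathered[n] for n in neighbors]
        dim = len(gathered[node])
        out[node] = [sum(r[i] for r in rows) / len(rows) for i in range(dim)]
    return out

# Hypothetical toy graph (adjacency lists) and 2-dimensional node features.
adj = {0: [1, 2, 3], 1: [0, 2], 2: [0, 1, 3], 3: [0, 2]}
features = {0: [1.0, 0.0], 1: [0.0, 1.0], 2: [1.0, 1.0], 3: [0.5, 0.5]}

rng = random.Random(0)   # fixed seed so the sampling is repeatable
seeds = [0, 1]           # the mini-batch: nodes we want representations for

sampled = sample_subgraph(adj, seeds, fanout=2, rng=rng)   # stage 1: sampling
gathered = gather_features(features, sampled)              # stage 2: feature gathering
hidden = mean_aggregate(sampled, gathered)                 # stage 3: aggregation
```

Only the features of nodes that appear in the sampled subgraph are touched in stage 2, which is why the gathering step, rather than the full feature table, determines how much data each mini-batch has to move. A real training loop would stack several such layers with learned weights and repeat the three stages for every mini-batch.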