Artificial intelligence and machine learning have made tremendous progress in the past few years, including the recent launch of ChatGPT and AI art generators. One thing that is still missing, however, is an energy-efficient way to generate and store long- and short-term memories in a form factor comparable to that of a human brain. A team of researchers in the McKelvey School of Engineering at Washington University in St. Louis has developed an energy-efficient way to consolidate long-term memories on a tiny chip.

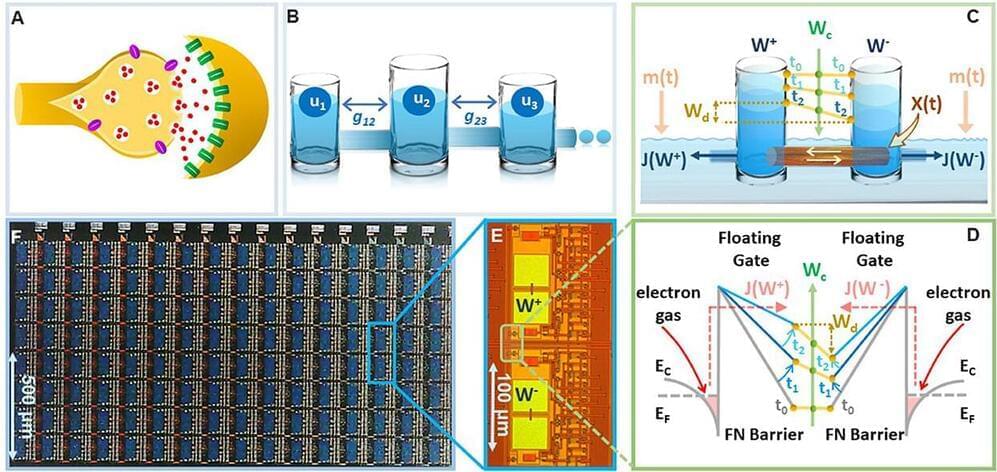

Shantanu Chakrabartty, the Clifford W. Murphy Professor in the Preston M. Green Department of Electrical & Systems Engineering, and members of his lab developed a relatively simple device that mimics the dynamics of the brain's synapses, the connections that allow neurons to pass signals to one another. The artificial synapses used in many modern AI systems are simple by comparison, whereas biological synapses can potentially store complex memories thanks to an exquisite interplay between different chemical pathways.

Chakrabartty's group showed that their artificial synapse could mimic some of these dynamics, which could allow AI systems to continuously learn new tasks without forgetting how to perform old ones. The results were published Jan. 13 in Frontiers in Neuroscience.
