Last month, we showed an earlier version of this robot whose vision system we had trained using domain randomization: that is, by showing it simulated objects with a variety of colors, backgrounds, and textures, without using any real images.

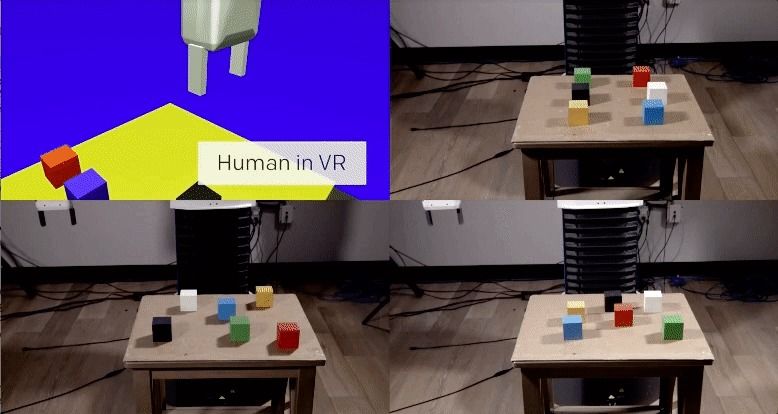
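
To make the idea of domain randomization concrete, here is a minimal Python sketch. It is illustrative only and is not the pipeline used to train this robot: the names `SceneParams`, `sample_scene`, and `make_training_scenes`, along with the counts of backgrounds and textures, are hypothetical. It assumes a simulator that can render a scene from an object color, a background index, and a texture index.

```python
import random
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class SceneParams:
    """Appearance settings for one simulated training image (hypothetical)."""
    object_rgb: Tuple[float, float, float]  # each channel in [0, 1)
    background_id: int                      # index into a pool of backgrounds
    texture_id: int                         # index into a pool of textures


def sample_scene(rng: random.Random,
                 n_backgrounds: int = 100,
                 n_textures: int = 50) -> SceneParams:
    """Draw one random combination of color, background, and texture."""
    return SceneParams(
        object_rgb=(rng.random(), rng.random(), rng.random()),
        background_id=rng.randrange(n_backgrounds),
        texture_id=rng.randrange(n_textures),
    )


def make_training_scenes(n: int, seed: int = 0) -> List[SceneParams]:
    """Generate n randomized scene descriptions, reproducible for a given seed."""
    rng = random.Random(seed)
    return [sample_scene(rng) for _ in range(n)]


if __name__ == "__main__":
    # Each entry would be handed to a simulator to render one training image.
    for params in make_training_scenes(3):
        print(params)
```

The design intent is that every training image looks different, so a vision model trained on these scenes cannot rely on any one color, background, or texture and has to pick up on the object's shape and position instead.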

Now, we’ve developed and deployed a new algorithm, one-shot imitation learning, allowing a human to communicate how to do a new task by performing it in VR. Given a single demonstration, the robot is able to solve the same task from an arbitrary starting configuration.

Caption: Our system can learn a behavior from a single demonstration delivered within a simulator, then reproduce that behavior in different setups in reality.
